Somebody asked me this question in a job interview, and I replied that their joint distribution is always Gaussian. I thought that I could always write down a bivariate Gaussian using their means, variances, and covariance. I am wondering: can there be a case in which the joint distribution of two Gaussian random variables is not Gaussian?


Another example from [Wikipedia](http://en.wikipedia.org/wiki/Multivariate_normal_distribution#Two_normally_distributed_random_variables_need_not_be_jointly_bivariate_normal). Of course, if the variables are independent and marginally Gaussian, then they are jointly Gaussian. – Jun 09 '12 at 23:30

An example here: http://www.wu.ece.ufl.edu/books/math/probability/jointlygaussian.pdf – Stéphane Laurent Jun 10 '12 at 09:01

4 Answers

The bivariate normal distribution is the exception, not the rule!

It is important to recognize that "almost all" joint distributions with normal marginals are not the bivariate normal distribution. That is, the common view that joint distributions with normal marginals that are not bivariate normal are somehow "pathological" is a bit misguided.

Certainly, the multivariate normal is extremely important due to its stability under linear transformations, and so receives the bulk of attention in applications.

Examples

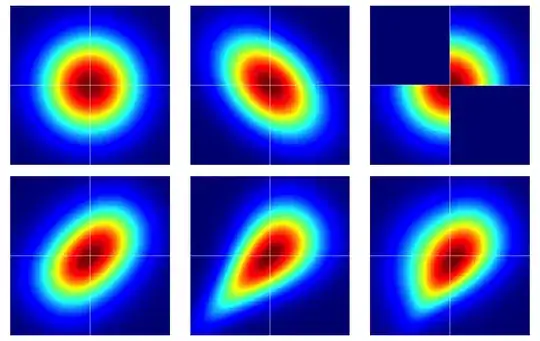

It is useful to start with some examples. The figure contains heatmaps of six bivariate distributions, all of which have standard normal marginals. The left and middle ones in the top row are bivariate normals; the remaining ones are not (as should be apparent). They are described further below.

The bare bones of copulas

Properties of dependence are often efficiently analyzed using copulas. A bivariate copula is just a fancy name for a probability distribution on the unit square $[0,1]^2$ with uniform marginals.

Suppose $C(u,v)$ is a bivariate copula. Then, immediately from the above, we know that $C(u,v) \geq 0$, $C(u,1) = u$ and $C(1,v) = v$, for example.

We can construct bivariate random variables on the Euclidean plane with prespecified marginals by a simple transformation of a bivariate copula. Let $F_1$ and $F_2$ be prescribed marginal distributions for a pair of random variables $(X,Y)$. Then, if $C(u,v)$ is a bivariate copula, $$ F(x,y) = C(F_1(x), F_2(y)) $$ is a bivariate distribution function with marginals $F_1$ and $F_2$. To see this last fact, just note that $$ \renewcommand{\Pr}{\mathbb P} \Pr(X \leq x) = \Pr(X \leq x, Y < \infty) = C(F_1(x), F_2(\infty)) = C(F_1(x),1) = F_1(x) \>. $$ The same argument works for $F_2$.
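Here is a minimal Python sketch of this construction. It uses only the standard library; the thread's own snippets are in R. It assumes the Clayton copula $C(u,v) = (u^{-\theta} + v^{-\theta} - 1)^{-1/\theta}$ as the example copula. The pair is sampled by the standard conditional-inversion method: solve $\partial C/\partial u = w$ for $v$ with $w$ uniform. Each coordinate is then mapped through $\Phi^{-1}$, which is the sampling counterpart of $F(x,y) = C(\Phi(x), \Phi(y))$. All function names are hypothetical helpers, not from any library.

```python
import random
from statistics import NormalDist, fmean, pvariance

PHI = NormalDist()  # standard normal: PHI.cdf is Phi, PHI.inv_cdf is Phi^{-1}
EPS = 1e-12         # keeps inv_cdf away from exactly 0 or 1


def open_uniform(rng):
    """Uniform draw on the open interval (0, 1)."""
    while True:
        u = rng.random()
        if u > 0.0:
            return u


def sample_clayton(theta, n, rng):
    """Draw n pairs (u, v) from a Clayton copula with theta > 0.

    Conditional inversion: solve dC/du (u, v) = w for v, with w ~ U(0, 1).
    """
    pairs = []
    for _ in range(n):
        u, w = open_uniform(rng), open_uniform(rng)
        v = (u ** -theta * (w ** (-theta / (1.0 + theta)) - 1.0) + 1.0) ** (-1.0 / theta)
        pairs.append((u, v))
    return pairs


def clamp(p):
    """Guard against floating-point endpoints before calling inv_cdf."""
    return min(max(p, EPS), 1.0 - EPS)


def with_normal_marginals(pairs):
    """(X, Y) = (Phi^{-1}(U), Phi^{-1}(V)), so that F(x, y) = C(Phi(x), Phi(y))."""
    return [(PHI.inv_cdf(clamp(u)), PHI.inv_cdf(clamp(v))) for u, v in pairs]


def correlation(xs, ys):
    """Pearson sample correlation."""
    mx, my = fmean(xs), fmean(ys)
    cov = fmean([(x - mx) * (y - my) for x, y in zip(xs, ys)])
    return cov / (pvariance(xs, mx) * pvariance(ys, my)) ** 0.5


if __name__ == "__main__":
    xy = with_normal_marginals(sample_clayton(1.0, 20000, random.Random(0)))
    xs, ys = [x for x, _ in xy], [y for _, y in xy]
    print("means:", fmean(xs), fmean(ys))
    print("variances:", pvariance(xs), pvariance(ys))
    print("correlation:", correlation(xs, ys))
```

The sample marginals should come out close to $N(0,1)$. What makes the pair non-Gaussian is the Clayton dependence structure, for example its extra clustering in the lower-left tail. No correlation parameter of a bivariate normal reproduces that clustering.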

For continuous $F_1$ and $F_2$, Sklar's theorem asserts a converse implying uniqueness. That is, given a bivariate distribution $F(x,y)$ with continuous marginals $F_1$, $F_2$, the corresponding copula is unique (on the appropriate range space).

The bivariate normal is exceptional

Sklar's theorem tells us (essentially) that there is only one copula that produces the bivariate normal distribution. This is the aptly named Gaussian copula, which has density on $[0,1]^2$ $$ c_\rho(u,v) := \frac{\partial^2}{\partial u \, \partial v} C_\rho(u,v) = \frac{\varphi_{2,\rho}(\Phi^{-1}(u),\Phi^{-1}(v))}{\varphi(\Phi^{-1}(u)) \varphi(\Phi^{-1}(v))} \>, $$ where the numerator is the bivariate normal density with correlation $\rho$ evaluated at $\Phi^{-1}(u)$ and $\Phi^{-1}(v)$.

But there are many other copulas, and, via the transformation described in the previous section, each of them yields a bivariate distribution with normal marginals that is not the bivariate normal.

Some details on the examples

Note that if $C(u,v)$ is an arbitrary copula with density $c(u,v)$, the corresponding bivariate density with standard normal marginals under the transformation $F(x,y) = C(\Phi(x),\Phi(y))$ is $$ f(x,y) = \varphi(x) \varphi(y) c(\Phi(x), \Phi(y)) \> . $$

Note that by applying the Gaussian copula in the above equation, we recover the bivariate normal density. But, for any other choice of $c(u,v)$, we will not.
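The claim that the Gaussian copula recovers the bivariate normal density can be checked pointwise. The sketch below is Python with the standard library only, and the helper names are hypothetical. It codes $c_\rho$ exactly as in the density formula above, assuming $|\rho| < 1$. It then compares $\varphi(x)\varphi(y)\,c_\rho(\Phi(x),\Phi(y))$ with the standard bivariate normal density $\varphi_{2,\rho}(x,y)$.

```python
import math
from statistics import NormalDist

STD = NormalDist()  # phi = STD.pdf, Phi = STD.cdf, Phi^{-1} = STD.inv_cdf


def bivariate_normal_pdf(x, y, rho):
    """phi_{2,rho}: standard bivariate normal density with correlation rho, |rho| < 1."""
    det = 1.0 - rho * rho
    q = (x * x - 2.0 * rho * x * y + y * y) / det
    return math.exp(-0.5 * q) / (2.0 * math.pi * math.sqrt(det))


def gaussian_copula_density(u, v, rho):
    """c_rho(u, v) = phi_{2,rho}(s, t) / (phi(s) phi(t)) with s, t = Phi^{-1}(u), Phi^{-1}(v)."""
    s, t = STD.inv_cdf(u), STD.inv_cdf(v)
    return bivariate_normal_pdf(s, t, rho) / (STD.pdf(s) * STD.pdf(t))


def density_with_normal_marginals(x, y, copula_density):
    """f(x, y) = phi(x) phi(y) c(Phi(x), Phi(y)) for a copula density c."""
    return STD.pdf(x) * STD.pdf(y) * copula_density(STD.cdf(x), STD.cdf(y))


if __name__ == "__main__":
    rho = -0.4
    for x, y in [(0.0, 0.0), (1.0, -0.5), (-1.5, 2.0)]:
        f = density_with_normal_marginals(x, y, lambda u, v: gaussian_copula_density(u, v, rho))
        print(x, y, f, bivariate_normal_pdf(x, y, rho))
```

Passing any other copula density as `copula_density` gives a valid density with standard normal marginals. That density is no longer $\varphi_{2,\rho}$.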

The examples in the figure were constructed as follows (going across each row, one column at a time):

- Bivariate normal with independent components.

- Bivariate normal with $\rho = -0.4$.

- The example given in this answer of Dilip Sarwate. It can easily be seen to be induced by the copula $C(u,v)$ with density $c(u,v) = 2 (\mathbf 1_{(0 \leq u \leq 1/2, 0 \leq v \leq 1/2)} + \mathbf 1_{(1/2 < u \leq 1, 1/2 < v \leq 1)})$.

- Generated from the Frank copula with parameter $\theta = 2$.

- Generated from the Clayton copula with parameter $\theta = 1$.

- Generated from an asymmetric modification of the Clayton copula with parameter $\theta = 3$.
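The third example is easy to simulate directly. In this Python sketch (standard library only; helper names are hypothetical), $U$ is uniform and $V$ is uniform on whichever half of $[0,1]$ $U$ fell into. This gives exactly the density $c(u,v)$ listed above. After mapping through $\Phi^{-1}$, both coordinates are standard normal, yet the pair never lands in the second or fourth quadrant. That rules out joint normality in both cases:

- A nondegenerate bivariate normal puts positive probability on every quadrant.
- A degenerate one is concentrated on a line.

```python
import random
from statistics import NormalDist, fmean, pvariance

STD = NormalDist()


def open_uniform(rng, lo=0.0, hi=1.0):
    """Uniform draw on the open interval (lo, hi)."""
    while True:
        x = lo + (hi - lo) * rng.random()
        if lo < x < hi:
            return x


def sample_same_half_copula(n, rng):
    """Copula with density 2 on [0, 1/2]^2 and (1/2, 1]^2, zero elsewhere,
    pushed through Phi^{-1} so that both coordinates are N(0, 1)."""
    pairs = []
    for _ in range(n):
        u = open_uniform(rng)
        # V is uniform on the same half of [0, 1] that U fell into
        v = open_uniform(rng, 0.0, 0.5) if u <= 0.5 else open_uniform(rng, 0.5, 1.0)
        pairs.append((STD.inv_cdf(u), STD.inv_cdf(v)))
    return pairs


if __name__ == "__main__":
    pairs = sample_same_half_copula(20000, random.Random(1))
    ys = [y for _, y in pairs]
    print("mean, variance of Y:", fmean(ys), pvariance(ys))
    print("draws in quadrants II or IV:", sum(x * y < 0 for x, y in pairs))
```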


+1 for the remark that the bivariate normal density is the exceptional case! – Dilip Sarwate Jun 10 '12 at 23:40


Maybe I am missing something, but if we start from $X_1, X_2\sim\mathcal N(0,1)$, the joint distribution $(X_1, X_2)$ is automatically defined, independently of any copula construction, and if we apply a non-Gaussian copula construction to their CDFs, it is true that we shall obtain a non-Gaussian CDF $F(x_1,x_2)$, but this function in general will not be the CDF of the pair of random variables $X_1, X_2$ we started with, right? – RandomGuy Jan 06 '17 at 11:06


Example of how to simulate as in lower right panel: `library(copula)` `kcf – half-pass Dec 29 '18 at 18:29

@RandomGuy, you’re missing an unstated assumption that $X_1, X_2$ are independent $N(0, 1)$. If you assume they’re independent, then yes, you know the joint distribution already. Without the independence assumption, knowing the marginal distributions does not give enough information to specify the joint distribution. – MentatOfDune Feb 19 '19 at 15:41

It is true that each element of a multivariate normal vector is itself normally distributed, and you can deduce their means and variances. However, it is not true that any two Gaussian random variables are jointly normally distributed. Here is an example:

Edit: In response to the consensus that a random variable that is a point mass can be thought of as a normally distributed variable with $\sigma^2=0$, I'm changing my example.

Let $X \sim N(0,1)$ and let $Y = X \cdot (2B-1)$ where $B$ is a ${\rm Bernoulli}(1/2)$ random variable. That is, $Y = \pm X$ each with probability $1/2$.

We first show that $Y$ has a standard normal distribution. By the law of total probability,

$$ P(Y \leq y) = \frac{1}{2} \Big( P(Y \leq y | B = 1) + P(Y \leq y | B = 0) \Big) $$

Next,

$$ P(Y \leq y | B = 0) = P(-X \leq y) = 1-P(X \leq -y) = 1-\Phi(-y) = \Phi(y) $$

where $\Phi$ is the standard normal CDF. Similarly,

$$ P(Y \leq y | B = 1) = P(X \leq y) = \Phi(y) $$

Therefore,

$$ P(Y \leq y) = \frac{1}{2} \Big( \Phi(y) + \Phi(y) \Big) = \Phi(y) $$

so, the CDF of $Y$ is $\Phi(\cdot)$, thus $Y \sim N(0,1)$.

Now we show that $X,Y$ are not jointly normally distributed. As @cardinal points out, one characterization of the multivariate normal is that every linear combination of its elements is normally distributed. $X,Y$ do not have this property, since

$$ Y+X = \begin{cases} 2X &\mbox{if } B = 1 \\ 0 & \mbox{if } B = 0. \end{cases} $$

Therefore $Y+X$ is a $50/50$ mixture of a $N(0,4)$ random variable and a point mass at 0, so it cannot be normally distributed.
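A quick simulation makes both halves of the argument concrete. This Python sketch (standard library only; the helper name is hypothetical) draws $X \sim N(0,1)$ and an independent fair sign $2B-1$. It then checks that $Y$ behaves like a standard normal. It also checks that $X + Y$ is exactly $0$ in roughly half of the draws, which is the point mass that rules out joint normality.

```python
import random
from statistics import NormalDist, fmean, pvariance


def sample_random_sign_pair(n, rng):
    """X ~ N(0, 1), B ~ Bernoulli(1/2) independent of X, Y = X * (2B - 1)."""
    pairs = []
    for _ in range(n):
        x = rng.gauss(0.0, 1.0)
        b = 1 if rng.random() < 0.5 else 0
        pairs.append((x, x * (2 * b - 1)))
    return pairs


if __name__ == "__main__":
    pairs = sample_random_sign_pair(20000, random.Random(2))
    ys = [y for _, y in pairs]
    # Y should look standard normal: compare P(Y <= 1) with Phi(1)
    print(fmean(ys), pvariance(ys), fmean([y <= 1.0 for y in ys]), NormalDist().cdf(1.0))
    # X + Y is exactly 0 whenever B = 0: a point mass of about 1/2
    print("P(X + Y == 0) ~", fmean([x + y == 0.0 for x, y in pairs]))
```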


I don't agree with this answer. A degenerate point mass of $1$ at $\mu$ is usually considered to be a degenerate Gaussian random variable with zero variance. Also, $(X, -X)$ are not jointly continuous though they are marginally continuous. For an example of two _jointly continuous_ random variables that are marginally Gaussian but not jointly Gaussian, see, for example, the latter half of [this answer](http://stats.stackexchange.com/a/25919/6633). – Dilip Sarwate Jun 10 '12 at 00:02

@DilipSarwate, the question was to give an example (if one exists) of two variables that are normally distributed but their joint distribution is not multivariate normal. This is an example. Most standard definitions of the normal distribution (e.g. wikipedia http://en.wikipedia.org/wiki/Normal_distribution) require the variance to be strictly positive, thus not including a point mass as part of the family of normal distributions. – Macro Jun 10 '12 at 00:04

A standard characterization of the multivariate Gaussian is that $X \in \mathbb R^{n}$ is multivariate Gaussian if and only if $a^T X$ is Gaussian for all $a \in \mathbb R^n$. As @Dilip hints at, it is worth considering if this is true for your example. – cardinal Jun 10 '12 at 00:12

${\boldsymbol a} = (1,1)$ is a counterexample, right? You'd get a point mass at 0. – Macro Jun 10 '12 at 00:13


I think that characterisation excludes $a=0$. I am also trying to get a believable reference @Macro – Jun 10 '12 at 00:16


Thank you @procrastinator. That was my thought as well. I couldn't immediately find a citation. – Macro Jun 10 '12 at 00:17

@Procrastinator: There's no reason to exclude it, and indeed, that would entail excluding *all* rank-deficient multivariate Gaussians. – cardinal Jun 10 '12 at 00:18


@cardinal So, do you say that if $X$ is multivariate Gaussian, then $0^T X$ is Gaussian? – Jun 10 '12 at 00:19

@Procrastinator: Why not? As I said, if you exclude $a = 0$ as a counterexample, you likewise implicitly exclude the class of degenerate multivariate Gaussians in its entirety (in that case there will *always* be an $a \neq 0$ such that $a^T X = 0$ almost surely). That doesn't seem to be a very common viewpoint. – cardinal Jun 10 '12 at 00:23

@cardinal I see your point, but then we have to consider if $\sigma=0$ is allowed (which is the Dirac delta if I am not mistaken). My religion says that $\sigma>0$ so I can call the normal distribution a continuous distribution. – Jun 10 '12 at 00:32

You're ultimately constructing a bivariate distribution, Macro. A distribution can be within many different *families* of distributions. There's no inconsistency there. In this case, there's no reason to exclude it, and, indeed, by doing so, one is implicitly throwing out a whole statistically relevant and commonly used family of distributions. :) – cardinal Jun 10 '12 at 00:32


@Procrastinator: So, what doesn't your religion like about [these](http://en.wikipedia.org/wiki/Multivariate_normal_distribution#Degenerate_case)? ;-) – cardinal Jun 10 '12 at 00:34

@cardinal What I just said :), that is not an (absolutely) continuous distribution. – Jun 10 '12 at 00:36

I'm not sure a multivariate normal with a singular covariance matrix is commonly used or statistically relevant. I think in that case, one would exclude the variable that is an exact linear combination of the others since they may want to compute the density function :) Regardless of that, I'm arguing a different, equivalent, condition that only deals with the univariate normal distribution. Why is the convention you're referring to more or less valid than the one I am? – Macro Jun 10 '12 at 00:37

Why don't they list under "related distributions" on wiki the connection between the Bernoulli and the Normal that should be included under your argument? I'm guessing if you added that edit, then it would be reverted quickly and this argument could be moved to the discussion page on wikipedia ;) – Macro Jun 10 '12 at 00:39


@Macro I think that $\sigma=0$ correspond to a Dirac delta instead of a Bernoulli. But this discussion might continue until someone find a believable reference and win the battle. – Jun 10 '12 at 00:47

@Procrastinator, I was saying that $\mu X$ if $X$ is a Bernoulli trial with $p=1$ is the same as $Z \sim N(\mu,0)$ and was joking that we'd have to add this to the "related distributions" tab on wikipedia if we are to start including the border values in the parameter spaces. – Macro Jun 10 '12 at 00:49

Since you apparently don't like appeals to rationality ;-), how about appeals to authority? (That's a joke, if it's not apparent.) I just happened upon this purely by accident as I was looking something else up: **Example 2.4**, page 22 of G. A. F. Seber and A. J. Lee, *Linear Regression Analysis*, 2nd. ed., Wiley. It quoteth: "Let $Y \sim \mathcal N(\mu,\sigma^2)$ and put $\mathbf Y' = (Y, -Y)$...Thus $\mathbf Y$ has a multivariate normal distribution." – cardinal Jun 10 '12 at 00:51


@cardinal But it looks a bit contradictory with Definition 2.1 regarding the positive definiteness of $\Sigma$, don't you think? – Jun 10 '12 at 01:07

@cardinal and Macro I see, everything depends on adopting Definition 2.1 or Definition 2.2. So, we are at a crossroads. Thanks for the discussion gentlemen. – Jun 10 '12 at 01:14


cardinal, I think it's pedantic to argue that a vector is still multivariate normal even if the covariance matrix is singular and thus the density doesn't exist (btw I still don't see evidence of a consensus on this), but I suppose it's your role to make such arguments ;) (That's a joke, if that's not clear) – Macro Jun 10 '12 at 02:06


@Procrastinator and Macro (and cardinal): As the one whose comment started this extensive discussion, let me point out that in at least one graduate course in statistics, a random vector obtained by applying a linear transformation to a vector of independent standard normal variables is defined to be a multivariate normal vector. So, $(1,-1)X = (X, -X)$ is a multivariate normal vector according to at least one more [statistician](http://web.as.uky.edu/statistics/users/viele/sta601s08/multinorm.pdf) than just cardinal, Seber and Lee (and the students that pass that course). – Dilip Sarwate Jun 10 '12 at 02:14

@DilipSarwate I see the point. Did you compare Definitions 2.1 and 2.2? It is just intriguing how the author starts with a definition and then switches to a more general/incompatible definition. But I agree that the more general one is nicer. That example is MVN with respect to Definition 2.2 but not w.r.t. Definition 2.1 that is the main point of the discussion. Even in the link you posted, the author says "Thus, in what follows, we will assume a nonsingular covariance matrix $\Sigma$." – Jun 10 '12 at 02:28


@Procrastinator I don't have access to the Seber and Lee book and so cannot compare the two definitions. But many people do take cardinal's definition earlier in this thread as the canonical definition of multivariate normal vector. See for example, Didier Piau's comment on one of my [answers](http://math.stackexchange.com/a/93108/15941) on math.SE. – Dilip Sarwate Jun 10 '12 at 02:33


@DilipSarwate I thought you checked it because you *cited* it in your previous comment. But we are not in disagreement, sometimes people are interested only on the subfamily of absolutely continuous members, sometimes in the whole family including singular members. – Jun 10 '12 at 02:37

@Procrastinator: There are good reasons to be inclusive in the definition here (i.e., adopt Def. 2.2). I was quite surprised to see S&L *start* with the definition they do. The most natural definition to me comes from considering linear transformations of iid standard normals. Reading off properties from the characteristic function is straightforward and there is no striking need to "force" a "full-rank" restriction on the definition. On the other hand, allowing for degeneracy has rich applications in linear model theory and asymptotics of categorical data analysis. – cardinal Jun 10 '12 at 15:01


Here are two brief ones (a) they show up in the analysis of the distributions of certain quadratic forms in linear model theory and (b) they greatly streamline the "standard" proofs of the asymptotic distributions of some goodness-of-fit statistics for categorical data. :) You may also be interested in C. R. Rao and S. J. Mitra (1972), [Generalized inverse of a matrix and its applications](http://projecteuclid.org/euclid.bsmsp/1200514113), *Proc. 6th Berkeley Symp. Math. Stat.*, Vol I, 601-620. – cardinal Jun 10 '12 at 15:42

The discussion is about definitions. Clearly, if the covariance matrix by definition is required to be non-singular *Macro* provides an example, but this is not an example according to the more liberal definition that @cardinal refers to. One good reason to prefer the more liberal definition is that then *all* linear transformations of normal variables are normal. In particular, in linear regression with normal errors the residuals have a joint normal distribution but the covariance matrix is singular. – NRH Jun 10 '12 at 20:10


@DilipSarwate (and cardinal) - I'm conceding the technical point, since there appears to be consensus. I've changed my example. Thanks for the interesting discussion. – Macro Jun 10 '12 at 21:35

@NRH (and Dilip, cardinal), I'm beginning to understand the reasons for including such examples in the family of normal distributions, despite the fact that the density doesn't exist in such instances. I've given a new example that doesn't rely on specifying that $\sigma^2 = 0$ precludes a random variable from being called "normally distributed". – Macro Jun 10 '12 at 21:37


@Macro: This new example is a better one, I think, and was the first "simple" one that came to my head when I saw this problem. I'm glad to see you give it. Note that $(2B-1)$ is an example of what are often called Rademacher functions. – cardinal Jun 10 '12 at 22:16


@Macro: I believe there are some typos that need correcting. Since $Y = X(2B-1) = X$ when $B = 1$, it follows that $$Y-X = \begin{cases}0, & \text{if}~ B = 1,\\-2X, & \text{if}~ B = 0,\end{cases}$$ Do you _have_ to use $Y-X$? Why not just $X+Y = 2X$ when $B = 1$ and $0$ if $B = 0$? – Dilip Sarwate Jun 10 '12 at 23:38

@DilipSarwate, yes that was a typo. I meant $Y+X$, not $Y-X$. Thank you for catching that. – Macro Jun 11 '12 at 00:00

@Macro, Cardinal, Dilip: I think that the discussion here spins around two distinct questions: First, in the definition of the (univariate) normal density, do we admit that a constant is a normal random variable? If so, the Proposition, quoted in the wikipedia page that "Every linear combination of its components is univariate normal" is ok, because if we choose the coefficients of this combination to be zero, we obtain the zero random variable. Second, do we admit that a point mass at $\mu$ is normal? I think these two questions are related, but distinct and both relevant to this discussion. – RandomGuy Jan 06 '17 at 09:46

The following post contains an outline of a proof, just to give the main ideas and get you started.

Let $z = (Z_1, Z_2)$ be two independent Gaussian random variables and let $x = (X_1, X_2)$ be $$ x = \begin{pmatrix} X_1 \\ X_2 \end{pmatrix} = \begin{pmatrix} \alpha_{11} Z_1 + \alpha_{12} Z_2\\ \alpha_{21} Z_1 + \alpha_{22} Z_2 \end{pmatrix} = \begin{pmatrix} \alpha_{11} & \alpha_{12}\\ \alpha_{21} & \alpha_{22} \end{pmatrix} \begin{pmatrix} Z_1 \\ Z_2 \end{pmatrix} = A z. $$

Each $X_i \sim N(\mu_i, \sigma_i^2)$, but because both are linear combinations of the same independent r.vs, they are in general dependent.

Definition A pair of r.vs $x = (X_1, X_2)$ are said to be bivariate normally distributed iff it can be written as a linear combination $x = Az$ of independent normal r.vs $z = (Z_1, Z_2)$.

Lemma If $ x = (X_1, X_2)$ is a bivariate Gaussian, then any linear combination $a_1 X_1 + a_2 X_2$ is again a normal random variable.

Proof. Trivial, skipped to not offend anyone.

Property If $x = (X_1, X_2)$ is a bivariate Gaussian and $X_1, X_2$ are uncorrelated, then they are independent, and vice versa.
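To make the definition and lemma concrete, here is a small Python sketch (standard library only). The matrix $A$ and the helper names are illustrative assumptions. Because $z$ has identity covariance, $x = Az$ has covariance $AA^T$. Any combination $a^T x$ is normal with variance $a^T A A^T a$. With the $A$ below, $a = (1,1)$ gives $1 + 0.6 + 0.6 + 1 = 3.2$.

```python
import random
from statistics import fmean, pvariance


def sample_linear_gaussian(A, n, rng):
    """Draw n vectors x = A z, where z = (Z1, Z2) has iid N(0, 1) entries."""
    out = []
    for _ in range(n):
        z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        out.append((A[0][0] * z1 + A[0][1] * z2, A[1][0] * z1 + A[1][1] * z2))
    return out


def implied_covariance(A):
    """Cov(x) = A A^T, because Cov(z) is the identity."""
    return [[sum(A[i][k] * A[j][k] for k in range(2)) for j in range(2)] for i in range(2)]


def sample_covariance(xs):
    """(1/n) sample covariance matrix of a list of 2-vectors."""
    means = [fmean([p[i] for p in xs]) for i in range(2)]
    return [[fmean([(p[i] - means[i]) * (p[j] - means[j]) for p in xs])
             for j in range(2)] for i in range(2)]


if __name__ == "__main__":
    A = [[1.0, 0.0], [0.6, 0.8]]  # X1 = Z1, X2 = 0.6 Z1 + 0.8 Z2: both N(0, 1), corr 0.6
    xs = sample_linear_gaussian(A, 20000, random.Random(3))
    print("A A^T:", implied_covariance(A))
    print("sample:", sample_covariance(xs))
    # a = (1, 1): a^T x should be normal with variance a^T A A^T a = 3.2
    print("var(X1 + X2):", pvariance([x1 + x2 for x1, x2 in xs]))
```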

Distribution of $X_1 | X_2$

Assume $X_1, X_2$ are the same Gaussian r.vs as before but let's suppose they have positive variance and zero mean for simplicity.

If $\mathbf S$ is the subspace spanned by $X_2$, let $ X_1^{\mathbf S} = \frac{\rho \sigma_{X_1}}{\sigma_{X_2}} X_2 $ and $ X_1^{\mathbf S^\perp} = X_1 - X_1^{\mathbf S} $.

$X_1$ and $X_2$ are linear combinations of $z$, so $ X_2, X_1^{\mathbf S^\perp}$ are too. They are jointly Gaussian, uncorrelated (prove it) and independent.

The decomposition $$ X_1 = X_1^{\mathbf S} + X_1^{\mathbf S^\perp} $$ holds with $\mathbf{E}[X_1 | X_2] = \frac{\rho \sigma_{X_1}}{\sigma_{X_2}} X_2 = X_1^{\mathbf S}$

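$$ \begin{split} \mathbf{V}[X_1 | X_2] &= \mathbf{V}[X_1^{\mathbf S^\perp}] \\ &= \mathbf{E} \left[ \left( X_1 - \frac{\rho \sigma_{X_1}}{\sigma_{X_2}} X_2 \right)^2 \right] \\ &= \sigma^2_{X_1} - 2 \frac{\rho \sigma_{X_1}}{\sigma_{X_2}} \, \rho \sigma_{X_1} \sigma_{X_2} + \frac{\rho^2 \sigma^2_{X_1}}{\sigma^2_{X_2}} \, \sigma^2_{X_2} \\ &= (1 - \rho^2) \sigma^2_{X_1}. \end{split} $$

Then $$ X_1 | X_2 \sim N\left( X_1^{\mathbf S}, (1 - \rho^2) \sigma^2_{X_1} \right).$$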
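The conditional variance can be sanity-checked by simulation. The Python sketch below uses the standard library only; the helper name and parameter values are illustrative. It builds a zero-mean pair with standard deviations $\sigma_{X_1}, \sigma_{X_2}$ and $\operatorname{Corr}(X_1, X_2) = \rho$. It then forms the residual $X_1 - \frac{\rho\sigma_{X_1}}{\sigma_{X_2}} X_2$ and checks two things: the residual is uncorrelated with $X_2$, and its variance is $(1-\rho^2)\sigma^2_{X_1}$, the standard result for a bivariate normal.

```python
import random
from statistics import fmean, pvariance


def residual_variance(rho, s1, s2, n, rng):
    """Simulate a zero-mean bivariate normal (X1, X2) with sd s1, s2 and correlation rho.

    Returns (variance of R, covariance of R with X2), where
    R = X1 - (rho * s1 / s2) * X2 is the component of X1 orthogonal to X2.
    """
    resid, x2s = [], []
    for _ in range(n):
        z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        x2 = s2 * z1
        x1 = s1 * (rho * z1 + (1.0 - rho * rho) ** 0.5 * z2)
        resid.append(x1 - rho * s1 / s2 * x2)
        x2s.append(x2)
    cov = fmean([r * x for r, x in zip(resid, x2s)]) - fmean(resid) * fmean(x2s)
    return pvariance(resid), cov


if __name__ == "__main__":
    v, c = residual_variance(0.6, 2.0, 1.0, 20000, random.Random(4))
    print("Var(R) =", v, " vs (1 - rho^2) s1^2 =", (1 - 0.6 ** 2) * 2.0 ** 2)
    print("Cov(R, X2) =", c)
```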

Two univariate Gaussian random variables $X, Y$ are jointly Gaussian if the conditionals $X | Y$ and $Y|X$ are Gaussian too.


It isn't apparent how this observation answers the question. Since the product rule is practically the definition of conditional distribution, it's not special to binormal distributions. The subsequent statement "then in order..." doesn't provide any reason: exactly why must the conditional distributions also be normal? – whuber Jan 11 '16 at 00:12


@whuber, I am answering the main question: "I am wondering if there can be a case for which the joint probability of two Gaussians is not Gaussian?". So, the answer is: when the conditional is not normal. – ancillary Jan 11 '16 at 13:02

Could you complete that demonstration? Right now it's only an assertion on your part, with no proof. It's not at all evident that it's correct. It's also incomplete, because you need to establish existence: that is, you have to demonstrate it's actually *possible* for a joint distribution to have normal marginals but for which at least one conditional is non-normal. Now in fact that's trivially true, because you may freely alter each conditional distribution of a binormal on a set of measure zero without changing its marginals--but that possibility would seem to contradict your assertions. – whuber Jan 11 '16 at 13:24


Hi @whuber, I hope this helps more. Do you have any suggestions or edits to do? I wrote this very quickly as at the moment I haven't got much spare time :-) but I would value any suggestion or improvement you can make. Best – ancillary Aug 30 '16 at 11:57


(1) What are you trying to prove? (2) Because the question asks when a distribution with Gaussian marginals is *not* jointly Gaussian, I don't see how this argument is leading to anything relevant. – whuber Aug 30 '16 at 13:39


Well if X and Y are each Gaussian, but the conditional is not Gaussian, then the joint is not Gaussian. It is clear from that argument! So it is relevant – ancillary Aug 30 '16 at 13:46


Perhaps--provided you can demonstrate the existence of such a joint distribution. But that's exactly what the question is asking for. – whuber Aug 30 '16 at 13:47


If the joint doesn't exist: problem solved! Then the question "is the joint Gaussian?" has answer no. If the joint exists, then if the conditional is Gaussian, the joint will be Gaussian too. So the aim of my comments was: if you have well behaved random variables, you can have a nice interpretation of when the joint is Gaussian in terms of the conditionals. – ancillary Aug 30 '16 at 14:54

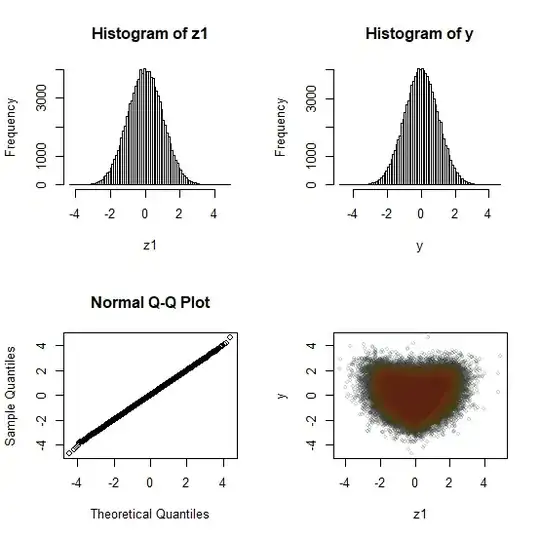

I thought it might be worth pointing out a couple of nice examples; one I've mentioned in a couple of older answers here on Cross Validated (e.g. this one) and one rather pretty one which occurred to me the other day.

Here we have two variables, $Y$ and $Z$, that have (uncorrelated) normal distributions, where $Y$ is functionally (though nonlinearly) related to $Z$. There are any number of possible examples of this type:

Start with $Z\sim N(0,1)$

Let $U = F(Z^{2})$ where $F$ is the cdf of a chi-squared variate with $1$ d.f. Note that $U$ is then standard uniform.

Let $Y = \Phi^{-1}(U)$

Then $(Y,Z)$ are marginally normal (and in this case uncorrelated) but are not jointly normal.

You can generate samples from the joint distribution of Y and Z as follows (in R):

```r
y <- qnorm(pchisq((z=rnorm(100000L))^2,1)) # if plots are too slow, try 10000L

#let's take a look at it:
par(mfrow=c(2,2))
hist(z,n=50)
hist(y,n=50)
qqnorm(y,pch=16,cex=.2,col=rgb(.2,.2,.2,.2))
plot(z,y,pch=16,cex=.2,col=rgb(.2,.2,.2,.2))
par(mfrow=c(1,1))
```

In particular, the joint distribution lies on a continuous curve with a cusp in it.

Here's another. This gives a rather nice bivariate density with heart-shaped contours:

It relies on the fact that if $Z_1$, $Z_2$, $Z_3$, and $Z_4$ are iid standard normal, then $L=Z_1Z_2+Z_3Z_4$ is Laplace (double exponential). There's a variety of ways to convert $L$ to a normal, but one is to take $Y=\Phi^{-1}(1-\exp(-|L|))$. Then $Y$ is standard normal, and (by symmetry) the relationship between $Z_i$ and $Y$ is the same for any $i$ in $\{1,2,3,4\}$; $Y$ and $Z_i$ are marginally normal but not jointly normal.

See the display produced by the code below (the R code for this may be a little slow, but I think it's worth the wait; if you want a faster version, cut the sample size down).

```r
n=100000L
z1=rnorm(n); z2=rnorm(n); z3=rnorm(n); z4=rnorm(n)
L=z1*z2+z3*z4
y = qnorm(pexp(abs(L)))
par(mfrow=c(2,2))
hist(z1,n=100)
hist(y,n=100)
qqnorm(y)
plot(z1,y,cex=.6,col=rgb(.1,.2,.3,.2))
points(z1,y,cex=.5,col=rgb(.35,.3,.0,.1)) # this helps visualize
points(z1,y,cex=.4,col=rgb(.4,.1,.1,.05)) # the contours
par(mfrow=c(1,1))
```
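The Laplace fact used in this construction can also be checked numerically. The following Python sketch (standard library only; the helper name is hypothetical) is a rough analogue of the R code, without the plots. It checks that $|L|$ behaves like an $\text{Exp}(1)$ variable: mean near $1$ and $P(|L| > 1) \approx e^{-1}$. It also checks that $Y = \Phi^{-1}(1 - e^{-|L|})$ has mean near $0$ and variance near $1$. It uses `math.expm1` to compute $1 - e^{-|L|}$ accurately when $|L|$ is small.

```python
import math
import random
from statistics import NormalDist, fmean, pvariance

STD = NormalDist()


def sample_laplace_to_normal(n, rng):
    """L = Z1*Z2 + Z3*Z4 with iid N(0, 1) Z's; Y = Phi^{-1}(1 - exp(-|L|)).

    Returns a list of (z1, |L|, y) triples.
    """
    out = []
    for _ in range(n):
        z1, z2, z3, z4 = (rng.gauss(0.0, 1.0) for _ in range(4))
        abs_l = abs(z1 * z2 + z3 * z4)
        p = -math.expm1(-abs_l)            # 1 - exp(-|L|), accurate near 0
        p = min(max(p, 1e-15), 1 - 1e-15)  # keep inv_cdf inside (0, 1)
        out.append((z1, abs_l, STD.inv_cdf(p)))
    return out


if __name__ == "__main__":
    draws = sample_laplace_to_normal(20000, random.Random(5))
    abs_ls = [a for _, a, _ in draws]
    ys = [y for _, _, y in draws]
    print("mean |L| (Exp(1) mean is 1):", fmean(abs_ls))
    print("P(|L| > 1) vs exp(-1):", fmean([a > 1.0 for a in abs_ls]), math.exp(-1.0))
    print("Y mean, variance:", fmean(ys), pvariance(ys))
```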
