Suppose we know $p(x,y)$, $p(x,z)$ and $p(y,z)$. Is the joint distribution $p(x,y,z)$ then identifiable, i.e., is there only one possible $p(x,y,z)$ that has these three marginals?

-

Related: [Is it possible to have a pair of Gaussian random variables for which the joint distribution is not Gaussian?](https://stats.stackexchange.com/q/30159/) (That pertains to the 2D joint vs 1D marginals, but the answer & the intuition is ultimately the same, plus the pictures in @Cardinal's answer are beautiful.) – gung - Reinstate Monica Feb 07 '18 at 21:27

-

@gung The relationship is somewhat remote. The subtlety behind this question is the thought that a copula shows us how to develop bivariate distributions with given marginals. But if we specify three bivariate marginals for a trivariate distribution, there must be fairly severe additional constraints on that trivariate distribution: the univariate marginals must be consistent. The question then is whether these constraints suffice to pin down the trivariate distribution. This makes it an inherently more than two dimensional question. – whuber Feb 07 '18 at 21:33

-

@whuber, I understand you to be saying that 2D marginals are more constraining than 1D marginals, which is reasonable. My point is that in both cases the answer is that marginals can't sufficiently constrain the joint distribution, & that Cardinal's answer there makes the issue very easy to see. If you think this is too much of a distraction, I can delete these comments. – gung - Reinstate Monica Feb 07 '18 at 21:36

-

@gung I'm trying to say something altogether different and it's not easy to see (unless you're very good at 3D visualizations). Do you remember the cover image of Hofstadter's *Godel, Escher, Bach*? (It's easily found by Googling; maybe I'll expand my answer to include it.) The existence of those two different solids with identical sets of projections onto the coordinate axes is fairly amazing. This captures the idea that a full set of orthogonal 2D "views" of a 3D object don't necessarily determine the object. That's the crux of the matter. – whuber Feb 07 '18 at 21:47

-

@whuber, I know it well; I use it to illustrate that joint distributions can differ with the same marginal 2D projections & thus, you don't necessarily know enough by looking at a scatterplot matrix. (I also use the randu dataset to back up this point.) At any rate, this still seems to be the point that marginals don't fully specify the joint distribution, which is your answer below & Cardinal's answer in the linked thread. As I see it, the differences are that that situation is 2D w/ 1D marginals & is specific to the multivariate normal, whereas this is 3D w/ an unspecified distribution. – gung - Reinstate Monica Feb 08 '18 at 00:58

-

@Gung allow me to try one more time. Yes, the idea that marginals do not fully determine a distribution is common to both cases. The complication in this one--the one that I believe makes it so different from the other--is that the marginals in the current situation are by no means independent: each 2D marginal determines two 1D marginals *as well as a strong relationship between those marginals.* Conceptually, then, this question might be recast as "why aren't the *dependencies* in the 2D marginals 'transitive' or 'cumulative' in the sense of determining the full 3D distribution?" – whuber Feb 08 '18 at 14:15

-

@whuber, *that* is a distinct & interesting question beyond the simple point (shared by both threads) that the marginals do not fully determine a joint distribution. Suffice it to say that you are seeing more sophistication in the post's 3 sentences than I am. At this point, should we edit the Q to bring that out? Should we delete most or all of these comments? (I might delete all but my 1st & your last, eg.) – gung - Reinstate Monica Feb 08 '18 at 14:46

3 Answers

No. Perhaps the simplest counterexample concerns the distribution of three independent $\text{Bernoulli}(1/2)$ variables $X_i$, for which all eight possible outcomes from $(0,0,0)$ through $(1,1,1)$ are equally likely. This makes all three bivariate marginal distributions uniform on $\{(0,0),(0,1),(1,0),(1,1)\}$.

Consider the random variables $(Y_1,Y_2,Y_3)$ which are uniformly distributed on the set $\{(1,0,0),(0,1,0), (0,0,1),(1,1,1)\}$. These have the same marginals as $(X_1,X_2,X_3)$.
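The claim can be checked exactly by enumeration. A minimal sketch, assuming both distributions are uniform on the listed supports; the helper `pairwise_marginals` is hypothetical, written only for this check, and uses exact fractions so the comparison is not affected by rounding:

```python
from collections import Counter
from fractions import Fraction
from itertools import combinations, product

def pairwise_marginals(support):
    """Bivariate marginals of the distribution that is uniform on `support`
    (a list of 0/1 triples), keyed by the coordinate pair (i, j)."""
    p = Fraction(1, len(support))
    marginals = {}
    for i, j in combinations(range(3), 2):
        m = Counter()
        for point in support:
            m[(point[i], point[j])] += p
        marginals[(i, j)] = dict(m)
    return marginals

# X: three independent fair bits, i.e. uniform on all eight triples.
x_support = list(product((0, 1), repeat=3))
# Y: uniform on the four triples given above.
y_support = [(1, 0, 0), (0, 1, 0), (0, 0, 1), (1, 1, 1)]

print("bivariate marginals agree:",
      pairwise_marginals(x_support) == pairwise_marginals(y_support))
# The trivariate distributions differ, e.g. at (0, 0, 0).
print("P(X=(0,0,0)) =", Fraction(x_support.count((0, 0, 0)), len(x_support)))
print("P(Y=(0,0,0)) =", Fraction(y_support.count((0, 0, 0)), len(y_support)))
```

This prints `True`, then `1/8` for $X$ and `0` for $Y$: every pair of coordinates is uniform on the four pairs in both cases, yet the joint laws disagree.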

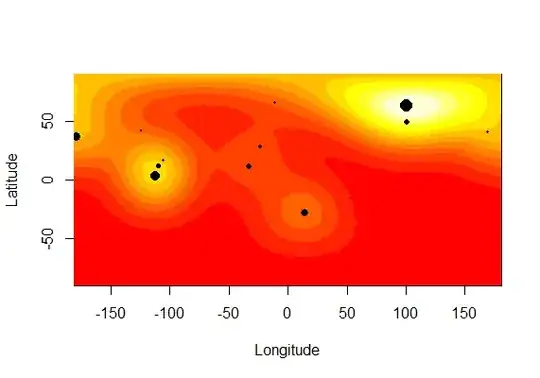

The cover of Douglas Hofstadter's *Gödel, Escher, Bach* hints at the possibilities.

The three orthogonal projections (shadows) of each of these solids onto the coordinate planes are the same, but the solids obviously differ. Although shadows aren't quite the same thing as marginal distributions, they function in a rather similar way: they restrict, but do not completely determine, the 3D object that casts them.


-

+1, of course, but if I remember correctly, the example $Y_1,Y_2,Y_3$ goes back to Bernstein and perhaps even earlier. I have used it extensively in the past to discuss the Exclusive-OR logic gate, where the events that the inputs are 1 and the output is 1 are pairwise independent events (for inputs equally likely to be 0 or 1) but they are not mutually independent events. – Dilip Sarwate Feb 07 '18 at 22:59
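The XOR observation can be verified by enumeration. A minimal sketch, assuming the two inputs are independent fair bits (so each input pair has probability $1/4$); the helper `prob` is hypothetical. Flipping the third coordinate of these four outcomes gives exactly the support $\{(1,0,0),(0,1,0),(0,0,1),(1,1,1)\}$ of $(Y_1,Y_2,Y_3)$ in the answer above.

```python
from fractions import Fraction
from itertools import combinations, product

# Two independent fair input bits; the third coordinate is their XOR.
outcomes = [(a, b, a ^ b) for a, b in product((0, 1), repeat=2)]
p = Fraction(1, len(outcomes))  # each outcome has probability 1/4

def prob(event):
    """Probability of the set of outcomes satisfying the predicate `event`."""
    return sum((p for o in outcomes if event(o)), Fraction(0))

# A_k is the event that coordinate k equals 1.
single = [prob(lambda o, k=k: o[k] == 1) for k in range(3)]
pairwise = all(
    prob(lambda o, i=i, j=j: o[i] == 1 and o[j] == 1) == single[i] * single[j]
    for i, j in combinations(range(3), 2)
)
triple = prob(lambda o: all(o))  # P(A_1 and A_2 and A_3)

print("pairwise independent:", pairwise)
print("P(all three) =", triple, "vs product", single[0] * single[1] * single[2])
```

Each event has probability $1/2$ and each pair has probability $1/4$, but the three events never occur together, so $P(A_1 \cap A_2 \cap A_3) = 0 \neq 1/8$.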

In the same spirit as whuber's answer, here is a continuous example.

Consider jointly continuous random variables $U, V, W$ with joint density function \begin{align} f_{U,V,W}(u,v,w) = \begin{cases} 2\phi(u)\phi(v)\phi(w) & ~~~~\text{if}~ u \geq 0, v\geq 0, w \geq 0,\\ & \text{or if}~ u < 0, v < 0, w \geq 0,\\ & \text{or if}~ u < 0, v\geq 0, w < 0,\\ & \text{or if}~ u \geq 0, v< 0, w < 0,\\ & \\ 0 & \text{otherwise} \end{cases}\tag{1} \end{align} where $\phi(\cdot)$ denotes the standard normal density function.

It is clear that $U, V$, and $W$ are dependent random variables. It is also clear that they are not jointly normal random variables. However, all three pairs $(U,V), (U,W), (V,W)$ are pairwise independent random variables: in fact, independent standard normal random variables (and thus pairwise jointly normal random variables). In short, $U,V,W$ are an example of pairwise independent but not mutually independent standard normal random variables. See this answer of mine for more details.
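The pairwise claim follows from a single integration. Fix $(u,v)$: exactly one of the four regions in $(1)$ applies, and it lets $w$ range over one half-line $H(u,v)$, namely $[0,\infty)$ when $u$ and $v$ have the same sign and $(-\infty,0)$ otherwise. Since $\phi$ puts mass $1/2$ on each half-line,

$$f_{U,V}(u,v) = \int_{\mathbb R} f_{U,V,W}(u,v,w)\,dw = 2\phi(u)\phi(v)\int_{H(u,v)}\phi(w)\,dw = 2\phi(u)\phi(v)\cdot\tfrac12 = \phi(u)\phi(v).$$

The same argument applies to $(U,W)$ and $(V,W)$, because fixing any two coordinates in $(1)$ again leaves exactly one admissible half-line for the third. The dependence is equally visible: writing $s(t) = 1$ for $t \geq 0$ and $s(t) = -1$ for $t < 0$, every region in $(1)$ satisfies $s(u)\,s(v)\,s(w) = 1$, so the sign of $W$ is a deterministic function of the signs of $U$ and $V$.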

In contrast, if $X,Y,Z$ are mutually independent standard normal random variables, then they are also pairwise independent random variables but their joint density is

$$f_{X,Y,Z}(u,v,w) = \phi(u)\phi(v)\phi(w), ~~u,v,w \in \mathbb R \tag{2}$$ which is not the same as the joint density in $(1)$. So, NO, we cannot deduce the trivariate joint pdf from the bivariate pdfs even in the case when the marginal univariate distributions are standard normal and the random variables are pairwise independent.
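A quick simulation makes the contrast between $(1)$ and $(2)$ concrete. A minimal sketch, assuming the following sampler for $(1)$ (a construction consistent with the density, not necessarily the one in the linked answer): draw $U, V$ as independent standard normals and set $W = |Z|$ if $U$ and $V$ have the same sign, $W = -|Z|$ otherwise, with $Z$ an independent standard normal. The function names are hypothetical, and only the standard library is used.

```python
import random

def sample_uvw(rng):
    """Draw (U, V, W) from density (1): U, V independent N(0, 1), and
    W = +|Z| when U and V have the same sign, -|Z| otherwise."""
    u, v, z = rng.gauss(0, 1), rng.gauss(0, 1), abs(rng.gauss(0, 1))
    same_sign = (u >= 0) == (v >= 0)
    return u, v, (z if same_sign else -z)

def sign(t):
    """+1 for t >= 0, -1 otherwise (matches the >= / < split in (1))."""
    return 1 if t >= 0 else -1

rng = random.Random(0)  # fixed seed for reproducibility
n = 100_000
draws = [sample_uvw(rng) for _ in range(n)]

# Pairwise: each pair of coordinates is both nonnegative about 1/4 of the
# time, as for independent standard normals.
for i, j in [(0, 1), (0, 2), (1, 2)]:
    frac = sum(1 for d in draws if d[i] >= 0 and d[j] >= 0) / n
    print(f"P(coord {i} >= 0, coord {j} >= 0) ~ {frac:.3f}  (independent: 0.250)")

# Jointly: all three nonnegative has probability 1/4 under (1), 1/8 under (2).
all_pos = sum(1 for d in draws if min(d) >= 0) / n
print(f"P(all >= 0) ~ {all_pos:.3f}  (under (2): 0.125)")
print("sign product always +1:", all(sign(u) * sign(v) * sign(w) == 1 for u, v, w in draws))
```

The pairwise frequencies match independence, while the trivariate frequency is roughly twice what $(2)$ predicts.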


You're basically asking if CAT reconstruction is possible using only images along the 3 main axes.

It is not... otherwise that's what they would do. :-) See the Radon transform for more literature.


-

I like the analogy. Two aspects are troubling, though. One is the logic: just because the Radon transform (or some other technique) uses more data than the three marginals does not logically imply it really needs all those data. Another problem is that CT scans are inherently two-dimensional: they reconstruct a solid body slice by slice. (It's true that the Radon transform is defined in three and higher dimensions.) Thus they don't really get to the heart of the matter: we already know the univariate marginals aren't enough to reconstruct a 2D distribution. – whuber Feb 07 '18 at 22:24

-

@whuber: I think you misunderstood what I was saying... and the 2D vs 3D is a red herring. I was trying to say that the inverse of the Radon transform requires the full integral for its inversion (i.e. if you literally just look at the inversion formula, you see the inversion requires an integral over all angles, not a sum over *d* angles). The CAT scan was just to help the OP see it's the same problem as CT. – user541686 Feb 07 '18 at 22:46

-

That's where the logic breaks down: it's not the same problem as the CT. Your argument sounds like an analog of "every vehicle I see on the road uses at least four wheels. Therefore ground transportation with fewer than four wheels is impossible, for if it were possible, then people would be using fewer wheels to save tire costs. If you doubt this, just look at the blueprints for a car." Incidentally, the transform as implemented in a CT scanner does *not* integrate over all angles--the measure of the set of angles it uses is zero! – whuber Feb 07 '18 at 22:54

-

@whuber: Forget the CT thing for a moment. Do you agree with the rest of the logic? – user541686 Feb 07 '18 at 22:57