I have worked on this topic for some time, so I feel I should offer an answer. I have written several answers and questions about it, and some of them may help you. Among others:

Regression and causality in econometrics

conditional and interventional expectation

linear causal model

Structural equation and causal model in economics

regression and causation

What is the relationship between minimizing prediction error versus parameter estimation error?

Difference Between Simultaneous Equation Model and Structural Equation Model

endogenous regressor and correlation

Random Sampling: Weak and Strong Exogenity

Conditional probability and causality

OLS Assumption-No correlation should be there between error term and independent variable and error term and dependent variable

Does homoscedasticity imply that the regressor variables and the errors are uncorrelated?

So, here:

Regression and Causation: A Critical Examination of Six Econometrics Textbooks - Chen and Pearl (2013)

the reply to your question

Under which assumptions a regression can be interpreted causally?

is given. However, at least in Pearl's opinion, the question is not well posed. The fact is that some points must be settled before one can "reply directly". Moreover, the language used by Pearl and his colleagues is not (yet) familiar in econometrics.

If you are looking for the econometrics book that gives the best reply, I have already done this work for you. I suggest Mostly Harmless Econometrics: An Empiricist's Companion - Angrist and Pischke (2009). However, Pearl and his colleagues do not consider this presentation exhaustive either.

So let me try to answer as concisely, but also as completely, as possible.

> Consider a data generation process $\text{D}_X(x_1, ... , x_n|\theta)$, where $\text{D}_X(\cdot)$ is a joint density function, with $n$ variables and parameter set $\theta$.
>
> It is well known that a regression of the form $x_n = f(x_1, ... , x_{n-1}|\theta)$ is estimating a conditional mean of the joint distribution, namely, $\text{E}(x_n|x_1,...,x_{n-1})$. In the specific case of a linear regression, we have something like $$ x_n = \theta_0 + \theta_1 x_1 + ... + \theta_{n-1}x_{n-1} + \epsilon $$
>
> The question is: under which assumptions of the DGP $\text{D}_X(\cdot)$ can we infer the regression (linear or not) represents a causal relationship? ... UPDATE: I am not assuming any causal structure within my DGP.

The core of the problem is precisely here. All the assumptions you invoke involve purely statistical information; in this case there is no way to reach causal conclusions, at least not in a coherent and unambiguous manner. In your reasoning the DGP is presented as a tool that carries the same information that can be encoded in the joint probability distribution and no more (the two are used as synonyms). The key point is that, as Pearl has underscored many times, causal assumptions cannot be encoded in a joint probability distribution or in any statistical concept completely attributable to it. The root of the problem is that the joint probability distribution, and conditioning rules in particular, work well for observational problems but cannot properly handle interventional ones. Now, intervention is the core of causality. Causal assumptions have to stay outside distributional aspects. Most econometrics books fall into confusion, ambiguity, or error about causality because the tools presented there do not permit a clear distinction between causal and statistical concepts.

We need something else in order to pose causal assumptions. The Structural Causal Model (SCM) is the alternative proposed by Pearl in the causal inference literature. So the DGP must be precisely the causal mechanism we are interested in, and our SCM encodes all we know or assume about the DGP. Read here for more detail about DGP and SCM in causal inference: What's the DGP in causal inference?
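To make the point concrete, here is a minimal simulation sketch. It assumes two hypothetical linear SCMs (the labels `x_causes_y` and `y_causes_x`, the coefficient 0.5, and the intervention value 2 are illustrative choices of mine, not taken from the question). Both are built to generate the same joint distribution of $(x, y)$, so the regression of $y$ on $x$ looks the same in both, yet they disagree about what happens when $x$ is set by intervention.

```python
import random

def ols_slope(xs, ys):
    """Slope from regressing ys on xs (intercept included)."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx

def draw(model, n, rng, do_x=None):
    """Sample (x, y) pairs; do_x replaces the mechanism that generates x."""
    xs, ys = [], []
    for _ in range(n):
        if model == "x_causes_y":
            x = rng.gauss(0, 1) if do_x is None else do_x
            y = 0.5 * x + 0.75 ** 0.5 * rng.gauss(0, 1)
        else:  # "y_causes_x": y is generated first and does not depend on x
            y = rng.gauss(0, 1)
            x = do_x if do_x is not None else 0.5 * y + 0.75 ** 0.5 * rng.gauss(0, 1)
        xs.append(x)
        ys.append(y)
    return xs, ys

rng = random.Random(0)
n = 20000
results = {}
for model in ("x_causes_y", "y_causes_x"):
    xs, ys = draw(model, n, rng)               # observational data
    _, ys_do = draw(model, n, rng, do_x=2.0)   # data under do(x = 2)
    results[model] = (ols_slope(xs, ys), sum(ys_do) / n)
    print(model, "slope:", round(results[model][0], 2),
          "mean of y under do(x=2):", round(results[model][1], 2))
```

In both models the regression slope should be close to 0.5, while the mean of $y$ under the intervention should be close to 1 in the first model and close to 0 in the second. The observational data alone cannot tell the two apart.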

Now, you, like most econometrics books, rightly invoke exogeneity, which is a causal concept:

> I am however uncertain about this condition [exogeneity]. It seems too weak to encompass all potential arguments against regression implying causality. Hence my question above.

I understand your perplexity well. Actually, many problems revolve around the "exogeneity condition". It is crucial, and it can be enough in a quite general sense, but it must be used properly. Follow me.

The exogeneity condition must be written on a structural-causal equation (on its error), and on nothing else. Certainly not on something like a population regression (a genuine concept, but the wrong one here). Nor on any kind of "true model/DGP" that does not have a clear causal meaning. For example, absurd concepts like the "true regression" used in some presentations will not do. Vague or ambiguous concepts like "linear model" are also used a lot, but they are not adequate here.

No statistical condition, however sophisticated, is enough if the above requirement is violated. Think of weak/strict/strong exogeneity, predeterminedness, conditions on past, present, and future, orthogonality, uncorrelatedness, independence, mean independence, conditional independence, stochastic or non-stochastic regressors, etc. None of these, nor any related concept, is enough if it refers to an error, equation, or model that has no causal meaning from the start. You need a structural-causal equation.

Now, you and some econometrics books invoke experiments, randomization, and related concepts. This is one right way. However, it can be used improperly, as in the case of the Stock and Watson textbook (if you want, I can give details). Angrist and Pischke also refer to experiments, but they introduce a structural-causal concept at the core of their reasoning as well (linear causal model, chapter 3, p. 44). Moreover, as far as I have checked, they are the only ones who introduce the concept of bad controls. This story sounds like the omitted variables problem, but here not only a correlation condition but also a causal nexus (p. 51) is invoked.

Now, there is a debate in the literature between "structuralists" and "experimentalists". In Pearl's opinion this debate is rhetorical. Briefly, for him the structural approach is more general and powerful, and the experimental one boils down to the structural one. Indeed, structural equations can be viewed as a language for encoding a set of hypothetical experiments.

That said, here is the direct answer. If the equation:

$$ x_n = \theta_0 + \theta_1 x_1 + ... + \theta_{n-1}x_{n-1} + \epsilon $$

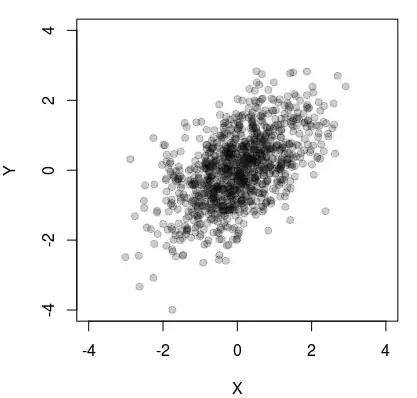

is a linear causal model like here: linear causal model

and the exogeneity condition like

$$ \text{E}[\epsilon |x_1, ... x_{n-1}] = 0$$

holds,

then a linear regression like:

$$ x_n = \beta_0 + \beta_1 x_1 + ... + \beta_{n-1}x_{n-1} + v $$

has causal meaning. Or better, the $\beta$s identify the $\theta$s, and these have a clear causal meaning (see Note 3).
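A small simulation sketch may help here. It assumes a hypothetical one-regressor structural equation with $\theta_0 = 1$ and $\theta_1 = 2$ (values of my choosing) and compares two cases: one where the structural error is independent of the regressor, and one where an unobserved variable `u` enters both the regressor and the structural error, so that exogeneity fails.

```python
import random

def ols_slope(xs, ys):
    """Slope from regressing ys on xs (intercept included)."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx

rng = random.Random(1)
n = 20000
theta0, theta1 = 1.0, 2.0  # hypothetical structural parameters

# Case A: the structural error is independent of x (exogeneity holds).
x_a = [rng.gauss(0, 1) for _ in range(n)]
y_a = [theta0 + theta1 * x + rng.gauss(0, 1) for x in x_a]

# Case B: an unobserved u enters both x and the structural error,
# so E[eps | x] is not zero (exogeneity fails).
x_b, y_b = [], []
for _ in range(n):
    u = rng.gauss(0, 1)
    x = u + rng.gauss(0, 1)
    eps = u + rng.gauss(0, 1)
    x_b.append(x)
    y_b.append(theta0 + theta1 * x + eps)

beta_a = ols_slope(x_a, y_a)  # should be close to theta1 = 2
beta_b = ols_slope(x_b, y_b)  # converges to 2 + cov(x, eps) / var(x) = 2.5
print("exogenous:", round(beta_a, 2), "endogenous:", round(beta_b, 2))
```

The structural equation is the same in both cases. Only in the first one does the regression coefficient identify the structural parameter.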

In Angrist and Pischke's opinion, models like the one above are considered old. They prefer to distinguish between causal variable(s) (usually only one) and control variables (read: Undergraduate Econometrics Instruction: Through Our Classes, Darkly - Angrist and Pischke 2017). If you select the right set of controls, you obtain a causal meaning for the causal parameter. To select the right controls, for Angrist and Pischke you have to avoid bad controls. The same idea is used in the structural approach too, but there it is well formalized in the back-door criterion [reply in: Chen and Pearl (2013)]. For some details on this criterion read here: Causal effect by back-door and front-door adjustments
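As an illustration of a bad control, consider the following sketch. The setup is hypothetical: a treatment `d` assigned independently of everything else, an unobserved factor `u` that affects the outcome `y`, and a variable `c` that is itself affected by both `d` and `u`. The true causal effect of `d` on `y` is set to 1. The two-regressor OLS formula is written out by hand to keep the example self-contained.

```python
import random

def cov(a, b):
    """Sample covariance (divisor n)."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    return sum((p - ma) * (q - mb) for p, q in zip(a, b)) / len(a)

def ols2(y, x1, x2):
    """Coefficients on x1 and x2 from regressing y on both (intercept included)."""
    s11, s22, s12 = cov(x1, x1), cov(x2, x2), cov(x1, x2)
    s1y, s2y = cov(x1, y), cov(x2, y)
    det = s11 * s22 - s12 ** 2
    return (s22 * s1y - s12 * s2y) / det, (s11 * s2y - s12 * s1y) / det

rng = random.Random(2)
n = 20000
d, c, y = [], [], []
for _ in range(n):
    di = rng.gauss(0, 1)            # treatment, independent of u
    u = rng.gauss(0, 1)             # unobserved factor
    ci = di + u + rng.gauss(0, 1)   # bad control: caused by d and by u
    yi = 1.0 * di + u + rng.gauss(0, 1)
    d.append(di)
    c.append(ci)
    y.append(yi)

effect_without_c = cov(d, y) / cov(d, d)  # should be close to 1.0
effect_with_c, _ = ols2(y, d, c)          # converges to 0.5 in this setup
print("no control:", round(effect_without_c, 2),
      "with bad control:", round(effect_with_c, 2))
```

Here adding `c` to the regression moves the coefficient on `d` away from the causal effect, even though `d` is as good as randomized. Whether a control is good or bad depends on the causal structure, not on correlations alone.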

In conclusion, all of the above says that linear regression estimated with OLS, if properly used, can be enough for the identification of causal effects. Econometrics and other fields also present other estimators, such as IV (Instrumental Variables) estimators, that have strong links with regression. They too can help with the identification of causal effects; indeed, they were designed for this. However, the story above still holds. If the problems above are not solved, the same or related problems carry over to IV and other techniques.

Note 1: I noticed from the comments that you ask something like: "Do I have to define the directionality of causation?" Yes, you must. This is a key causal assumption and a key property of structural-causal equations. On the experimental side, you have to be well aware of which variable is the treatment and which is the outcome.

Note 2:

> So essentially, the point is whether a coefficient represents a deep parameter or not, something which can never ever be deduced from (that is, it is not assured alone by) exogeneity assumptions but only from theory. Is that a fair interpretation? The answer to the question would then be "trivial" (which is ok): it can when theory tells you so. Whether such parameter can be estimated consistently or not, that is an entirely different matter. Consistency does not imply causality. In that sense, exogeneity alone is never enough.

I fear that your question and answer come from misunderstandings, which arise from a conflation of causal and purely statistical concepts. I am not surprised by that because, unfortunately, this conflation is made in many econometrics books, and it represents a tremendous mistake in the econometrics literature.

As I said above and in the comments, most of the mistakes come from an ambiguous and/or erroneous definition of the DGP (= true model). The ambiguous and/or erroneous definition of exogeneity is a consequence, and ambiguous and/or erroneous conclusions about the question follow from that. As I said in the comments, the weak points of the answers by doubled and Dimitriy V. Masterov come from these problems.

I started to face these problems years ago, beginning with the question: "Does exogeneity imply causality or not? If yes, what form of exogeneity is needed?" I consulted at least a dozen books (the most widespread ones included) and many other presentations and articles on these points. There were, obviously, many similarities among them, but finding two presentations that shared precisely the same definitions, assumptions, and conclusions was almost impossible.

From them, it sometimes seemed that exogeneity was enough for causality, sometimes that it was not, sometimes that it depended on the form of exogeneity, and sometimes nothing was said. In summary, even though something like exogeneity was used everywhere, the positions ranged from "regression never implies causality" to "regression implies causality".

I feared that there was some short circuit in the reasoning, but only when I encountered the article cited above, Chen and Pearl (2013), and the Pearl literature more generally, did I realize that my fears were well founded. I am an econometrics lover and felt disappointed when I realized this. Read here for more about that: How would econometricians answer the objections and recommendations raised by Chen and Pearl (2013)?

Now, the exogeneity condition is something like $E[\epsilon|X]=0$, but its meaning depends crucially on $\epsilon$. What is it?

The worst position is that it represents something like a "population regression error/residual" (DGP = population regression). If linearity is also imposed, this condition is useless. If not, this condition imposes a linearity restriction on the regression and no more. No causal conclusions are permitted. Read here: Regression and the CEF
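A quick numerical sketch of why a condition on the regression residual carries no causal information: in the hypothetical setup below (structural slope 2, with an unobserved `u` making the structural error correlated with the regressor), the fitted regression residual `v` is uncorrelated with the regressor by construction, while the structural error `eps` clearly is not.

```python
import random

def mean(v):
    return sum(v) / len(v)

def cov(a, b):
    """Sample covariance (divisor n)."""
    ma, mb = mean(a), mean(b)
    return sum((p - ma) * (q - mb) for p, q in zip(a, b)) / len(a)

rng = random.Random(3)
n = 20000
x, eps, y = [], [], []
for _ in range(n):
    u = rng.gauss(0, 1)           # unobserved common factor
    xi = u + rng.gauss(0, 1)
    ei = u + rng.gauss(0, 1)      # structural error, correlated with x through u
    x.append(xi)
    eps.append(ei)
    y.append(1.0 + 2.0 * xi + ei) # hypothetical structural equation

# Fit the regression of y on x and build its residual v.
beta1 = cov(x, y) / cov(x, x)
beta0 = mean(y) - beta1 * mean(x)
v = [yi - beta0 - beta1 * xi for xi, yi in zip(x, y)]

cov_x_eps = cov(x, eps)  # close to 1: the structural error is endogenous
cov_x_v = cov(x, v)      # zero up to floating-point error, by construction
print("cov(x, eps):", round(cov_x_eps, 2), "cov(x, v):", round(cov_x_v, 10))
```

An orthogonality condition that holds automatically for the regression residual cannot distinguish this endogenous case from an exogenous one. The condition only bites when it is stated on the structural error.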

Another position, still the most widespread, is that $\epsilon$ is something like a "true error", but the ambiguity of the DGP/true model is shared there too. Here lies the fog: in many cases almost nothing is said, but the usual common ground is that it is a "statistical model" or simply a "model". From that, exogeneity implies unbiasedness/consistency and no more. No causal conclusion, as you said, can be deduced. Causal conclusions then come from "theory" (economic theory), as you and some books suggest. In this situation causal conclusions can arrive only at the end of the story, and they are founded on something like a foggy "expert judgement" and no more. This seems to me an unsustainable position for econometric theory.

This situation is inevitable if, as you (implicitly) said, exogeneity stays on the statistical side and economic theory (or theory from other fields) on another.

We must change perspective. Exogeneity is, historically too, a causal concept and, as I said above, it must be a causal assumption and not just a statistical one. Economic theory is also expressed in terms of exogeneity; they go together. In other words, the assumptions that you are looking for, those that permit causal conclusions from a regression, cannot stay in the regression itself. These assumptions must stay outside, in a structural causal model. You need two objects, not just one. The structural causal model stands for the theoretical-causal assumptions; exogeneity is among them, and it is needed for identification. Regression stands for estimation (under other, purely statistical, assumptions).

Sometimes the econometric literature does not distinguish clearly between regression and true model either; sometimes the distinction is made, but the role of the true model (or DGP) is not clear. This is where the conflation between causal and statistical assumptions comes from, first of all through an ambiguous role for exogeneity.

The exogeneity condition must be written on the structural causal error. Formally, in Pearl's language (we need it for a formal statement), the exogeneity condition can be written as:

$E[\epsilon |do(X)]=0$, which implies

$E[Y|do(X)]=E[Y|X]$ (identifiability condition).

In this sense exogeneity implies causality.

Read also here: Random Sampling: Weak and Strong Exogenity

Moreover, some of the points above are treated in this article: TRYGVE HAAVELMO AND THE EMERGENCE OF CAUSAL CALCULUS - Pearl (2015).

For some takeaways about causality in linear models read here: Linear Models: A Useful “Microscope” for Causal Analysis - Pearl (2013)

For an accessible presentation of Pearl literature read this book: JUDEA PEARL, MADELYN GLYMOUR, NICHOLAS P. JEWELL - CAUSAL INFERENCE IN STATISTICS: A PRIMER

http://bayes.cs.ucla.edu/PRIMER/

Note 3: More precisely, it must be said that the $\theta$s surely represent the so-called direct causal effects, but without additional assumptions it is not possible to say whether they represent the total causal effects too. Obviously, if there is confusion about causality in the first place, it is not possible to address this second-round distinction.
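The distinction in Note 3 can be sketched with a hypothetical two-equation linear causal model in which $x_1$ affects $y$ both directly (coefficient 1) and through $x_2$ (path $0.5 \times 2$), so that the direct effect is 1 and the total effect is 2. All numbers, and the assumption that every error is independent of everything else, are illustrative choices of mine.

```python
import random

def cov(a, b):
    """Sample covariance (divisor n)."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    return sum((p - ma) * (q - mb) for p, q in zip(a, b)) / len(a)

def ols2(y, x1, x2):
    """Coefficients on x1 and x2 from regressing y on both (intercept included)."""
    s11, s22, s12 = cov(x1, x1), cov(x2, x2), cov(x1, x2)
    s1y, s2y = cov(x1, y), cov(x2, y)
    det = s11 * s22 - s12 ** 2
    return (s22 * s1y - s12 * s2y) / det, (s11 * s2y - s12 * s1y) / det

rng = random.Random(4)
n = 20000
x1, x2, y = [], [], []
for _ in range(n):
    a = rng.gauss(0, 1)
    b = 0.5 * a + rng.gauss(0, 1)            # x1 -> x2
    c = 1.0 * a + 2.0 * b + rng.gauss(0, 1)  # x1 -> y and x2 -> y
    x1.append(a)
    x2.append(b)
    y.append(c)

direct_x1, direct_x2 = ols2(y, x1, x2)  # structural coefficients: 1.0 and 2.0
total_x1 = cov(x1, y) / cov(x1, x1)     # 1.0 + 0.5 * 2.0 = 2.0
print("direct effect of x1:", round(direct_x1, 2),
      "total effect of x1:", round(total_x1, 2))
```

In this sketch the regression on both variables recovers the direct effect of $x_1$, and the regression on $x_1$ alone recovers its total effect. Knowing which regression answers which question requires the causal structure, which is exactly the additional assumption Note 3 refers to.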