In the year 2000, Judea Pearl published Causality. What controversies surround this work? What are its major criticisms?

There's an [informative discussion](http://andrewgelman.com/2009/07/pearls_and_gelm/) in the archives of Andrew Gelman's blog, including contributions from Pearl and other experts. – guest Apr 14 '12 at 04:01

Gelman discusses Pearl's *Causality*, in addition to S. L. Morgan and C. Winship's *Counterfactuals and Causal Models* and S. Sloman's *Causal Models*, in a 2011 [review essay](http://www.stat.columbia.edu/~gelman/research/published/causalreview4.pdf) in the American Journal of Sociology. He is generally very supportive of Pearl's contributions, especially Pearl's formalization of causal models in terms of interventions (do-calculus). However, he remains concerned that state-of-the-art causal theory may still invite oversimplified causal models and, subsequently, false causal inferences from observational data. – jthetzel Aug 07 '12 at 15:06

6 Answers

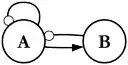

Some authors dislike Pearl's focus on the directed acyclic graph (DAG) as the way in which to view causality. Pearl essentially argues that any causal system can be considered as a non-parametric structural equation model (NPSEM), in which the value of each node is taken as a function of its parents and some individual error term; the error terms between different nodes may in general be correlated, to represent common causes.

Cartwright's book Hunting Causes and Using Them, for example, gives an example involving a car engine, which she claims cannot be modelled in the NPSEM framework. Pearl disputes this in his review of Cartwright's book.

Others caution that the use of DAGs can be misleading, in that the arrows lend an apparent authority to a chosen model as having causal implications, when this may not be the case at all. See Dawid's Beware of the DAG. For example, the three DAGs $A \rightarrow B \rightarrow C$, $A \leftarrow B \rightarrow C$ and $A \leftarrow B \leftarrow C$ all induce the same probabilistic model under Pearl's d-separation criterion: the only constraint each imposes is that $A$ is independent of $C$ given $B$. They are therefore indistinguishable on the basis of observational data alone.

However, they have quite different causal interpretations, so if we wish to learn about the causal relationships here we need more than observational data, whether that be the results of interventional experiments, prior information about the system, or something else.
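The observational equivalence described above can be checked numerically. The following is a minimal sketch, assuming linear-Gaussian mechanisms with arbitrarily chosen coefficients; the function names `simulate_chain`, `simulate_fork` and `partial_corr` are hypothetical and do not come from any of the cited works. In both the chain $A \rightarrow B \rightarrow C$ and the fork $A \leftarrow B \rightarrow C$, $A$ and $C$ are marginally correlated but approximately uncorrelated once $B$ is partialled out.

```python
import numpy as np

def partial_corr(a, c, b):
    """Correlation of a and c after linearly regressing b out of both."""
    resid_a = a - np.polyval(np.polyfit(b, a, 1), b)
    resid_c = c - np.polyval(np.polyfit(b, c, 1), b)
    return np.corrcoef(resid_a, resid_c)[0, 1]

def simulate_chain(n, rng):
    """A -> B -> C with assumed coefficients of 0.8."""
    a = rng.normal(size=n)
    b = 0.8 * a + rng.normal(size=n)
    c = 0.8 * b + rng.normal(size=n)
    return a, b, c

def simulate_fork(n, rng):
    """A <- B -> C with assumed coefficients of 0.8."""
    b = rng.normal(size=n)
    a = 0.8 * b + rng.normal(size=n)
    c = 0.8 * b + rng.normal(size=n)
    return a, b, c

rng = np.random.default_rng(0)
results = {}
for name, simulate in [("chain", simulate_chain), ("fork", simulate_fork)]:
    a, b, c = simulate(100_000, rng)
    marginal = np.corrcoef(a, c)[0, 1]
    conditional = partial_corr(a, c, b)
    results[name] = (marginal, conditional)
    print(f"{name}: corr(A, C) = {marginal:.3f}, corr(A, C | B) = {conditional:.3f}")
```

Both structures show the same conditional-independence pattern, so this kind of check cannot tell them apart; only an intervention (or outside knowledge) could.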

To be fair - far from being unaware of the three DAGs with the same probabilistic model, Pearl has been one of the chief promoters of the distinction between merely statistical-probabilistic-associational models and fully causal models. See for example Section 2 of ftp://ftp.cs.ucla.edu/pub/stat_ser/r354-corrected-reprint.pdf – Paul Dec 11 '17 at 18:02

@Paul yes indeed; I was just reporting other people's misgivings about using DAGs. I have no such misgivings - please edit if you think the reply is unfair! – rje42 Dec 13 '17 at 09:16

It just sounds like the message was totally lost in translation. Which is not necessarily the fault of your answer, if you're just reporting the criticisms people have made. The _whole point_ of Pearl's work is that different causal models can generate the same probabilistic model and hence the same-looking data. So it's not enough to have a probabilistic model; you have to base your analysis and causal interpretation on the full DAG to get reliable results. If you're just reporting what people say, I don't think your answer needs editing; these comments are sufficient clarification. – Paul Dec 13 '17 at 16:58

I think Pearl has all the caveats you've mentioned in his book, actually. In fact, Theorem 1.2.8 in *Causality: Models, Reasoning, and Inference, 2nd Ed.* is all about observationally equivalent DAGs. A DAG is just part of the model, and is no more authoritative or less authoritative than any other model. – Adrian Keister Oct 29 '21 at 14:56

I think this framework has a lot of trouble with general equilibrium effects or violations of the Stable Unit Treatment Value Assumption (SUTVA). In that case, the "untreated" observations no longer provide the desired counterfactual in a meaningful way. Massive job training programs that shift the entire wage distribution are one example. The counterfactual may not even be well-defined in some cases. In Counterfactuals and Causal Models, Morgan and Winship give the example of the claim that the 2000 election would have gone in favor of Al Gore if felons and ex-felons had been allowed to vote. They point out that the counterfactual world would have very different candidates and issues, so that you cannot characterize the alternative causal state. The ceteris paribus effect would not be the policy-relevant parameter here.

It sounds like you're saying that some counterfactuals are not reasonable because it's not reasonable to assume that only one thing changes? In the felon example, the simple fact of felons being able to vote would imply many other differences between that potential world and our actual world, so it's not reasonable to change "just one thing"? – Paul Dec 11 '17 at 17:59

Thanks. I think this is a fairly profound and underappreciated point about counterfactuals. People usually assume they can do whatever they want. But just like the real world, I guess the space of valid counterfactuals can have "multicollinearity". – Paul Dec 11 '17 at 23:03

Counterfactual formal causal reasoning of the form motivated by Pearl is ill-suited to the analysis of complex dynamic causal systems.<sup>1</sup>

Disclaimer 1: I am a fan of Pearl's framework, and had the privilege of being taught counterfactual formal causal inference by two of his early exponents (Hernán and Robins).

Disclaimer 2: Levins was my mentor when I was a doctoral student, and I have published using his methods.

The counterfactual theory of causality, and the counterfactual formal causal inference/reasoning built atop it, are profoundly useful for reasoning through both the strengths and weaknesses of causal inference based on specific combinations of study design and analyses. However, to my mind, the counterfactual theory is a theory of terminal causal narratives: $A$, and $L$, and $U$ (and maybe $V$ or $E$) happened, and then they caused $\pmb{Y}$ to happen (or not). The counterfactual theory of causality does not appear to describe or infer the behavior of complex causal systems (i.e., networks in which every variable is either directly or indirectly the cause of every other variable at some future time), and is thus not a theory of cyclic causal narratives.

As a counterexample of a formal causal reasoning system I would raise Levins' qualitative loop analysis, which, like Pearl's work with DAGs, also hearkens back to Wright's path analysis but employs signed digraphs in a different causal formalism (one obvious distinction is that the signed digraphs of qualitative loop analysis are cyclic, not acyclic) to describe the behavior of such causal systems under different kinds of perturbation.

The questions posed and answered by Levins' method (and subsequent elaborations on it) include:

- How does the level of each variable in a complex system respond to press perturbation at one or more variables in the system?

- How does the life expectancy/turnover of each variable in a complex system respond to press perturbation at one or more variables in the system?

- Does variance induced by system perturbation (at specific variables) tend to diffuse across the system, or sink into very few variables in the system?

- Where do (Lyapunov) stability and instability emerge in the system?

- Where does system behavior depend on either ontological or epistemic uncertainty regarding the existence or magnitude of specific direct causal relationships comprising the system?

- **What are the signs of expected bivariate correlations (or correspondences) between any variable pairs given a press perturbation at one or more variables in the system?**

(Because most of Levins' loop analysis is a purely deductive method—although, see Dambacher's extension—only the bolded question is directly statistical.)
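To illustrate the first question in the list above, here is a minimal sketch. It assumes the standard result that, for a system at a stable equilibrium with community (Jacobian) matrix $A$, the direction of change in equilibrium levels under a sustained (press) input is given by the signs of $-A^{-1}$. The two-variable predator-prey matrix and the function name `press_response_signs` are illustrative assumptions, not taken from Levins' or Dambacher's papers, and a numerically specified matrix stands in here for the purely qualitative (sign-only) analysis those authors perform.

```python
import numpy as np

def press_response_signs(community_matrix, tol=1e-9):
    """Sign of the equilibrium response of variable i (row) to a
    sustained positive input at variable j (column), via -inverse(A)."""
    A = np.asarray(community_matrix, dtype=float)
    # The result is only meaningful at an asymptotically stable equilibrium.
    if np.any(np.linalg.eigvals(A).real >= 0):
        raise ValueError("equilibrium is not asymptotically stable")
    response = -np.linalg.inv(A)
    signs = np.sign(response)
    signs[np.abs(response) < tol] = 0  # treat numerical noise as zero
    return signs.astype(int)

# Entry [i, j] is the direct effect of variable j on variable i.
# Note the feedback cycle prey -> predator -> prey, which a DAG cannot hold.
A = [
    [-1.0, -1.0],  # prey: self-damped, reduced by the predator
    [1.0, 0.0],    # predator: increased by the prey, no self-effect
]
signs = press_response_signs(A)
print(signs)
```

In this assumed system, a press on the prey (first column) leaves the prey's equilibrium level unchanged and raises the predator's, while a press on the predator (second column) lowers the prey and raises the predator.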

These are different questions from the ones posed and answered in the counterfactual formal causal inference championed by Pearl. I have even had difficulty finding examples of counterfactual formal causal inference applied in the context of stochastic processes and autoregressive models (e.g., dynamic models including $Y_{t}$ as a function of $Y_{<t}$), although this may be due more to my lack of familiarity with the intersections of Bayesian probabilistic causal graphs and Pearl's work than to a specific deficiency in the latter.

Aside: Sugihara's empirical dynamic modeling (see the tutorial by Chang et al.), an elaboration on state space reconstruction, likewise provides an alternative perspective to counterfactual formal causal reasoning, also from the world of complex causal systems.

<sup>1</sup> A point similar to something Spirtes pointed out quite a while ago.

References

Chang, C.-W., Ushio, M., & Hsieh, C. (2017). Empirical Dynamic Modeling for Beginners. Ecological Research, 32(6), 785–796.

Dambacher, J. M., Li, H. W., & Rossignol, P. A. (2003). Qualitative predictions in model ecosystems. Ecological Modelling, 161, 79–93.

Dambacher, J. M., Levins, R., & Rossignol, P. A. (2005). Life expectancy change in perturbed communities: Derivation and qualitative analysis. Mathematical Biosciences, 197, 1–14.

Levins, R. (1974). The Qualitative Analysis of Partially Specified Systems. Annals of the New York Academy of Sciences, 231, 123–138.

Puccia, C. J., & Levins, R. (1986). Qualitative Modeling of Complex Systems: An Introduction to Loop Analysis and Time Averaging. Harvard University Press.

Spirtes, P. (1995). Directed Cyclic Graphical Representations of Feedback. In P. Besnard & S. Hanks (Eds.), Proceedings of the Eleventh Conference on Uncertainty in Artificial Intelligence. Morgan Kaufmann Publishers, Inc.

Sugihara, G., May, R., Ye, H., Hsieh, C., Deyle, E., Fogarty, M., & Munch, S. (2012). Detecting Causality in Complex Ecosystems. Science, 338, 496–500.

Wright, S. (1934). The Method of Path Coefficients. The Annals of Mathematical Statistics, 5(3), 161–215.

Wondering if the Spirtes quote was before or after some of the newer software to come out implementing Pearl's notions. – Adrian Keister Oct 29 '21 at 15:43

Yes, I think there have been some software releases since then that can automate some aspects of Pearl's framework. The Python `dowhy` library comes to mind, and I'm sure there are others. Perhaps this criticism of Spirtes is out-of-date? – Adrian Keister Oct 29 '21 at 15:50

@AdrianKeister I do not see how your comments relate to the substance of my answer, which is not about applying Pearl's framework, but about recognizing how ill-suited it is for causal models represented by Directed *Cyclic* Graphs (as opposed to DAGs). Can you help me better understand? – Alexis Oct 29 '21 at 18:24

My comments weren't about the substance of your answer, merely about the Spirtes quote. – Adrian Keister Oct 29 '21 at 18:49

@AdrianKeister Spirtes is not quoted. He made a similar point to the point I opened with: i.e. counterfactual formal causal modeling, DAGs etc, cannot handle feedback. – Alexis Oct 29 '21 at 19:05

Fair enough: you didn't quote him, but your footnote indicated that Spirtes had said something to that effect. Your initial comment was not about feedback, but about complex dynamical systems. As I can certainly imagine complex dynamical systems that do not have any feedback, these two topics, while certainly related, are distinct. – Adrian Keister Oct 29 '21 at 19:27

@AdrianKeister I defined complex causal system here: "However, the counterfactual theory of causality does not appear to describe or infer the behavior of complex causal systems (i.e. networks in which every variable is either directly or indirectly the cause of every other variable at some future time), and is thus not a theory of ***cyclic* causal narratives**." So yes, my initial comment was precisely about feedback. – Alexis Oct 29 '21 at 19:33

Well, you can define your terms your way, I suppose. It tends to hamper communication if you use a non-standard definition. I've never seen complex dynamical systems defined that way. Would Spirtes agree with your definition? – Adrian Keister Oct 29 '21 at 19:38

@AdrianKeister Spirtes was writing about feedback. More important to the substance of my answer: I feel confident that Levins (and probably Sugihara) would feel comfortable with my definition of complex causal system. – Alexis Oct 29 '21 at 19:40

The most important criticism of Pearl's system is, from my perspective, that it has not yielded any practical, empirical advances anywhere it has been used. Given how long it has been around, there's no reason to think it will ever be a practical tool. This indicates that it can be used for some theoretical and perhaps didactic purposes, but a practical researcher will gain little from studying it.

Why is it ridiculous? If Pearl promoted his system simply as some kind of conceptual, philosophical tool for understanding what causality is, I wouldn't have a problem with it. But he constantly talks it up as a "revolutionary" practical tool for researchers to use, which is simply bullshit. For example, in his latest book Pearl says that he "would not be surprised" if the front-door method "eventually becomes a serious competitor to randomized controlled trials", which is a strong claim given that there's not a single example of the method being used to solve any real problem, ever. – Matt May 22 '19 at 08:27

Pearl's work has been cited tens of thousands of times. The front-door method was famously used to support the link between smoking and cancer in defiance of Ronald Fisher's testimony! – Neil G May 22 '19 at 08:47

What does Pearl's citation count have to do with anything? My criticism is that the practical benefits that he has promised for decades have not materialized. Pearl came up with the front-door criterion decades after Fisher had died and the cancer-and-smoking controversy had settled down. How could the criterion have been used against Fisher? – Matt May 22 '19 at 09:09

-1. Pearl's framework has been used in the real world to solve real-world problems. The smoking and lung cancer link is one. I have a colleague who has used it, and had quite a complicated DAG to work with. To claim, therefore, that Pearl's framework "has not yielded any practical, empirical advances anywhere it has been used" just isn't true. It has been used. – Adrian Keister Oct 29 '21 at 15:01

@Adrian Keister: What are those "real-world problems" that Pearl's framework solved? Smoking causing cancer doesn't apply because that became the consensus view long before Pearl's work on causality. – Matt Jan 16 '22 at 22:59

Having read the answers and comments, I see an opportunity to add something.

The accepted answer, by rje42, focuses on DAGs and non-parametric systems, two strongly related concepts. The capabilities and limitations of these tools can be debated; however, linear SEMs are also part of the theory presented in Pearl's book (2000 or 2009). Pearl underscores the limitations of linear systems, but they are part of the theory presented.

Paul's comment seems crucial to me: “The whole point of Pearl's work is that different causal models can generate the same probabilistic model and hence the same-looking data. So it's not enough to have a probabilistic model, you have to base your analysis and causal interpretation on the full DAG to get reliable results.” Let me say that the last sentence can be rewritten as: it's not enough to have a probabilistic model; you need a structural causal model (SCM). Pearl asks us to keep in mind a demarcation line between statistical and structural/causal concepts. The former alone can never be enough for proper causal inference; we need the latter too. This is the root of most problems. In my opinion, this clear distinction, and Pearl's defense of it, is his most important merit.

Moreover, Pearl suggests some tools, such as DAGs, d-separation, the do-operator, and the backdoor and front-door criteria, among others.

All of them are important and express his theory, but all come from the demarcation line mentioned above, and all help us to work in accordance with it. Put differently, arguing the pros and cons of one specific tool is not so relevant; what matters is the necessity of the demarcation line. If the demarcation line disappears, all of Pearl's theory falls or, at best, adds just a bit of language to what we already have. However, this seems to me an unsustainable position. If some authors today still seriously argue so, please give me a reference.
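As a concrete illustration of one of these tools, the backdoor adjustment formula $P(y \mid do(x)) = \sum_z P(y \mid x, z) P(z)$ can be sketched on simulated data. This is a minimal illustration under assumed settings (a single binary confounder `z`, a binary treatment `x`, a true effect fixed at 1.0, and hypothetical helper names); it is not an example taken from Pearl's book.

```python
import numpy as np

rng = np.random.default_rng(42)
n = 200_000
TRUE_EFFECT = 1.0  # assumed causal effect of x on y

# Assumed structure: z -> x, z -> y, x -> y (z satisfies the backdoor criterion).
z = rng.binomial(1, 0.5, size=n)
x = rng.binomial(1, np.where(z == 1, 0.8, 0.2))
y = TRUE_EFFECT * x + 2.0 * z + rng.normal(size=n)

def naive_difference(x, y):
    """Purely statistical contrast: E[y | x=1] - E[y | x=0]."""
    return y[x == 1].mean() - y[x == 0].mean()

def backdoor_adjusted(x, y, z):
    """Average the within-stratum contrasts, weighted by P(z)."""
    effect = 0.0
    for value in np.unique(z):
        stratum = z == value
        contrast = y[stratum & (x == 1)].mean() - y[stratum & (x == 0)].mean()
        effect += contrast * stratum.mean()
    return effect

naive = naive_difference(x, y)
adjusted = backdoor_adjusted(x, y, z)
print(f"naive contrast:    {naive:.2f}")
print(f"backdoor-adjusted: {adjusted:.2f} (assumed true effect {TRUE_EFFECT})")
```

The same data give two different answers; which one deserves a causal reading depends on the assumed structure, not on the probabilistic model alone, which is the demarcation line at work.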

I'm not yet expert enough to challenge the capability of all these tools, but they seem clear to me and, so far, they seem to work. I come from the econometric side, and about the tools used there I think the opposite. Econometrics is a widespread, useful, practical, empirical, challenging, and highly regarded discipline, and causality is one of its most interesting topics. In econometrics, some causal issues can be fruitfully addressed with RCT tools. Unfortunately, however, the econometrics literature has too often addressed causal problems improperly. In short, this happened because of theoretical flaws. The full extent of the problem emerges in:

Regression and Causation: A Critical Examination of Six Econometrics Textbooks - Chen and Pearl (2013) and

Trygve Haavelmo and the Emergence of causal calculus - Pearl; Econometric Theory (2015)

Some of these points are addressed in the following related discussions:

Under which assumptions a regression can be interpreted causally?

Difference Between Simultaneous Equation Model and Structural Equation Model

I don't know whether the "equilibrium problems" invoked by Dimitriy V. Masterov really cannot be addressed properly with Pearl's SCMs, but from here:

Eight Myths About Causality and Structural Equation Models - Handbook of Causal Analysis for Social Research, Springer (2013)

it emerges that some frequently invoked limitations are false.

Finally, Matt's argument seems irrelevant to me, though not because of the "citation evidence" argued by Neil G. In short, Matt's point is

“Pearl's theory can be good for itself but not for the purpose of practice”.

This seems to me a logically wrong argument. The fact is that Pearl presented a theory, so it suffices to recall the motto “nothing is more practical and useful than a good theory”. Obviously the examples in the book are as simple as possible; good didactic practice demands this. Making things more complicated is always possible and not difficult; proper simplifications, on the other hand, are usually hard to make. The ability to handle simple problems, or to render problems simpler, seems to me strong evidence in favor of Pearl's theory.

That said, the fact that no real problems have been solved by Pearl's theory (if that is true) is neither his responsibility nor the responsibility of his theory. He himself complains that professors and researchers haven't spent enough time on his theory and tools (and on causal inference in general). That neglect could be justified only by a clear theoretical flaw in Pearl's theory and the clear superiority of another one. Curiously, the opposite is probably true; note that Pearl has argued that RCTs boil down to SCMs. The problem that Matt underscores is the responsibility of professors and researchers.

I think that in the future Pearl's Theory will become common in econometrics too.

*"Matter of fact is that Pearl presented a Theory."* What theorems did Pearl present? Is a 'do-operator' a theory? Does this theory make any change to the results when a researcher tests a physical theory at CERN or when a pharmaceutical company analyses the effectiveness of a vaccine? Or does it just allow them to draw pretty diagrams like managers do? – Sextus Empiricus Dec 10 '20 at 08:39

Everything presented in Pearl (2009) represents a theory, and the do-operator is relevant to it. The book is full of theorems, some of which refer to Pearl's other works. Graphs are only a language; the concepts stand behind them. I am not expert enough in the physics or pharmaceutical literature or practice to help you find and discuss examples. – markowitz Dec 10 '20 at 09:15

But what sort of *theory* is it? A reference to an entire book does not help much in conveying what this theory is and is an alarm bell that there is no theory after all, and instead just a lot of mumbo jumbo. Is there any theory from Pearl that can be described by a few sentences or by a single formula? And is the theory a *scientific* theory, which means that it can be tested experimentally or proven mathematically? Or is it just a proposal for a different language, a set of definitions, expressions and methods? Or is it more like philosophy? – Sextus Empiricus Dec 10 '20 at 09:48

I am not here to defend Pearl's work; he does not need my help. Pearl is an eminent scientist, and his book has been considered one of the most important of the last few decades. You said “A reference to an entire book does not help much in conveying what this theory is and is an alarm bell that there is no theory after all, and instead just a lot of mumbo jumbo”. You are wrong. Books like Pearl (2009) are written precisely to present a theory, but I cannot summarize it for you here. – markowitz Dec 10 '20 at 10:37

However, there actually is one “alarm bell” here: your tone. Worse, in this discussion https://stats.stackexchange.com/questions/499455/monty-hall-problem-and-causality you confess: “I haven't read any of Pearl's books”, yet you feel the need to intervene here and speak against his main contribution. Forgive my frankness, but your words reveal an unjustified dislike of Pearl and/or some ignorance. – markowitz Dec 10 '20 at 10:37

*“I haven't read...”* Before taking the effort to buy a book and read it, I often spend some time reading related articles or open course materials. Judea Pearl wrote for instance a very nice piece [*"Causal Inference in Statistics: An Overview"*](https://projecteuclid.org/euclid.ssu/1255440554) and a lecture note like Ricardo Silva's *"Causality"* (from [2006 Advanced Tutorial Lecture Series](http://mlg.eng.cam.ac.uk/tutorials/06/)) explains do-"operators" well. I find the diagrams are a nice graphical tool, but it's also a language that can be used as a tool for argumentum verbosum... – Sextus Empiricus Dec 10 '20 at 11:42

... an example is in the [comment](https://stats.stackexchange.com/questions/26437/499751?#comment765111_409548) under Matt's answer, which uses rhetoric like 'this answer is wrong because Pearl's work has been cited a lot'. I do not know what it is about this 'theory', but I see it discussed explicitly very little and more often in an avoiding style like "it's all in his book". I am fine with the proponents of the methods, they are nice, but calling it a theory is too much of a stretch for me. It is a bit similar to Marxist philosophy and Freudian psychology; they are not really theories. – Sextus Empiricus Dec 10 '20 at 11:51

Instead of saying 'Pearl presented a theory', why not *show* and *demonstrate* the theory? There's *talk* about 'the theory', but like Matt says no *action*. Is it an *essential* tool that can't be missed; without which people would make mistakes? What is the actual *practical* difference of using the 'theory' and against which other 'theories' can be contrasted? Did we use wrong theories of causality before? The lack of practical demonstration in this Q&A demonstrates a criticism of Pearl's theory. Also hardly ever do you see a question being answered with DAG's unless it is about DAG's. – Sextus Empiricus Dec 10 '20 at 12:07

I read carefully what you wrote, as always, but it is not easy for me to grasp your point of view fully, and comments are not for long discussions. You can add your own answer if you think it can help. However, I suspect that you did not read carefully everything I wrote: my replies to several of the questions and doubts you raised are given above. – markowitz Dec 10 '20 at 17:17

1/3 @SextusEmpiricus "But what sort of theory is it? A reference to an entire book does not help much in conveying what this theory is and is an alarm bell that there is no theory after all, and instead just a lot of mumbo jumbo. Is there any theory from Pearl that can be described by a few sentences or by a single formula?" It is not mumbo jumbo. It is specifically a "causal calculus" built on top of the *counterfactual theory of causation* (i.e., what we mean by saying "$A$ caused $Y$" is "if $A$ had not occurred, then $Y$ would not have occurred"). – Alexis Mar 02 '21 at 21:51

2/3 Elaborations include probabilistic causation, and a rigorous proof of where association and causation do and do not line up, and when and how causal dependence/independence align or not with statistical dependence/independence. The places where the "backdoor criterion", which is a super-theory of "simple causal confounding", selection bias (and places where these can blur together), and differential and nondifferential measurement error cleave statistical association from *causal* association are well defined. – Alexis Mar 02 '21 at 21:51

3/3 Critical insights about analytic methods (e.g., instrumental variables, inverse probability weighting, etc.) and their fragility or robustness under specific forms of causal assumptions, and the way specific assumptions ID specific concerns about the validity of a particular inference, round out his work. Statisticians generally have *nothing* comparable to the edifice built atop counterfactual formal causal reasoning, and I think you do yourself a disservice by not making your way through one of the CFCR textbooks. $.02 <3 – Alexis Mar 02 '21 at 21:51

Nothing is as practical as a good theory but it is also the case that a tree is known by its fruit. Just because Pearl presented a theory, it doesn't follow that it's good. If it doesn't bear fruit (in terms of empirical advances, see my original answer), there's a strong suspicion that it's not a good theory. My position is similar to that of [Imbens (2019)](https://arxiv.org/pdf/1907.07271v1.pdf) who argues that DAGs haven't been adopted because there are no concrete, empirical examples to show their benefits. He aptly says DAGs "appear to be a set of solutions in search of problems." – Matt Jan 16 '22 at 22:48

I also disagree, on principle, with the argument that it's not Pearl's responsibility to show that his theory is any good in practical settings. Many theoreticians and methodologists would be well-advised to also become experts in some "real life" research topic. If Pearl had such experience, he would probably have a more realistic view of the benefits and limitations of his theory. He might also have something more than toy models to show for it after decades of work. – Matt Jan 16 '22 at 22:50

@Matt, I agree that "a tree is known by its fruit". However, I repeat that it is not necessarily Pearl who has to produce that fruit. In any case, thank you for the article. I tried to set out my related points here (https://stats.stackexchange.com/questions/563346/potential-outcome-po-vs-directed-acyclic-graph-dag). You may find it interesting. – markowitz Feb 06 '22 at 19:41

The synthesis behind causal reasoning is access to different aspects of reality/model using inferences from data. The paradoxical features in a model are avoided through formalisation in mathematical language. Informally, these counterintuitive features arise in observing a model, as opposed to intervening through do-calculus.

The question of the openness of algorithms in the foundations of mathematics and logic is debated. One of the central points is that formalisations harness infinite power in practice but turn these consistent procedures closed. In principle, when you do, you close the system, unlike observing, which has an active role in quantum mechanics, unlike in Pearl's causation hierarchy. Quantum contextuality asserts fundamental acausal relations between events, manifest as bugs! A bug could be a way into the depth of an iceberg! Technically, DAGs are insufficient to reveal the deeper structure of nature, where unrelated events could be related in a non-trivial way via topology.

Pearl's vision is significant in the sense that he formally constructed a theory of causation, and this integrity is elegant!

Can you point to a fully worked out practical example using observational data and taking into account data uncertainties, i.e., uncertainties of statistical inference? – Frank Harrell Oct 29 '21 at 19:42

The conjoining of phrases like "quantum contextuality," "deeper structure of nature," and "topology" in a forum on statistics inevitably brings to mind the insights in [Harry Frankfurt's book](https://press.princeton.edu/books/hardcover/9780691122946/on-bullshit). – whuber Oct 31 '21 at 16:14

Causation is at the heart of algorithmic reasoning. Statistics is just a tool! A causal model is based on an equivalence between the propositions that infer events and the events that actually took place. The impactful consequence of this indistinguishability is a subject matter of the foundations of mathematics and computer science. It's not absurd! Quantum contextuality implies acausal/non-local relations between events that are interpreted to general classical systems using topology. @whuber – Sahil Imtiyaz Oct 31 '21 at 23:34