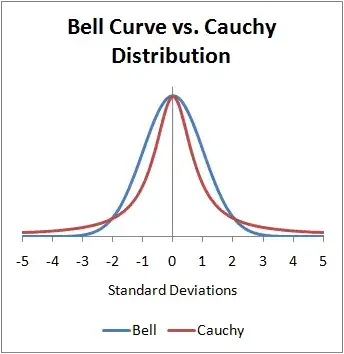

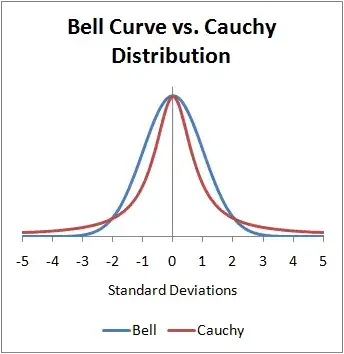

From the density function we could identify a mean (= 0) for the Cauchy distribution, just as the graph above shows. But why do we say the Cauchy distribution has no mean?

You can mechanically check that the expected value does not exist, but this should be physically intuitive, at least if you accept Huygens' principle and the Law of Large Numbers. The conclusion of the Law of Large Numbers fails for a Cauchy distribution, so it can't have a mean. If you average $n$ independent Cauchy random variables, the result does not converge to $0$ as $n\to \infty$ with probability $1$. It stays a Cauchy distribution of the same size. This is important in optics.

The Cauchy distribution is the normalized intensity of light on a line from a point source. Huygens' principle says that you can determine the intensity by assuming that the light is re-emitted from any line between the source and the target. So, the intensity of light on a line $2$ meters away can be determined by assuming that the light first hits a line $1$ meter away, and is re-emitted at any forward angle. The intensity of light on a line $n$ meters away can be expressed as the $n$-fold convolution of the distribution of light on a line $1$ meter away. That is, the sum of $n$ independent Cauchy random variables has a Cauchy distribution scaled by a factor of $n$.

If the Cauchy distribution had a mean, then the $25$th percentile of the $n$-fold convolution divided by $n$ would have to converge to $0$ by the Law of Large Numbers. Instead it stays constant. If you mark the $25$th percentile on a (transparent) line $1$ meter away, $2$ meters away, etc. then these points form a straight line, at $45$ degrees. They don't bend toward $0$.
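The claim about the $25$th percentile can be checked with a quick simulation. This is only a sketch using the Python standard library; the helper names, the seed and the replication count are my own choices, not part of the argument above. It estimates the $25$th percentile of the average of $n$ standard Cauchy draws, which should stay near $-1$ for every $n$ rather than shrink toward $0$.

```python
import math
import random
import statistics

def cauchy_sample(rng):
    """Draw one standard Cauchy variate by inverting the CDF."""
    return math.tan(math.pi * (rng.random() - 0.5))

def lower_quartile_of_mean(n, replications, rng):
    """Empirical 25th percentile of the average of n standard Cauchy draws."""
    means = [
        sum(cauchy_sample(rng) for _ in range(n)) / n
        for _ in range(replications)
    ]
    q1, _median, _q3 = statistics.quantiles(means, n=4)
    return q1

rng = random.Random(0)  # fixed seed so the run is repeatable
quartiles = {n: lower_quartile_of_mean(n, 4000, rng) for n in (1, 10, 100)}
for n, q1 in quartiles.items():
    print(f"n = {n:>3}: 25th percentile of the sample mean ~ {q1:.2f}")
```

For a distribution with a finite mean, the same experiment would show the percentile moving toward the mean as $n$ grows.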

This tells you about the Cauchy distribution in particular, but you should know the integral test because there are other distributions with no mean which don't have a clear physical interpretation.

Answer added in response to @whuber's comment on Michael Chernick's answer (and re-written completely to remove the error pointed out by whuber.)

The value of the integral for the expected value of a Cauchy random variable is said to be undefined because the value can be "made" to be anything one likes. The integral $$\int_{-\infty}^{\infty} \frac{x}{\pi(1+x^2)}\,\mathrm dx$$ (interpreted in the sense of a Riemann integral) is what is commonly called an improper integral and its value must be computed as a limiting value: $$\int_{-\infty}^{\infty} \frac{x}{\pi(1+x^2)}\,\mathrm dx = \lim_{T_1\to-\infty}\lim_{T_2\to+\infty} \int_{T_1}^{T_2} \frac{x}{\pi(1+x^2)}\,\mathrm dx$$ or $$\int_{-\infty}^{\infty} \frac{x}{\pi(1+x^2)}\,\mathrm dx = \lim_{T_2\to+\infty}\lim_{T_1\to-\infty} \int_{T_1}^{T_2} \frac{x}{\pi(1+x^2)}\,\mathrm dx$$ and, of course, both evaluations should give the same finite value. If not, the integral is said to be undefined. This immediately shows why the mean of the Cauchy random variable is said to be undefined: the limiting value in the inner limit diverges.

The Cauchy principal value is obtained as a single limit: $$\lim_{T\to\infty} \int_{-T}^{T} \frac{x}{\pi(1+x^2)}\,\mathrm dx$$ instead of the double limit above. The principal value of the expectation integral is easily seen to be $0$ since the limitand has value $0$ for all $T$. But this cannot be used to say that the mean of a Cauchy random variable is $0$. That is, the mean is defined as the value of the integral in the usual sense and not in the principal value sense.

For $\alpha > 0$, consider instead the integral

$$\begin{align}
\int_{-T}^{\alpha T} \frac{x}{\pi(1+x^2)}\,\mathrm dx
&= \int_{-T}^{T} \frac{x}{\pi(1+x^2)}\,\mathrm dx
+ \int_{T}^{\alpha T} \frac{x}{\pi(1+x^2)}\,\mathrm dx\\
&= 0 + \left.\frac{\ln(1+x^2)}{2\pi}\right|_T^{\alpha T}\\
&= \frac{1}{2\pi}\ln\left(\frac{1+\alpha^2T^2}{1+T^2}\right)\\
&= \frac{1}{2\pi}\ln\left(\frac{\alpha^2+T^{-2}}{1+T^{-2}}\right)
\end{align}$$

which approaches a limiting value of $\displaystyle \frac{\ln(\alpha)}{\pi}$ as $T\to\infty$. When $\alpha = 1$, we get the principal value $0$ discussed above. Thus, we cannot assign an unambiguous meaning to the expression $$\int_{-\infty}^{\infty} \frac{x}{\pi(1+x^2)}\,\mathrm dx$$ without specifying how the two infinities were approached, and to ignore this point leads to all sorts of complications and incorrect results because things are not always what they seem when the milk of principal value masquerades as the cream of value. This is why the mean of the Cauchy random variable is said to be undefined rather than have value $0$, the principal value of the integral.
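The dependence on $\alpha$ is easy to see numerically. The following is a minimal sketch: the function name `truncated_integral` and the value of $T$ are assumptions for illustration, and it simply evaluates the antiderivative $\ln(1+x^2)/(2\pi)$ at the two endpoints.

```python
import math

def truncated_integral(lower, upper):
    """Integral of x / (pi * (1 + x^2)) over [lower, upper].

    Uses the antiderivative ln(1 + x^2) / (2 * pi).
    """
    return (math.log1p(upper ** 2) - math.log1p(lower ** 2)) / (2 * math.pi)

T = 1e6  # a large but finite cutoff
for alpha in (0.5, 1.0, 2.0, 10.0):
    value = truncated_integral(-T, alpha * T)
    limit = math.log(alpha) / math.pi
    print(f"alpha = {alpha:>4}: integral = {value:+.6f}, ln(alpha)/pi = {limit:+.6f}")
```

Each choice of $\alpha$ sends both endpoints to infinity, yet each gives a different answer, which is exactly the ambiguity described above.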

If one is using the measure-theoretic approach to probability and the expected value integral is defined in the sense of a Lebesgue integral, then the issue is simpler. $\int g$ is defined only when at least one of $\int g^+$ and $\int g^-$ is finite (and it is finite only when $\int |g|$ is finite), and so $E[X]$ is undefined for a Cauchy random variable $X$ since $E[X^+]$ and $E[X^-]$ are both infinite; in particular $E[|X|]$ is not finite.

While the above answers are valid explanations of why the Cauchy distribution has no expectation, I find the fact that the ratio $X_1/X_2$ of two independent normal $\mathcal{N}(0,1)$ variates is Cauchy just as illuminating: indeed, we have $$ \mathbb{E}\left[ \frac{|X_1|}{|X_2|} \right] = \mathbb{E}\left[ |X_1| \right] \times \mathbb{E}\left[ \frac{1}{|X_2|} \right] $$ and the second expectation is $+\infty$.
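For completeness, here is one way to see that the second expectation diverges (a short sketch; the bound $e^{-x^2/2}\ge e^{-1/2}$ on $(0,1)$ is the only ingredient): $$ \mathbb{E}\left[ \frac{1}{|X_2|} \right] = \int_{-\infty}^{\infty} \frac{1}{|x|}\,\frac{e^{-x^2/2}}{\sqrt{2\pi}}\,\mathrm dx \;\ge\; \frac{2e^{-1/2}}{\sqrt{2\pi}} \int_{0}^{1} \frac{\mathrm dx}{x} = +\infty, $$ while $\mathbb{E}\left[|X_1|\right] = \sqrt{2/\pi}$ is finite and positive, so the product is $+\infty$.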

The Cauchy has no mean because the point you select (0) is not a mean. It is a median and a mode. The mean for an absolutely continuous distribution is defined as $\int x f(x) dx$ where $f$ is the density function and the integral is taken over the domain of $f$ (which is $-\infty$ to $\infty$ in the case of the Cauchy). For the Cauchy density, this integral is simply not finite (the half from $-\infty$ to $0$ is $-\infty$ and the half from $0$ to $\infty$ is $\infty$).

The Cauchy distribution is best thought of as the uniform distribution on a unit circle, so it would be surprising if averaging made sense. Suppose $f$ were some kind of "averaging function". That is, suppose that, for each finite subset $X$ of the unit circle, $f(X)$ was a point of the unit circle. Clearly, $f$ has to be "unnatural". More precisely $f$ cannot be equivariant with respect to rotations. To obtain the Cauchy distribution in its more usual, but less revealing, form, project the unit circle onto the x-axis from (0,1), and use this projection to transfer the uniform distribution on the circle to the x-axis.
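The projection can be tried out directly. This is a sketch under my own assumptions (the function name, seed and sample size are made up for illustration): a point $(\cos\theta, \sin\theta)$ on the circle, joined to $(0,1)$ by a straight line, hits the x-axis at $\cos\theta/(1-\sin\theta)$. If the construction is right, the quartiles of the projected points should sit near $-1$, $0$ and $1$, the quartiles of a standard Cauchy.

```python
import math
import random
import statistics

def project_from_north_pole(theta):
    """Project the circle point (cos theta, sin theta) from (0, 1) onto the x-axis.

    The single angle theta = pi / 2 (the projection point itself) has no image;
    a random draw essentially never lands exactly on it.
    """
    return math.cos(theta) / (1.0 - math.sin(theta))

rng = random.Random(1)  # fixed seed so the run is repeatable
xs = [project_from_north_pole(rng.uniform(0.0, 2.0 * math.pi)) for _ in range(100_000)]
q1, median, q3 = statistics.quantiles(xs, n=4)
print(f"quartiles of the projected points: {q1:.2f}, {median:.2f}, {q3:.2f}")
```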

To understand why the mean doesn't exist, think of $x$ as a function on the unit circle. It's quite easy to find an infinite number of disjoint arcs on the unit circle, such that, if one of the arcs has length $d$, then $x > 1/(4d)$ on that arc. So each of these disjoint arcs contributes more than $1/4$ to the mean (arc length $d$ times $1/(4d)$, up to the normalizing constant of the uniform distribution), and the total contribution from these arcs is infinite. We can do the same thing again, but with $x < -1/(4d)$, with a total contribution of minus infinity. These intervals could be displayed with a diagram, but can one make diagrams for Cross Validated?

The mean or expected value of some random variable $X$ is a Lebesgue integral defined over some probability measure $P$: $$EX=\int XdP$$

The nonexistence of the mean of a Cauchy random variable just means that this integral does not exist for a Cauchy r.v. This is because the tails of the Cauchy distribution are heavy (compare them with the tails of the normal distribution). However, nonexistence of the expected value does not rule out the existence of expectations of other functions of a Cauchy random variable.

Here is more of a visual explanation (for those of us who are math challenged). Take a Cauchy-distributed random number generator and try averaging the resulting values. Here is a good page on a function for this: https://math.stackexchange.com/questions/484395/how-to-generate-a-cauchy-random-variable You will find that the "spikiness" of the random values keeps the running average jumping around as you go instead of settling down. Hence it has no mean.
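A minimal sketch of that experiment, using only the Python standard library (the seed, sample size and helper name are arbitrary choices of mine). It generates Cauchy values by the inverse-CDF method, $\tan(\pi(U - 1/2))$ for uniform $U$, and prints the running average next to the running average of normal values for comparison.

```python
import math
import random

def running_means(draws):
    """Yield the mean of the first k draws for k = 1, 2, ..."""
    total = 0.0
    for k, value in enumerate(draws, start=1):
        total += value
        yield total / k

rng = random.Random(42)  # fixed seed so the run is repeatable
n = 100_000
cauchy = [math.tan(math.pi * (rng.random() - 0.5)) for _ in range(n)]
normal = [rng.gauss(0.0, 1.0) for _ in range(n)]

cauchy_path = list(running_means(cauchy))
normal_path = list(running_means(normal))

for k in (100, 1_000, 10_000, 100_000):
    print(f"k = {k:>6}: Cauchy mean {cauchy_path[k - 1]:8.3f}, "
          f"normal mean {normal_path[k - 1]:8.3f}")
```

Plotting `cauchy_path` against `normal_path` makes the point visually: the normal path flattens out, while the Cauchy path keeps getting knocked around by occasional enormous values.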

Just to add to the excellent answers, I will make some comments about why the nonconvergence of the integral is relevant for statistical practice. As others have mentioned, if we allowed the principal value to be a "mean" then the strong law of large numbers (SLLN) would no longer be valid! Apart from this, think about the implications of the fact that, in practice, all models are approximations. Specifically, the Cauchy distribution is a model for an unbounded random variable. In practice, random variables are bounded, but the bounds are often vague and uncertain. Using unbounded models is a way to alleviate that: it makes it unnecessary to introduce unsure (and often unnatural) bounds into the models. But for this to make sense, important aspects of the problem should not be affected. That means that, if we were to introduce bounds, that should not alter the model in important ways. But when the integral is nonconvergent that does not happen! The model is unstable, in the sense that the expectation of the RV would depend on the largely arbitrary bounds. (In applications, there is not necessarily any reason to make the bounds symmetric!)
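To make the instability concrete, here is a small sketch (the function name and the particular bounds are hypothetical, chosen only for illustration). It computes the mean of a standard Cauchy variable conditioned to lie in $[a, b]$, which works out to $\tfrac12\ln\!\big(\tfrac{1+b^2}{1+a^2}\big) / (\arctan b - \arctan a)$.

```python
import math

def truncated_cauchy_mean(lower, upper):
    """Mean of a standard Cauchy variable conditioned to lie in [lower, upper]."""
    numerator = 0.5 * (math.log1p(upper ** 2) - math.log1p(lower ** 2))
    denominator = math.atan(upper) - math.atan(lower)
    return numerator / denominator

for lower, upper in [(-1e3, 1e3), (-1e3, 1e4), (-1e3, 1e6), (-1e6, 1e3)]:
    mean = truncated_cauchy_mean(lower, upper)
    print(f"bounds [{lower:.0e}, {upper:.0e}]: mean = {mean:+.3f}")
```

All four sets of bounds are "very wide", yet the resulting means differ in both size and sign, depending on how lopsided the bounds are.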

For this reason, it is better to say the integral is divergent than to say it is "infinite", the latter coming close to implying some definite value when none exists! A more thorough discussion is here.

I wanted to be a bit picky for a second. The graphic at the top is wrong. The x-axis is in standard deviations, something that does not exist for the Cauchy distribution. I am being picky because I use the Cauchy distribution every single day of my life in my work. There is a practical case where the confusion could cause an empirical error. Student's t-distribution with 1 degree of freedom is the standard Cauchy. It will usually list various sigmas required for significance. These sigmas are NOT standard deviations, they are probable errors and mu is the mode.

If you wanted to do the above graphic correctly, either the x-axis is raw data, or if you wanted them to have equivalent sized errors, then you would give them equal probable errors. One probable error is .67 standard deviations in size on the normal distribution. In both cases it is the semi-interquartile range.
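The two numbers involved can be checked in a few lines. This is just a sketch with the Python standard library; `cauchy_quantile` is a helper I wrote for illustration. It takes the probable error to be the semi-interquartile range, as described above.

```python
import math
from statistics import NormalDist

def cauchy_quantile(p, location=0.0, scale=1.0):
    """Quantile function (inverse CDF) of a Cauchy distribution."""
    return location + scale * math.tan(math.pi * (p - 0.5))

# For a symmetric distribution the probable error is the 75th percentile
# measured from the centre.
normal_probable_error = NormalDist(mu=0.0, sigma=1.0).inv_cdf(0.75)
cauchy_probable_error = (cauchy_quantile(0.75) - cauchy_quantile(0.25)) / 2.0

print(f"standard normal: probable error = {normal_probable_error:.4f} standard deviations")
print(f"standard Cauchy: probable error = {cauchy_probable_error:.4f} (the scale parameter)")
```

So to draw the two curves with equal probable errors, the normal's standard deviation would have to be about $1/0.67$ times the Cauchy's scale parameter.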

Now as to an answer to your question, everything that everyone wrote above is correct and it is the mathematical reason for this. However, I suspect you are a student and new to the topic and so the counter-intuitive mathematical solutions to the visually obvious may not ring true.

I have two nearly identical real world samples, drawn from a Cauchy distribution, both have the same mode and the same probable error. One has a mean of 1.27 and one has a mean of 1.33. The one with a mean of 1.27 has a standard deviation of 400, the one with the mean of 1.33 has a standard deviation of 5.15. The probable error for both is .32 and the mode is 1. This means that for symmetric data, the mean is not in the central 50%. It only takes ONE additional observation to push the mean and/or the variance outside significance for any test. The reason is that the mean and the variance are not parameters and the sample mean and the sample variance are themselves random numbers.

The simplest answer is that the parameters of the Cauchy distribution do not include a mean and therefore no variance about a mean.

It is likely that in your past pedagogy the importance of the mean was in that it is usually a sufficient statistic. In long run frequency based statistics the Cauchy distribution has no sufficient statistic. It is true that the sample median, for a Cauchy distribution with support over the entire reals, is a sufficient statistic, but that is because it inherits it from being an order statistic. It is sort of coincidentally sufficient, lacking an easy way to think about it. Now in Bayesian statistics there is a sufficient statistic for the parameters of the Cauchy distribution and if you use a uniform prior then it is also unbiased. I bring this up because if you have to use them on a daily basis, you have learned about every way there is to perform estimations on them. This is due to the fact that inference between long run frequency based statistics and Bayesian statistics runs in opposite directions.

There are no valid order statistics that can be used as estimators for truncated Cauchy distributions, which are what you are likely to run into in the real world, and so there is no sufficient statistic in frequency based methods for most but not all real world applications.

What I suggest is to step away from the mean, mentally, as being something real. It is a tool, like a hammer, that is broadly useful and can usually be used. Sometimes that tool won't work.

A mathematical note on the normal and the Cauchy distributions. When the data is received as a time series, then the normal distribution only happens when errors converge to zero as t goes to infinity. When data is received as a time series, then the Cauchy distribution happens when the errors diverge to infinity. One is due to a convergent series, the other due to a divergent series. Cauchy distributions never arrive at a specific point at the limit, they swing back and forth across a fixed point so that fifty percent of the time they are on one side and fifty percent of the time on the other. There is no median reversion.

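To put it simply, the area under the curve $x f(x)$ on each side of zero grows without bound as you zoom out (the area under the density $f(x)$ itself is always $1$). If you sample a finite region, you can find a mean for that region. However, there is no mean over the whole infinite range.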