I am wondering how to find the general normal stationary process satisfying $X_{n+2} + X_{n} = 0$. Any help would be much appreciated. I am relatively new to this area, so some details on how to do this in general would be very welcome. Alternatively, please feel free to point out relevant literature online.

-

What is the meaning of stationary? The condition that $X_{n+2}=-X_n$ puts a constraint on the random variables since there are really only two random variables $X_{\text{odd}}$ and $X_{\text{even}}$ in this random process. – Dilip Sarwate Oct 07 '12 at 21:14

-

@DilipSarwate Sorry for the late reply. I would imagine stationarity has the following implications here: 1) the mean is independent of time; 2) the covariance between any two readings is independent of time. Any thoughts? – Comic Book Guy Oct 08 '12 at 01:25

-

Unless you choose all your $X_i$ to have zero mean, the mean of a process with the property that $X_{n+2}=-X_n$ can hardly be said to be constant, right? In fact, the mean function has values $$\ldots, \mu_{\text{even}}, \mu_{\text{odd}}, - \mu_{\text{even}}, -\mu_{\text{odd}},\mu_{\text{even}}, \mu_{\text{odd}},\ldots$$ right? Also, the covariance function of a stationary process does _not_ have the property that you think it has. You may want to look at [this answer](http://dsp.stackexchange.com/a/488/235) on dsp.SE to see what might be an appropriate set of conditions to require. – Dilip Sarwate Oct 08 '12 at 01:40

-

@DilipSarwate I am happy to consider only the case where the mean is 0. I believe that would be known as a normalised time series, right? I am going to read the link you referred to and will get back to you when I have done so. – Comic Book Guy Oct 08 '12 at 02:02

-

@DilipSarwate Thanks for referring me to that excellent answer; I hope more smart people choose to provide such knowledge dumps. As for the covariance property I assumed, I got it from http://www.stat.columbia.edu/~rdavis/papers/VAG002.pdf. I assume the stochastic process I am considering is strictly stationary. I look forward to your thoughts. – Comic Book Guy Oct 08 '12 at 17:53

-

@DilipSarwate I think you are correct in pointing out that the covariance property does not hold; the covariance property is for weakly stationary processes. Since all weakly stationary processes are stationary (as per Gaussian Processes by Takeyuki Hida, Masuyuki Hitsuda, page 38), it may actually have the covariance property, but assuming our process is strictly stationary (smallest assumption) I guess I cannot make use of this information. I suppose that to solve this problem I should be trying to equate bivariate joint normal distributions of $(X_{n+2}, X_{-n})$ for n odd and even. Any thoughts? – Comic Book Guy Oct 08 '12 at 18:56

-

@DilipSarwate On re-reading the question I have come to realize that it has nothing to do with Gaussian stationary processes. The word normal is being used to describe the time series as normalized. – Comic Book Guy Oct 08 '12 at 19:24

2 Answers

My answer is somewhat different from the one posted by @mpiktas.

Consider a discrete-time random process $X_0, X_1, X_2, \ldots$ with the property that $X_{n+2} = -X_n$. This process actually has only two random variables $X_0$ and $X_1$: everything else is predetermined since the process must necessarily be $$X_0, X_1, -X_0, -X_1, X_0, X_1, -X_0, -X_1, \cdots $$

Can such a process be a stationary process?

Well, in order for the process to be stationary, it is necessary that all the random variables have the same distribution. Thus, $X_0$ and $X_1$ must have the same distribution. So must $X_0$ and $X_2 = -X_0$ have the same distribution. If $f(x)$ denotes the common probability density function of $X_0$ and $X_1$ (including as a special case a discrete density function), then $f(x)$ must be an even function of $x$. If the density function admits a mean, the mean must be $0$. (Note that we could have $X_0$ and $X_1$ be standard Cauchy random variables whose density function is even but the mean does not exist, see e.g. the answers to this question).

Next, suppose that $(X_0,X_1)$ have joint density $f(x,y)$. If the process is stationary, then $(X_1,X_2) = (X_1,-X_0)$, $(X_2,X_3) = (-X_0,-X_1)$ and $(X_3,X_4) = (-X_1,X_0)$ all must have the same joint density. It follows that $$f(x,y) = f(y,-x) = f(-x,-y) = f(-y,x).$$ Note that this condition is always satisfied if $X_0$ and $X_1$ are independent (in addition to being identically distributed random variables with even density functions), e.g. the independent uniform $\{+1,-1\}$ random variables in @cardinal's comment on mpiktas's answer or independent zero-mean Gaussian random variables with the same variance. But I am not sure that independence is necessary for this relation to hold. (Note added in edit: In fact, as cardinal's insightful comment on this answer shows, $(X_0,X_1)$ taking on values $(1,0), (0,1), (-1,0), (0,-1)$ with equal probability satisfy the above condition but are not independent.)

To tie this in with mpiktas's answer, note that if $X_0$ and $X_1$ have finite variance $\sigma^2$, then, for a stationary process, the autocorrelation function $R_X(m,m+n) = E[X_mX_{m+n}]$ (which is the same as the autocovariance function $C_X(m,m+n) = \text{cov}(X_m, X_{m+n})$ since the mean is $0$) must not depend at all on the choice of $m$, but must be a function only of $n$, the separation between the variables. From this we get that $$\text{cov}(X_0, X_1) = \text{cov}(X_1,X_2) = \text{cov}(X_1, - X_0) = -\text{cov}(X_0, X_1),$$ that is, $X_0$ and $X_1$, the only two random variables in the process, must be uncorrelated random variables.

Note also that the covariance matrix in mpiktas's answer must be of the form $$\begin{bmatrix} r(0) & 0 & -r(0) & 0 & r(0) & \ldots \\ 0 & r(0) & 0 & -r(0) & 0 & \ldots \\ -r(0) & 0 & r(0) & 0 & -r(0) & \ldots \\ 0 & -r(0) & 0 & r(0) & 0 & \ldots \\ r(0) & 0 & -r(0) & 0 & r(0) & \ldots \\ \ldots & \ldots & \ldots & \ldots & \ldots & \ldots \end{bmatrix}$$ In particular, there is no need to consider values of $r(1)$ other than $0$ and simulate anything to see if the eigenvalues are positive or not.
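
As a quick numerical sanity check of this matrix form, here is a minimal R sketch. The helper name `cov_matrix` is hypothetical (it does not come from either answer), and it assumes $r(1)=0$, so that $r(h) = r(0)\cos(h\pi/2)$ reproduces the pattern $r(0), 0, -r(0), 0, \ldots$:

```r
# Hypothetical helper (not from the answers): covariance matrix of
# (X_0, ..., X_{n-1}) when r(1) = 0, using r(h) = r0 * cos(h * pi / 2),
# which equals r0, 0, -r0, 0, r0, ... for h = 0, 1, 2, 3, 4, ...
cov_matrix <- function(n, r0 = 1) {
  h <- 0:(n - 1)
  toeplitz(r0 * round(cos(h * pi / 2)))
}

S <- cov_matrix(5)
print(S)

# Eigenvalues in decreasing order; symmetric = TRUE keeps them real
ev <- eigen(S, symmetric = TRUE)$values
print(round(ev, 10))
```

For $n=5$ and $r(0)=1$ the matrix has rank 2 (eigenvalues 3 and 2, the rest zero up to rounding), which matches the fact that the whole process is built from only two random variables.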

In summary, there do exist discrete-time stationary normal random processes with the property that $X_{n+2} = -X_n$. Such random processes are necessarily of the form $$X_0, X_1, -X_0, -X_1, X_0, X_1, -X_0, -X_1, \cdots $$ where $X_0$ and $X_1$ are zero-mean uncorrelated Gaussian random variables with the same variance. As a special case, $X_0$ and $X_1$ being independent $N(0,\sigma^2)$ random variables will work since independent Gaussian random variables are uncorrelated. But we could also have $X_1 = ZX_0$ where $Z$, which takes on values $+1$ and $-1$ with equal probability $\frac{1}{2}$, is independent of $X_0$. (This is a standard example of uncorrelated marginally Gaussian random variables that are not independent; they are not jointly Gaussian.)

It must also be said that these random processes are not particularly interesting as a model for random phenomena, since every realization (or sample path) of the process is necessarily of the form $$a, b, -a, -b, a, b, -a, -b, \cdots$$ for independent $X_0$ and $X_1$, and of the form $$a, a, -a, -a, a, a, -a, -a \cdots~~~~~ \text{or}~~~~~ a, -a, -a, a, a, -a, -a, a,\cdots$$ for the uncorrelated but dependent choice $X_1 = ZX_0$.
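
To make the two constructions concrete, the following R sketch generates one sample path of each. The helper names `make_path` and `check`, the path length, and the seed are illustrative assumptions, not part of the answer:

```r
# Hypothetical helper: expand (x0, x1) into x0, x1, -x0, -x1, x0, ...
make_path <- function(x0, x1, len = 8) {
  rep(c(x0, x1, -x0, -x1), length.out = len)
}

set.seed(1)  # arbitrary seed, only for reproducibility

# Construction 1: X_0 and X_1 independent N(0, 1)
path_indep <- make_path(rnorm(1), rnorm(1))

# Construction 2: X_1 = Z * X_0 with Z = +1 or -1, independent of X_0
x0 <- rnorm(1)
z <- sample(c(-1, 1), 1)
path_dep <- make_path(x0, z * x0)

print(path_indep)
print(path_dep)

# Both paths satisfy X_{n+2} + X_n = 0 for every n
check <- function(x) all(x[-(1:2)] + x[1:(length(x) - 2)] == 0)
print(c(check(path_indep), check(path_dep)))
```

In the second path every entry has the same absolute value, which is the sense in which such realizations look like $a, a, -a, -a, \ldots$ or $a, -a, -a, a, \ldots$.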

-

The two variables need not be independent. Consider $(X_0,X_1)$ uniform on $\{(1,0), (0,1), (-1,0), (0,-1)\}$. Though I have not checked this carefully (yet), more generally, if $U_1$ and $U_2$ are iid symmetric random variables, I believe $X_0 = U_1 + U_2$ and $X_1 = U_1 - U_2$ should work. – cardinal Oct 10 '12 at 04:01

-

@cardinal Thank you for confirming my conjecture. As I said in my answer, the random variables "...must be _uncorrelated_ random variables; independence might not be necessary." For $U_1$ and $U_2$ being iid symmetric and $X_0 = U_1+U_2$, $X_1 = U_1-U_2$, $\text{cov}(X_0,X_1)=0$ by the bilinearity of covariance. Of course, if $U_1$ and $U_2$ are _Gaussian_ as the OP belatedly admitted his instructor wants them to be, then $X_0$ and $X_1$ are also independent zero-mean Gaussian random variables. – Dilip Sarwate Oct 10 '12 at 13:00

-

Yes, I meant it to be a confirmatory statement. Apologies if it did not sound that way. :-) The intuition behind the end of my last comment is that, e.g., in the case of a density, the construction should rotate it by 45 degrees, thus satisfying the constraints you mention. But existence of a density is not required for the "result" (which I still haven't checked). – cardinal Oct 10 '12 at 13:15

-

@DilipSarwate Thank you for your excellent and insightful answer. This is a problem from Feller's book, and he gives $X_n = U \cos (n\pi/2) + V \sin(n\pi/2)$ as the solution to this problem. I still don't see how he got that, though. – Comic Book Guy Oct 10 '12 at 20:03

-

@Hardy: *I don't see how he still got that though.* Try calculating $\cos(n\pi/2)$ and $\sin(n\pi/2)$ for a few values of $n$ and then compare it to Dilip's answer. – cardinal Oct 10 '12 at 20:16
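
The calculation suggested in this comment can be sketched in R as follows. The values assigned to `u` and `v` are arbitrary stand-ins for one realization of $U$ and $V$, chosen only for illustration:

```r
n <- 0:7

# round() removes floating-point residue such as cos(pi / 2) = 6.1e-17
coef_u <- round(cos(n * pi / 2))
coef_v <- round(sin(n * pi / 2))
print(rbind(n, coef_u, coef_v))

# Illustrative realized values of U and V (arbitrary choices)
u <- 2
v <- 5
x <- u * coef_u + v * coef_v
print(x)
```

The coefficients cycle through $(1,0), (0,1), (-1,0), (0,-1)$, so `x` comes out as $u, v, -u, -v, u, v, -u, -v$, which is exactly the pattern $X_0, X_1, -X_0, -X_1, \ldots$ with $X_0 = U$ and $X_1 = V$.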

-

@cardinal Thanks for that. Look, I get that the formula in my comment would produce the sequence in the answer above, but in a way I am retrofitting the answer to the sequence. I was hoping that there is a generic technique to get to such formulas, but I'd imagine the answer to that is no. The algorithm to compute such answers would be to determine the sequence and then try to find a formula which produces that sequence. Please let me know if I am mistaken. – Comic Book Guy Oct 10 '12 at 21:47

If the equation has a stationary solution, it is always a good idea to check whether the covariance function satisfies the required properties.

So if we assume that there is a stationary solution to the equation $X_{n+2}+X_{n}=0$, then clearly $EX_n=0$. The covariance function of such a process must satisfy the relationships

$$r(2k)=(-1)^kr(0)$$ $$r(2k+1)=(-1)^{k}r(1)$$

for all $k\in \mathbb{Z}$. But for $r$ to be a covariance function, the matrix

$$\begin{bmatrix} r(0) & r(1) & ... & r(n)\\ r(1) & r(0) & ... & r(n-1)\\ ... & ... & ... & ...\\ r(n) & r(n-1) & ... & r(0) \end{bmatrix}$$

must be positive semidefinite for each $n$. Equivalently, the inequality

$$\sum_{i=1}^n\sum_{j=1}^na_{i}a_{j}r(i-j)\ge 0$$

must hold for any $n\in \mathbb{N}$ and any $(a_1,...,a_n)\in \mathbb{R}^n$.
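
The relations for $r(2k)$ and $r(2k+1)$ follow from a one-step recursion. As a sketch, writing $r(h)=E[X_{n+h}X_n]$ (the mean is zero, and finite second moments are assumed) and using $X_{n+h+2}=-X_{n+h}$:

$$r(h+2) = E[X_{n+h+2}X_n] = E[-X_{n+h}X_n] = -r(h).$$

Iterating this $k$ times starting from $h=0$ and from $h=1$ gives $r(2k)=(-1)^k r(0)$ and $r(2k+1)=(-1)^k r(1)$.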

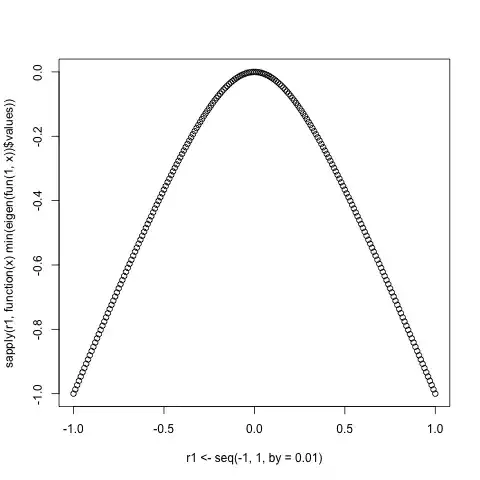

To get an idea, we can see what happens in the case $n=3$. Let us fix $r(0)=1$; then $r(1)$ can range from $-1$ to $1$, since a covariance function must satisfy $|r(i)|\le r(0)$ for all $i$. Now create the corresponding covariance matrix and calculate its minimal eigenvalue. If the matrix is positive semidefinite, the minimal eigenvalue should be non-negative. Here is the code:

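    # Toeplitz covariance matrix for n = 3 with r(0) = a, r(1) = b, r(2) = -a
    fun <- function(a, b) matrix(c(a, b, -a, b, a, b, -a, b, a), ncol = 3)

    fun(1, 0.4)
    #      [,1] [,2] [,3]
    # [1,]  1.0  0.4 -1.0
    # [2,]  0.4  1.0  0.4
    # [3,] -1.0  0.4  1.0

    # Minimal eigenvalue as a function of r(1)
    r1 <- seq(-1, 1, by = 0.01)
    plot(r1, sapply(r1, function(x) min(eigen(fun(1, x))$values)))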
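
For readers who prefer a check without a plot, here is a small self-contained variant of the same computation. It redefines `fun` as above; the integer-based grid and the tolerance `1e-8` are arbitrary choices made for this sketch:

```r
# Same 3 x 3 matrix as in the answer: r(0) = a, r(1) = b, r(2) = -a
fun <- function(a, b) matrix(c(a, b, -a, b, a, b, -a, b, a), ncol = 3)

# Grid built from integers so that r(1) = 0 is hit exactly
r1 <- (-100:100) / 100
min_ev <- sapply(r1, function(x) {
  min(eigen(fun(1, x), symmetric = TRUE)$values)
})

# Values of r(1) whose minimal eigenvalue is non-negative up to a
# small tolerance (the tolerance 1e-8 is an arbitrary choice)
ok <- r1[min_ev > -1e-8]
print(ok)
```

On this grid the only surviving value is $r(1)=0$, in line with what the plot shows.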

We see that the minimal eigenvalue of the covariance matrix is non-negative only when $r(1)=0$. For this case we have

\begin{align} \sum_{i=1}^n\sum_{j=1}^na_ia_jr(i-j)&=r(0)\sum_{k}\sum_l a_{2k}a_{2l}(-1)^{k-l}+r(0)\sum_{k}\sum_{l}a_{2k-1}a_{2l-1}(-1)^{k-l}\\ &=r(0)\left(\sum_k a_{2k}(-1)^{k+1}\right)^2+r(0)\left(\sum_k a_{2k-1}(-1)^{k+1}\right)^2\ge 0 \end{align}

So the solution exists when $r(1)=0$. Probably it is possible to prove that this is the only case, i.e. that $r(1)$ cannot take any other value.
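
For the $3\times 3$ case the eigenvalues can also be worked out by hand, which gives a check that does not rely on the plot. The following is a sketch with $r(0)=1$; the symbol $\rho$ for $r(1)$ is introduced here only for illustration.

$$\det\begin{bmatrix} 1-\lambda & \rho & -1\\ \rho & 1-\lambda & \rho\\ -1 & \rho & 1-\lambda \end{bmatrix} = -(\lambda-2)\left(\lambda^2-\lambda-2\rho^2\right),$$

so the eigenvalues are $2$ and $\frac{1\pm\sqrt{1+8\rho^2}}{2}$. The smallest one,

$$\lambda_{\min}=\frac{1-\sqrt{1+8\rho^2}}{2}\le 0,$$

equals $0$ only for $\rho=0$. Hence already for $n=3$ the matrix is positive semidefinite only when $r(1)=0$; for a general $r(0)>0$ the same conclusion follows by scaling the matrix by $r(0)$.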

-

mpiktas, ignoring for a moment whatever the OP really means by "normal" (there appears to be some confusion in this regard), Dilip's comment provides an example that should be clearly seen to be strictly stationary. For a binary example, let $X_1$ and $X_2$ be independent uniform on $\{-1,+1\}$ and consider the sequence $X_1, X_2, -X_1, -X_2, X_1,\ldots$. The finite dimensional distributions are shift invariant. The same technique works taking standard normals instead of uniform on $\{-1,+1\}$. – cardinal Oct 09 '12 at 17:08

-

@cardinal, well this coincides with $r(1)=0$. It seems that the matrix can be semi-positive definite. Then it is ok. I'll change my answer to reflect that. – mpiktas Oct 09 '12 at 17:51

-

@mpiktas Thank you for your post; it was very educational. I spoke to my uni lecturer and he said that "normal" actually refers to Gaussian in this question. Without having done any calculations himself, he gave me a hint to try evaluating the auto-covariance. I am going to try that this evening and will post my findings. – Comic Book Guy Oct 09 '12 at 20:05