I have read that this doesn't work, but I do not understand exactly why. Please can someone explain.

-

3https://en.wikipedia.org/wiki/Cauchy_distribution#Explanation_of_undefined_moments – corey979 Oct 12 '21 at 20:24

-

5My pen began smoking... The paper caught fire... I had to put it out. Computing with divergent integrals can be hazardous! – whuber Oct 12 '21 at 20:33

-

1We have many explanations. They can be found with [a site search](https://stats.stackexchange.com/search?q=cauchy+distribution+variance+infinit*). One of the more useful threads is https://stats.stackexchange.com/questions/91512/. – whuber Oct 12 '21 at 20:45

-

Also relevant is [What is the difference between finite and infinite variance](http://stats.stackexchange.com/questions/94402/), but the key point here is that the variance of the Cauchy ($t$ with 1 degree of freedom) is undefined, as it has to be since the mean is undefined! Whereas for the $t$ distribution with 2 df, the mean is zero and the variance is infinite. – Silverfish Oct 13 '21 at 00:42

-

I haven't found in any of the threads a really good explanation of what happens if you try to find the mean and variance of $t_1$, $t_2$ and $t_3$. I think differences between those 3 cases would be instructive to the OP as to the different issues that can arise (eg "undefined" vs "infinite"). As such I don't think the question should be closed as duplicate, unless a better-matched thread comes to light! Perhaps the question that comes closest is [Why does the Cauchy distribution have no mean?](https://stats.stackexchange.com/q/36027) - clearly undefined mean implies undefined variance! – Silverfish Oct 13 '21 at 00:51

-

@Silverfish That first comment is incorrect, because the variance can be defined without reference to the mean. The variance of the Cauchy distribution is well-defined, exists, and is *infinite.* These points are all discussed in various related threads. – whuber Oct 13 '21 at 13:44

-

1@whuber [This discussion on whether variance must be defined with reference to the mean](https://stats.stackexchange.com/questions/91512/how-can-a-distribution-have-infinite-mean-and-variance#comment179342_91515) is interesting, though it seems fair to say that definition of variance in terms of the mean is much more commonly seen, even if it has some disadvantages compared to eg looking at squares of differences between pairs of data-points. – Silverfish Oct 13 '21 at 14:03

-

Plenty of reputable sources [eg the NIST Engineering Statistics Handbook](https://www.itl.nist.gov/div898/handbook/eda/section3/eda3663.htm) do take the approach that the variance of the Cauchy distribution is "undefined" rather than "infinite". I'm not sure they're *incorrect* to do that. I think a really good answer to the OP's question would address the matter of what "taste" in definitions is required for either "infinite" or "undefined" to become the more *palatable* answer to a pathological problem... – Silverfish Oct 13 '21 at 14:09

-

1I should certainly post a corrigendum to my earlier statement that the Cauchy distribution's variance is undefined "as it has to be since the mean is undefined" - this is obviously true if we define the variance in terms of the mean, but this is not the only way that variance can be defined, as @whuber points out – Silverfish Oct 13 '21 at 14:26

2 Answers

Comment. One clue is that you get erratic results when you try Monte Carlo approximation.

Student's t distribution with one degree of freedom is standard Cauchy. In the R code below I try to approximate the (nonexistent) standard deviation of this distribution by finding the sample SD of a sample of a million. In six tries I get six very different answers. The very fat tails of the Cauchy distribution (which prevent existence of the population standard deviation) give far outliers at random that make the sample standard deviations very different from one run to another.

set.seed(1234)

sd(rt(10^6,1))

[1] 4369.947

sd(rt(10^6,1))

[1] 546.1966

sd(rt(10^6,1))

[1] 1111.853

sd(rt(10^6,1))

[1] 1040.681

sd(rt(10^6,1))

[1] 975.8808

sd(rt(10^6,1))

[1] 597.3756

By contrast, if I do the same thing for a normal distribution with $\sigma = 50,$ I get consistent answers to about two or three significant digits.

set.seed(1235)

sd(rnorm(10^6,0,50))

[1] 50.00368

sd(rnorm(10^6,0,50))

[1] 50.05707

sd(rnorm(10^6,0,50))

[1] 50.0941

sd(rnorm(10^6,0,50))

[1] 50.01311

sd(rnorm(10^6,0,50))

[1] 49.98236

sd(rnorm(10^6,0,50))

[1] 49.99185

The method also works for samples of a million from an exponential population with rate $1/50,$ mean $50,$ and standard deviation $50.$ (Agreement to about two significant digits.)

set.seed(1236)

sd(rexp(10^6,1/50))

[1] 50.01171

sd(rexp(10^6,1/50))

[1] 50.07582

sd(rexp(10^6,1/50))

[1] 50.05828

sd(rexp(10^6,1/50))

[1] 50.04774

sd(rexp(10^6,1/50))

[1] 50.08311

sd(rexp(10^6,1/50))

[1] 50.04089

Finally, Student's t distribution with 3 degrees of freedom does have a standard deviation $(\sigma = \sqrt{3}\approx 1.732).$ (Results close to 1.7.)

set.seed(1237)

sd(rt(10^6,3))

[1] 1.71784

sd(rt(10^6,3))

[1] 1.733405

sd(rt(10^6,3))

[1] 1.730881

sd(rt(10^6,3))

[1] 1.717023

sd(rt(10^6,3))

[1] 1.739444

sd(rt(10^6,3))

[1] 1.736086

- 47,896

- 2

- 28

- 76

-

2The erratic results indeed can serve to illustrate one of the implications of having a huge variance. However, a Monte-Carlo explanation is ultimately unsatisfying because (among other things) it doesn't really distinguish distributions with finite variances from those with infinite variances. You will get equally erratic results when simulating, say, from a Student $t(2.01)$ distribution, which does have a finite variance. – whuber Oct 13 '21 at 13:48

I thought I would take a stab at it.

The standard formula for the standard deviation assumes that a standard deviation exists. One way to look at it would be to imagine that you created a pattern recognition program that recognizes noses. Imagine that if you feed in an image, then it will output the location of the nose.

Vertebrates have noses. Nothing else has a nose.

If you run the nose finding algorithm on a tree, it will still output the location of the tree’s nose. As you can imagine, that will be a meaningless thing to do.

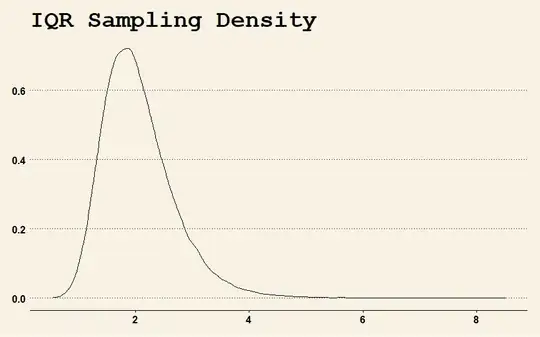

So what I did was create 100,000 samples of thirty observations each from the standard Cauchy distribution in R. I used it to compare the estimates of the interquartile range and the algorithmic estimate of the standard deviation that appears in most textbooks.

First, I estimated the interquartile range (IQR) and the standard deviation (SD) for each sample using the R functions IQR and sd. The distribution of one estimate versus the other is here.

Second, I restricted the estimate of the SD to 750, which is about 99.5% of the cases. Its graph is here.

Fifty percent of the data for the entire set falls between $\pm{1}$. Tukey’s five point summary for the data is

Minimum -417,190

1 Quartile -1

Median 0

3 Quartile 1

Maximum 4,285,676

The average estimated value of the SD is 52.28. Remember that half the data falls between -1 and 1.

Tukey’s five-point summary for the SD is

Minimum 0.75

1 Quartile 3.76

Median 6.47

3 Quartile 13.76

Maximum 782,453

On the other hand, the IQR is reasonably well behaved, as can be seen by the sampling distribution of the IQR. I could not produce a visual for the sampling distribution of the SD because it is too irregularly shaped.

The worst sample of the 100,000 samples, in terms of estimating the IQR was sample 42447. It's plot is

As you can see, the SD is all over the place because it doesn't exist. Formally speaking, it is undefined. So is the nose on a tree.

The code to create it was

rm(list=ls())

library(ggplot2)

library(ggthemes)

set.seed(500)

observations<-30

experiments<-100000

x<-matrix(rcauchy(observations*experiments),nrow = observations)

scale_estimates<-data.frame(standard_deviation=apply(x,2,sd), Interquartile_range=apply(x,2,IQR))

a<-ggplot(data = scale_estimates,aes(Interquartile_range,standard_deviation))+theme_wsj()

a<-a+geom_point()+ggtitle("IQR Versus Standard Deviation")

print(a)

a<-ggplot(data = scale_estimates,aes(Interquartile_range,standard_deviation))+theme_wsj()

a<-a+geom_point()+coord_cartesian(ylim = c(0,750))

print(a)

print(fivenum(scale_estimates$standard_deviation))

print(mean(scale_estimates$standard_deviation))

print(fivenum(as.vector(x)))

print(mean(x))

a<-ggplot(data = scale_estimates,aes(Interquartile_range))+theme_wsj()

a<-a+geom_density()+ggtitle("IQR Sampling Density")

print(a)

print(fivenum(scale_estimates$Interquartile_range))

print(mean(scale_estimates$Interquartile_range))

print(scale_estimates[scale_estimates$Interquartile_range>8.5,])

worst_estimator_data<-data.frame(x=x[,42447])

a<-ggplot(data = worst_estimator_data,aes(x))+theme_wsj()

a<-a+geom_density()+ggtitle("KDE of Worst Sample")

print(a)

I do apologize, I didn't annotate the R code. I got sleepy. You will need to change the worst plot if you change the seed or comment it out.

One thing I did not do was increase the sample size. If I had, the measurement of the SD would have gotten larger and crazier, while the sampling distribution of the IQR would have narrowed.

The estimates of the SD of the Cauchy distribution get worse as the sample size gets larger and larger.

I think this example emphasizes one very useful thing. The formulas that appear in textbooks are not the true values. They are estimators. In this case, a true mean, variance or standard deviation do not exist, but the formula doesn't vanish. It just doesn't do anything useful.

- 6,957

- 13

- 21

-

In this case the SD *does* exist, but is infinite. Please also see related comments at https://stats.stackexchange.com/questions/548039/what-happens-when-you-try-to-find-standard-deviation-of-a-non-truncated-cauchy#comment1006781_548039 and https://stats.stackexchange.com/questions/548039/what-happens-when-you-try-to-find-standard-deviation-of-a-non-truncated-cauchy#comment1006784_548071. – whuber Oct 13 '21 at 13:50

-

@whuber but infinity is not in the set of real numbers. It might go to infinity, but doesn't that, in part depend on how the integral is defined. I defer to you as this is your bailiwick as I may be misunderstanding the idea of a limit and a definition. That might be an interesting question for stack exchange if I could think about how to generalize it. It is being picky but it might matter. Does the wording of the definitions matter and does it produce differing intuition? Is there a lesson there? Some probability paradoxes, seem to be partly limit based. – Dave Harris Oct 15 '21 at 03:16

-

@whuber I will think about how to edit the post to conform to your comment. – Dave Harris Oct 15 '21 at 03:17