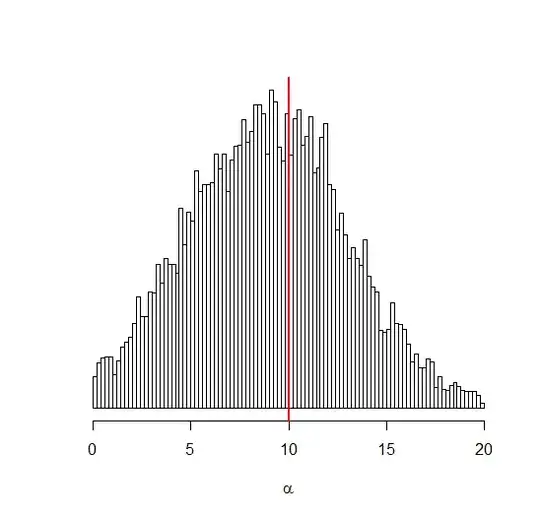

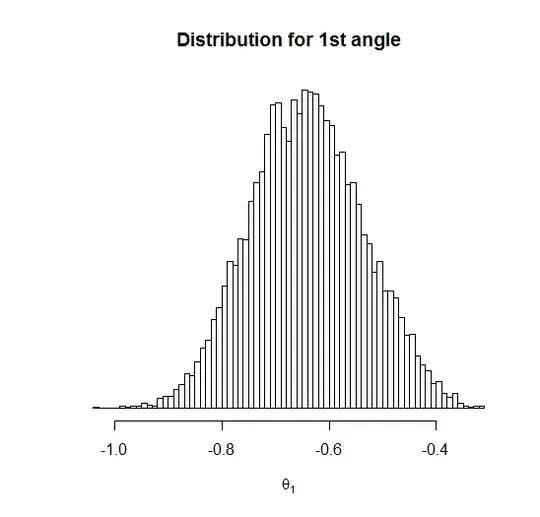

I am trying to learn Stan in R and as a fun challenge I am trying to estimate the location of a lighthouse based on the observed flashes. But the models I tried do not converge (Rhat != 1) or have estimated parameters with a large spread.

The observed data are flashes from a lighthouse 100 meters away the (straight) coast line. The angle is uniformly distributed, but the observed flashes along the coast line are heavy tailed.

n_flashes <- 50

loc <- c(0, 100)

angles <- runif(n_flashes, -pi/2, pi/2)

angles_x <- loc[2] * tan(angles)

flashes <- loc[1] + angles_x

I want to estimate the lighthouse location by given the actual model generation process to stan. This is my stan model:

data {

int<lower=0> N;

real flashes[N];

}

parameters {

real x_loc;

real<lower=0> y_loc;

real<lower=-pi()/2, upper=pi()/2> angle[N];

}

model {

x_loc ~ normal(0, 10);

y_loc ~ normal(100, 10);

for (i in 1:N) {

flashes[i] ~ cauchy(x_loc + tan(angle[i]) * y_loc, 1);

}

}

(Note: I model the flash observation with a 1 meter standard deviation, because Stan requires me to give a distribution for flashes[i]).

Then I call the model from R:

n_flashes <- 50

loc <- c(0, 100)

angles <- runif(n_flashes, -pi/2, pi/2)

angles_x <- loc[2] * tan(angles)

flashes <- loc[1] + angles_x

stan_input <- list()

stan_input$flashes <- flashes

stan_input$N <- n_flashes

fit <- stan("lighthouse.stan", data = stan_input)

But this model has large Rhat's. How can I improve this model to prevent this? How can I model the angles in stan? How would you model the location of a lighthouse based on the flashes observed along the coast line?