I have been studying LSTMs for a while, and I understand at a high level how everything works. However, when I went to implement them using TensorFlow, I noticed that `BasicLSTMCell` requires a number-of-units parameter (i.e. `num_units`).

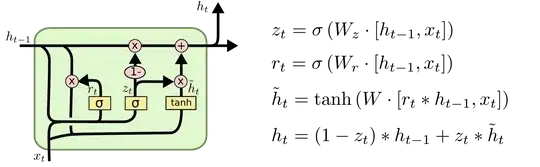
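For reference, this is the standard LSTM update without peephole connections, which is what `BasicLSTMCell` is documented to compute, with the dimensions written out. Here d is the input size and n stands for `num_units`. The gate names and the i, g, f, o ordering are my own notation. TensorFlow also adds a constant `forget_bias` (default 1.0) to f_t before the sigmoid, which I've left out.

$$
\begin{aligned}
\begin{bmatrix} i_t \\ g_t \\ f_t \\ o_t \end{bmatrix} &= W \begin{bmatrix} x_t \\ h_{t-1} \end{bmatrix} + b,
\qquad W \in \mathbb{R}^{4n \times (d+n)},\; b \in \mathbb{R}^{4n} \\
c_t &= \sigma(f_t) \odot c_{t-1} + \sigma(i_t) \odot \tanh(g_t) \\
h_t &= \sigma(o_t) \odot \tanh(c_t), \qquad x_t \in \mathbb{R}^{d},\; h_t, c_t \in \mathbb{R}^{n}
\end{aligned}
$$

Written this way, n fixes the length of h_t and c_t. The weights add up to 4n(d + n + 1) trainable parameters, and that count does not depend on the sequence length.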

From this very thorough explanation of LSTMs, I've gathered that a single LSTM unit is one of the following

which is actually a GRU unit.

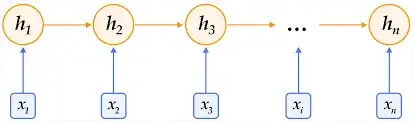

I assume that the `num_units` parameter of `BasicLSTMCell` refers to how many of these units we want to hook up to each other in a layer.
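To make this concrete, here is a plain-Python sketch (not TensorFlow code) of one LSTM cell unrolled over a short sequence. It assumes the standard non-peephole LSTM equations. `init_params`, `lstm_step` and `run_cell` are hypothetical helpers, and the gate ordering is arbitrary. In this sketch `num_units` is the width of the hidden and cell states: every time step emits a vector of `num_units` values, and the same weights are reused at each step.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_params(input_size, num_units, seed=0):
    """Hypothetical helper: 4 gates x num_units rows, each row sees [x, h_prev]."""
    rng = random.Random(seed)
    rows = 4 * num_units
    cols = input_size + num_units
    W = [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]
    b = [0.0] * rows
    return W, b


def lstm_step(x, h, c, W, b, num_units):
    """One time step of a single cell holding num_units parallel units."""
    z = x + h  # list concatenation: [x_t, h_{t-1}]
    pre = [sum(w * v for w, v in zip(row, z)) + bias for row, bias in zip(W, b)]
    n = num_units
    # Split the 4n pre-activations into the four gates (ordering is arbitrary here).
    i, g, f, o = pre[:n], pre[n:2 * n], pre[2 * n:3 * n], pre[3 * n:]
    new_c = [sigmoid(f[k]) * c[k] + sigmoid(i[k]) * math.tanh(g[k]) for k in range(n)]
    new_h = [sigmoid(o[k]) * math.tanh(new_c[k]) for k in range(n)]
    return new_h, new_c


def run_cell(inputs, num_units, seed=0):
    """Unroll the same cell over a sequence; weights are shared across time steps."""
    W, b = init_params(len(inputs[0]), num_units, seed)
    h = [0.0] * num_units
    c = [0.0] * num_units
    outputs = []
    for x in inputs:
        h, c = lstm_step(x, h, c, W, b, num_units)
        outputs.append(h)
    return outputs, (W, b)


if __name__ == "__main__":
    sequence = [[0.5, -1.0, 0.25]] * 5  # 5 time steps, input size 3
    outputs, (W, b) = run_cell(sequence, num_units=4)
    print(len(outputs), len(outputs[0]))  # 5 4
    print(len(W) * len(W[0]) + len(b))  # 128 == 4 * 4 * (3 + 4 + 1)
```

In this sketch, making the sequence longer only adds more calls to `lstm_step`. It does not add units or weights, while raising `num_units` widens every gate.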

That leaves the question: what is a "cell" in this context? Is a "cell" equivalent to a layer in a normal feed-forward neural network?