Apologies, this question is quite long.

I am trying to implement a paper on optimising multi-layer LSTMs. The optimisation process works as follows:

First, I wrote sequential code for an LSTM network. However, I did not make it multi-layer, as I was not aware of the concept at the time.

I implemented the basic optimisations, but when I reached the later steps, I found that they require the concept of hidden layers.

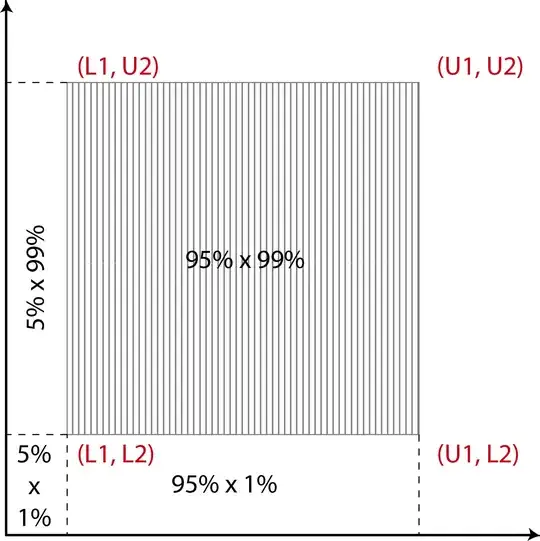

Below is my current understanding of hidden layers, shown in a diagram I drew. Is my understanding correct? If it is, what values should go in place of the '?'. I am a beginner with RNNs, so I am very grateful for your time.
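
For context, here is a minimal sketch of how a stacked (multi-layer) LSTM is usually described, so answers can point at exactly where my diagram differs. It is not the paper's code: the names `lstm_cell`, `init_layer` and `stacked_lstm`, the sizes and the random weights are all hypothetical and chosen only for illustration. The assumption it encodes is that layer `l` at time `t` takes the hidden output of layer `l-1` at the same time step as its input, together with its own state `(h, c)` from time `t-1`.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def lstm_cell(x, h_prev, c_prev, W, b):
    """One LSTM step for one layer.
    W has 4*H rows (gates i, f, g, o), each of length len(x) + H."""
    H = len(h_prev)
    z = x + h_prev  # list concatenation: [x, h_prev]
    pre = [sum(w * v for w, v in zip(row, z)) + bias for row, bias in zip(W, b)]
    i = [sigmoid(p) for p in pre[0:H]]            # input gate
    f = [sigmoid(p) for p in pre[H:2 * H]]        # forget gate
    g = [math.tanh(p) for p in pre[2 * H:3 * H]]  # candidate cell values
    o = [sigmoid(p) for p in pre[3 * H:4 * H]]    # output gate
    c = [f[k] * c_prev[k] + i[k] * g[k] for k in range(H)]
    h = [o[k] * math.tanh(c[k]) for k in range(H)]
    return h, c

def init_layer(input_size, hidden_size, rng):
    # Hypothetical small random weights, zero biases.
    W = [[rng.uniform(-0.1, 0.1) for _ in range(input_size + hidden_size)]
         for _ in range(4 * hidden_size)]
    b = [0.0] * (4 * hidden_size)
    return W, b

def stacked_lstm(xs, layers, hidden_size):
    # Each layer keeps its own hidden state h[l] and cell state c[l].
    h = [[0.0] * hidden_size for _ in layers]
    c = [[0.0] * hidden_size for _ in layers]
    outputs = []
    for x in xs:                      # loop over time steps
        inp = x
        for l, (W, b) in enumerate(layers):
            h[l], c[l] = lstm_cell(inp, h[l], c[l], W, b)
            inp = h[l]                # hidden output feeds the layer above
        outputs.append(inp)           # top layer's hidden state at this step
    return outputs, h, c

rng = random.Random(0)
input_size, hidden_size = 3, 4
layers = [init_layer(input_size, hidden_size, rng),   # layer 0 sees the raw input
          init_layer(hidden_size, hidden_size, rng)]  # layer 1 sees layer 0's h
xs = [[rng.uniform(-1, 1) for _ in range(input_size)] for _ in range(5)]
outputs, h, c = stacked_lstm(xs, layers, hidden_size)
```

In this sketch only the hidden state `h` moves upward between layers; the cell state `c` stays inside its own layer and only moves forward in time.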