it's hard to relate the equation h(x) to neural network.

linear regression contains a vector to be optimized/learned which can be also viewed as a projection from the input to the output. This process is kind of like the connections in our brain then it is called neural network.

I didn't the see the neural cells network, there only an equation,

using equation could solve the application problem.

The cell also can be interpreted as the connection as the vector in the linear regression. Consider this, if a value in the vector makes a certain number in the input very large or vanishingly zero, then it just transmits or stops the information from the one side to the other.

But how to go further to the neural network?

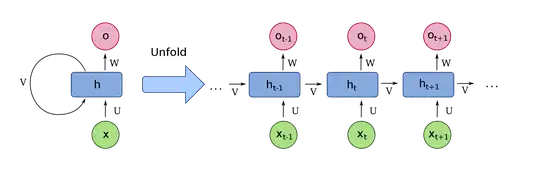

RNN is a neural network much more complex than linear regression, because it contains many gates(non-linear transformation like tanh, also called activation function) and linear transformations. It also takes the information from the previous step into consideration.

Let's unroll the RNN as requested in the comments:

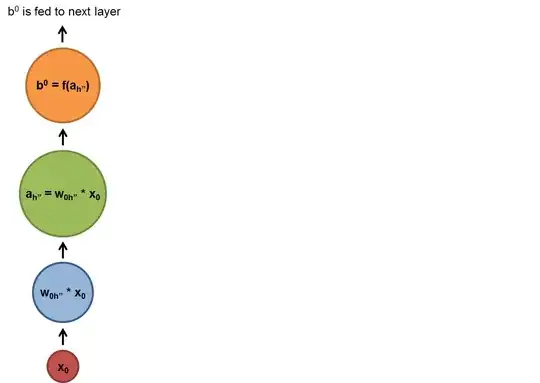

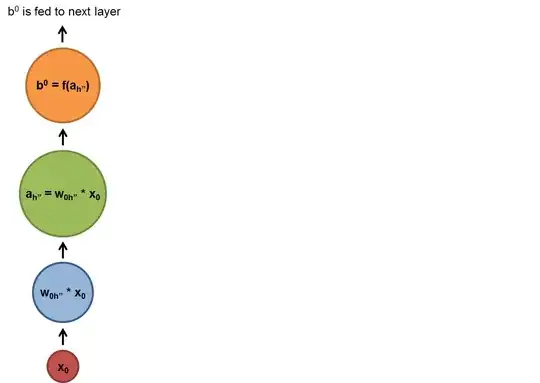

As shown below, the blue and green circles are linear or non-linear transformations(actually activation or activation functions combined after linear transformation which is normally matrix multiplications for batched cases) and the f functions are some non-linear transformations. After we process the $x_i$ we put the output(with the normal input of the step $x_{i+1}$) to the cell in the next step $x_{i+1}$ and apply a non-linear transform to them and get the output of the step $x_{i+1}$ and so on.

Simplified illustration from here: https://deeplearning4j.org/lstm.html

Some shameless recommendations you may need:

Structure of Recurrent Neural Network (LSTM, GRU)

Is anyone stacking LSTM and GRU cells together and why?

Understanding LSTM units vs. cells