There are some strong voices in the econometrics community against the validity of the Ljung-Box $Q$-statistic for testing for autocorrelation based on the residuals from an autoregressive model (i.e. with lagged dependent variables in the regressor matrix); see particularly Maddala (2001), "Introduction to Econometrics" (3rd edition), ch. 6.7 and ch. 13.5, p. 528. Maddala literally laments the widespread use of this test, and instead considers the "Lagrange Multiplier" test of Breusch and Godfrey to be the appropriate one.

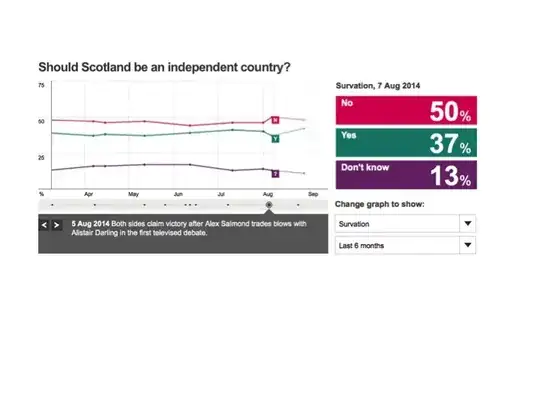

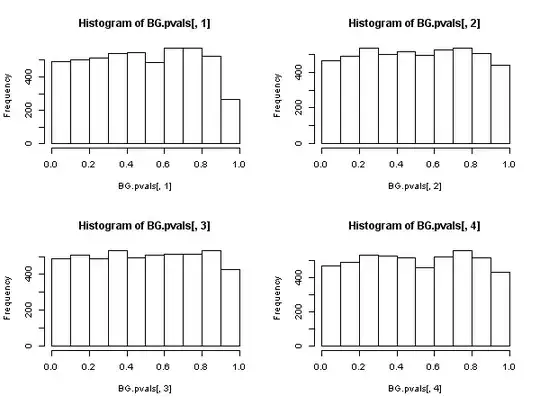

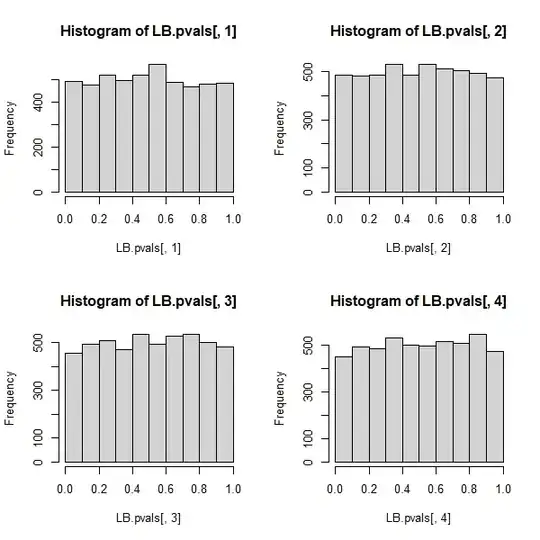

Maddala's argument against the Ljung-Box test is the same as the one raised against another omnipresent autocorrelation test, the Durbin-Watson test: with lagged dependent variables in the regressor matrix, the test is biased in favor of maintaining the null hypothesis of no autocorrelation (the Monte Carlo results obtained in @javlacalle's answer allude to this fact). Maddala also mentions the low power of the test; see for example Davies, N., & Newbold, P. (1979). Some power studies of a portmanteau test of time series model specification. Biometrika, 66(1), 153-155.

Hayashi (2000), ch. 2.10 "Testing for Serial Correlation", presents a unified theoretical analysis and, I believe, clarifies the matter. Hayashi starts from zero:

For the Ljung-Box $Q$-statistic to be asymptotically distributed as a chi-square, it must be the case that the process $\{z_t\}$ (whatever $z$ represents) whose sample autocorrelations we feed into the statistic is, under the null hypothesis of no autocorrelation, a martingale-difference sequence, i.e. that it satisfies

$$E(z_t \mid z_{t-1}, z_{t-2},...) = 0$$

and also it exhibits "own" conditional homoskedasticity

$$E(z^2_t \mid z_{t-1}, z_{t-2},...) = \sigma^2 >0$$

Under these conditions the Ljung-Box $Q$-statistic (which is a corrected-for-finite-samples variant of the original Box-Pierce $Q$-statistic), has asymptotically a chi-squared distribution, and its use has asymptotic justification.
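As a minimal sketch of the two statistics just mentioned, the snippet below computes the Box-Pierce statistic $Q_{BP} = n\sum_{j=1}^{m}\hat\rho_j^2$ and the Ljung-Box statistic $Q_{LB} = n(n+2)\sum_{j=1}^{m}\hat\rho_j^2/(n-j)$ from the sample autocorrelations of a series. The function names, the choice of $m=5$ lags, and the simulated i.i.d. Gaussian input are illustrative assumptions, not something taken from Hayashi.

```python
import random


def sample_autocorrelations(z, max_lag):
    """Sample autocorrelations rho_1, ..., rho_max_lag of the series z."""
    n = len(z)
    mean = sum(z) / n
    d = [v - mean for v in z]
    gamma0 = sum(v * v for v in d) / n
    return [
        sum(d[t] * d[t - j] for t in range(j, n)) / n / gamma0
        for j in range(1, max_lag + 1)
    ]


def box_pierce_q(z, max_lag):
    """Box-Pierce Q: n times the sum of squared sample autocorrelations."""
    n = len(z)
    return n * sum(r * r for r in sample_autocorrelations(z, max_lag))


def ljung_box_q(z, max_lag):
    """Ljung-Box Q: Box-Pierce with the (n + 2) / (n - j) finite-sample weights."""
    n = len(z)
    rho = sample_autocorrelations(z, max_lag)
    return n * (n + 2) * sum(r * r / (n - j) for j, r in enumerate(rho, start=1))


# Illustrative input: an i.i.d. series, for which the conditions above hold.
random.seed(0)
z = [random.gauss(0.0, 1.0) for _ in range(500)]
q_bp = box_pierce_q(z, 5)
q_lb = ljung_box_q(z, 5)
print(q_bp, q_lb)
```

Because each weight $(n+2)/(n-j)$ exceeds one, the Ljung-Box value is never smaller than the Box-Pierce value computed from the same autocorrelations; the two coincide asymptotically.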

Assume now that we have specified an autoregressive model (that perhaps includes also independent regressors in addition to lagged dependent variables), say

$$y_t = \mathbf x_t'\beta + \phi(L)y_t + u_t$$

where $\phi(L)$ is a polynomial in the lag operator, and we want to test for serial correlation by using the residuals of the estimation. So here $z_t \equiv \hat u_t$.

Hayashi shows that in order for the Ljung-Box $Q$-statistic based on the sample autocorrelations of the residuals to have an asymptotic chi-square distribution under the null hypothesis of no autocorrelation, it must be the case that all regressors are "strictly exogenous" to the error term in the following sense:

$$E(\mathbf x_t\cdot u_s) = 0 ,\;\; E(y_t\cdot u_s)=0 \;\;\forall t,s$$

The "for all $t,s$" is the crucial requirement here, the one that reflects strict exogeneity. And it does not hold when lagged dependent variables exist in the regressor matrix. This is easily seen: set $s= t-1$ and then

$$E[y_t u_{t-1}] = E[(\mathbf x_t'\beta + \phi(L)y_t + u_t)u_{t-1}] =$$

$$ E[\mathbf x_t'\beta \cdot u_{t-1}]+ E[\phi(L)y_t \cdot u_{t-1}]+E[u_t \cdot u_{t-1}] \neq 0 $$

even if the $X$'s are independent of the error term, and even if the error term has no-autocorrelation: the term $E[\phi(L)y_t \cdot u_{t-1}]$ is not zero.
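To make this concrete, consider as an illustrative special case (my own choice, not Hayashi's exposition) the stationary AR(1) model $y_t = \phi y_{t-1} + u_t$ with $|\phi|<1$, no $x$-regressors, and white-noise errors with variance $\sigma^2$. Since $y_{t-2}$ depends only on $u_{t-2}, u_{t-3}, \dots$, it is uncorrelated with $u_{t-1}$, so

$$E[y_{t-1} u_{t-1}] = \phi\, E[y_{t-2} u_{t-1}] + E[u_{t-1}^2] = \sigma^2$$

and therefore

$$E[y_t u_{t-1}] = \phi\, E[y_{t-1} u_{t-1}] + E[u_t u_{t-1}] = \phi\,\sigma^2 \neq 0 \quad \text{whenever } \phi \neq 0$$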

But this proves that the Ljung-Box $Q$ statistic is not valid in an autoregressive model, because it cannot be said to have an asymptotic chi-square distribution under the null.
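A small Monte Carlo sketch can illustrate the point. It simulates an AR(1) process with white-noise errors (so the null is true), estimates the AR coefficient by least squares, computes the Ljung-Box $Q$ at lag 1 from the residuals, and compares it with the 5% critical value of a chi-square(1). The sample size, the value $\phi = 0.5$, the number of replications, the single lag, and all function names are assumptions chosen for illustration. If the chi-square approximation were appropriate, the rejection rate should be close to 5%; in this setup I would expect it to fall well below that.

```python
import random

CHI2_1_CRIT_5PCT = 3.841  # 5% critical value of a chi-square with 1 d.o.f.


def ljung_box_q1(z):
    """Ljung-Box Q using only the first sample autocorrelation of z."""
    n = len(z)
    mean = sum(z) / n
    d = [v - mean for v in z]
    rho1 = sum(d[t] * d[t - 1] for t in range(1, n)) / sum(v * v for v in d)
    return n * (n + 2) * rho1 * rho1 / (n - 1)


def ar1_residuals(y):
    """Least-squares residuals from regressing y_t on y_{t-1} (no constant)."""
    num = sum(y[t] * y[t - 1] for t in range(1, len(y)))
    den = sum(y[t - 1] ** 2 for t in range(1, len(y)))
    phi_hat = num / den
    return [y[t] - phi_hat * y[t - 1] for t in range(1, len(y))]


def rejection_rate(n, phi, reps, seed, burn_in=50):
    """Share of replications in which Q exceeds the chi-square(1) critical value."""
    rng = random.Random(seed)
    rejections = 0
    for _rep in range(reps):
        y = [0.0]
        for _step in range(n + burn_in):
            y.append(phi * y[-1] + rng.gauss(0.0, 1.0))
        y = y[burn_in:]  # drop the start-up observations
        if ljung_box_q1(ar1_residuals(y)) > CHI2_1_CRIT_5PCT:
            rejections += 1
    return rejections / reps


rate = rejection_rate(n=200, phi=0.5, reps=500, seed=0)
print("empirical rejection rate at nominal 5%:", rate)
```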

Assume now that a weaker condition than strict exogeneity is satisfied, namely that

$$E(u_t \mid \mathbf x_t, \mathbf x_{t-1},...,\phi(L)y_t, u_{t-1}, u_{t-2},...) = 0$$

The strength of this condition is "in between" strict exogeneity and orthogonality. Under the null of no autocorrelation of the error term, this condition is "automatically" satisfied by an autoregressive model, with respect to the lagged dependent variables (for the $X$'s it must be separately assumed of course).

Then there exists another statistic based on the residual sample autocorrelations (not the Ljung-Box one) that does have an asymptotic chi-square distribution under the null. This other statistic can be calculated, as a convenience, by using the "auxiliary regression" route: regress the residuals $\{\hat u_t\}$ on the full regressor matrix and on past residuals (up to the lag we have used in the specification), obtain the uncentered $R^2$ from this auxiliary regression and multiply it by the sample size.

This statistic is used in what we call the "Breusch-Godfrey test for serial correlation".
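The auxiliary-regression route described above can be sketched as follows. This is a minimal illustration, not a reference implementation: the helper names are hypothetical, the data are simulated from an AR(1) with either white-noise or AR(1) errors, and pre-sample residuals are set to zero (one common convention, assumed here). The statistic $n R^2_{uc}$ is then compared with a chi-square whose degrees of freedom equal the number of lagged residuals included.

```python
import random


def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def ols_residuals(X, y):
    """Residuals from the least-squares regression of y on the columns of X."""
    k = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(k)]
    beta = solve(xtx, xty)
    return [yi - sum(b * xi for b, xi in zip(beta, row)) for row, yi in zip(X, y)]


def breusch_godfrey_stat(X, y, p):
    """n times the uncentered R^2 of residuals on X and p lagged residuals."""
    u = ols_residuals(X, y)
    # Augment each regressor row with lagged residuals; pre-sample values are 0.
    Z = [
        row + [u[t - j] if t - j >= 0 else 0.0 for j in range(1, p + 1)]
        for t, row in enumerate(X)
    ]
    e = ols_residuals(Z, u)
    r2_uncentered = 1.0 - sum(v * v for v in e) / sum(v * v for v in u)
    return len(u) * r2_uncentered


def simulate(n, phi, rho, seed):
    """y_t = phi * y_{t-1} + u_t with errors u_t = rho * u_{t-1} + e_t."""
    rng = random.Random(seed)
    y_prev, u_prev, ys = 0.0, 0.0, []
    for _ in range(n + 1):
        u = rho * u_prev + rng.gauss(0.0, 1.0)
        y_prev = phi * y_prev + u
        u_prev = u
        ys.append(y_prev)
    return ys


def bg_for_series(ys, p=1):
    """Fit y_t on a constant and y_{t-1}, then compute the statistic."""
    X = [[1.0, ys[t - 1]] for t in range(1, len(ys))]
    return breusch_godfrey_stat(X, ys[1:], p)


stat_null = bg_for_series(simulate(1000, 0.5, 0.0, seed=1))  # no autocorrelation
stat_alt = bg_for_series(simulate(1000, 0.5, 0.8, seed=1))   # autocorrelated errors
print(stat_null, stat_alt)
```

With one lagged residual, the 5% critical value of the chi-square(1) is about 3.84; in this sketch the statistic under autocorrelated errors should lie far above it.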

It appears then that, when the regressors include lagged dependent variables (and so in all cases of autoregressive models also), the Ljung-Box test should be abandoned in favor of the Breusch-Godfrey LM test, not because "it performs worse", but because it does not possess asymptotic justification. Quite an impressive result, especially judging from the ubiquitous presence and application of the former.

UPDATE: Responding to doubts raised in the comments as to whether all the above apply also to "pure" time series models or not (i.e. without "$x$"-regressors), I have posted a detailed examination for the AR(1) model, in https://stats.stackexchange.com/a/205262/28746 .