$R^2$ compares the fit of the chosen model with that of a horizontal straight line at the mean of $Y$ (the null hypothesis). If the chosen model fits worse than that horizontal line, then $R^2$ is negative. Despite its name, $R^2$ is not always the square of anything, so it can have a negative value without violating any rules of math. This happens only when the chosen model does not follow the trend of the data.

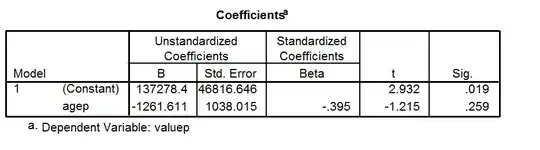

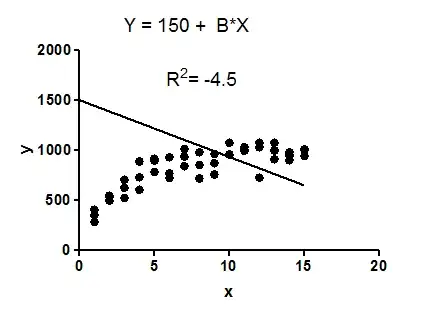

Example: fit data to a linear regression model constrained so that the $Y$ intercept must equal $1500$.

The model makes no sense at all given these data. It is clearly the wrong model, perhaps chosen by accident.

The fit of the model (a straight line constrained to go through the point (0,1500)) is worse than the fit of a horizontal line. Thus the sum-of-squares from the model $(SS_\text{res})$ is larger than the sum-of-squares from the horizontal line $(SS_\text{tot})$.

$R^2$ is computed as $1 - \frac{SS_\text{res}}{SS_\text{tot}}$.

(Here, $SS_\text{res}$ is the residual sum of squares: the sum of the squared vertical distances of the points from the model.)
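Written out, the two sums of squares can be sketched as follows. The symbols $y_i$ (observed values), $\hat{y}_i$ (values predicted by the chosen model), and $\bar{y}$ (the mean of the observed values, which is the best-fit horizontal line) do not appear in the text above and are introduced here only for illustration.

$$
SS_\text{res} = \sum_i \left(y_i - \hat{y}_i\right)^2,
\qquad
SS_\text{tot} = \sum_i \left(y_i - \bar{y}\right)^2,
\qquad
R^2 = 1 - \frac{SS_\text{res}}{SS_\text{tot}}
$$

Nothing forces $SS_\text{res} \le SS_\text{tot}$ unless the model is free to reproduce the horizontal line, which is why the ratio can exceed 1.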

When $SS_\text{res}$ is greater than $SS_\text{tot}$, that equation computes a negative value for $R^2$.
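A minimal sketch of this calculation, assuming NumPy is available. The data values are made up for illustration and are not the data shown in the figures; the helper name `r_squared` is also hypothetical. With the intercept fixed at 1500, the least-squares slope has the closed form $\sum x_i (y_i - 1500) / \sum x_i^2$.

```python
import numpy as np


def r_squared(y, y_pred):
    """Return 1 - SS_res / SS_tot for observed y and model predictions y_pred."""
    ss_res = np.sum((y - y_pred) ** 2)        # scatter around the model
    ss_tot = np.sum((y - np.mean(y)) ** 2)    # scatter around the horizontal line at mean(y)
    return 1.0 - ss_res / ss_tot


# Hypothetical data (not taken from the article's figures).
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([10.0, 12.0, 15.0, 19.0, 22.0])

# Straight line forced through (0, 1500): only the slope is fitted.
intercept = 1500.0
slope = np.sum(x * (y - intercept)) / np.sum(x ** 2)
y_constrained = intercept + slope * x

r2_constrained = r_squared(y, y_constrained)
r2_horizontal = r_squared(y, np.full_like(y, np.mean(y)))

print("constrained fit R^2:", r2_constrained)  # far below zero for these data
print("horizontal line R^2:", r2_horizontal)   # zero by definition
```

The horizontal line is included only as a reference point: it scores exactly zero, so any model that scores below zero is fitting worse than it.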

With linear regression with no constraints, $R^2$ cannot be negative and equals the square of the correlation coefficient, $r$. A negative $R^2$ is possible with linear regression only when the intercept or the slope is constrained so that the "best-fit" line (given the constraint) fits worse than a horizontal line. With nonlinear regression, $R^2$ can be negative whenever the best-fit model (given the chosen equation and its constraints, if any) fits the data worse than a horizontal line.
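The unconstrained case can be checked numerically with a short sketch, again assuming NumPy and using made-up data. It fits slope and intercept freely with `np.polyfit` and compares the resulting $R^2$ with the square of the correlation coefficient from `np.corrcoef`.

```python
import numpy as np

# Hypothetical data (not taken from the article's figures).
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([10.0, 12.0, 15.0, 19.0, 22.0])

# Ordinary least-squares line with both slope and intercept free.
slope, intercept = np.polyfit(x, y, 1)
y_fit = intercept + slope * x

ss_res = np.sum((y - y_fit) ** 2)
ss_tot = np.sum((y - np.mean(y)) ** 2)
r2 = 1.0 - ss_res / ss_tot

# Pearson correlation coefficient between x and y.
r = np.corrcoef(x, y)[0, 1]

print("R^2 from sums of squares:", r2)
print("r squared:               ", r ** 2)
```

The two printed values agree to floating-point precision, and neither can drop below zero, because the unconstrained fit can always fall back on a horizontal line (slope of zero) if nothing better is available.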

Bottom line: a negative $R^2$ is neither a mathematical impossibility nor the sign of a computer bug. It simply means that the chosen model (with its constraints) fits the data worse than a horizontal line would.