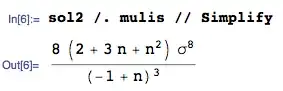

I'm analyzing a data set with three categorical variables: two are binary, and the third takes $5$ possible values. To look for a relationship (if any) between the two binary variables, setting the third aside for now, I fit a logistic regression (I'm working in R, so plugging it all in isn't too bad). I then stratified by the $5$-category variable: I split the data set into $5$ parts and ran the same regression on each part separately. (For clarity, this $5$-level variable is the subjects' income quintile.) The results of my testing are shown in the attached image.

R reports the null deviance when you fit a logistic regression, and from what I understand, that is typically denoted $G^2$. I also ran $\chi^2$ tests of independence on each of my contingency tables (I have $6$: one for the total population, and one for each income quintile). I suppose my question is this: if my $\chi^2$ statistic is above the critical value at my chosen significance level, so that I should reject the null hypothesis of independence, does my $G^2$ lead to the same conclusion? I'm rather new to deviance calculations (I've seen $\chi^2$ loads before, but never $G^2$, or logistic regressions for that matter), so a bit of clarification on the relationship between these two statistics would be sincerely appreciated.
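To make the comparison concrete, here is a minimal sketch of the two statistics as I understand the textbook definitions for a contingency table with observed counts $O_{ij}$ and expected counts $E_{ij}$ under independence: Pearson's $\chi^2 = \sum (O_{ij} - E_{ij})^2 / E_{ij}$ and the likelihood-ratio statistic $G^2 = 2 \sum O_{ij} \ln(O_{ij} / E_{ij})$. The counts are made up for illustration (they are not my data), the function name is my own, and I've written it in plain Python rather than R only so that it runs without any packages. How these quantities map onto the deviances R prints is part of what I'm unsure about.

```python
import math


def pearson_and_g2(table):
    """Return (chi2, g2) for a two-way contingency table of counts.

    Expected counts are computed under independence:
    E_ij = (row i total) * (column j total) / n.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)

    chi2 = 0.0
    g2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
            # A cell with zero observed count contributes 0 to G^2.
            if observed > 0:
                g2 += 2.0 * observed * math.log(observed / expected)
    return chi2, g2


# Hypothetical 2x2 table: rows = binary variable A, columns = binary variable B.
hypothetical_counts = [[30, 20], [20, 30]]
chi2, g2 = pearson_and_g2(hypothetical_counts)
print(f"Pearson chi2 = {chi2:.3f}, G2 = {g2:.3f}")
```

On this toy table the two values come out close to each other but not identical, which is what prompted the question of whether they always point to the same conclusion.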

Additionally (and separately), my data seem to suggest that at the aggregate level there is some dependence between the two variables of interest. However, when I inspect it by income group, this relationship seems to be lost or at least muddled (for small enough $\alpha$). I'm familiar with the concept of Simpson's Paradox, but this seems slightly different from that. Is there a name for what I've encountered here? Any suggestions/information/references would be immensely appreciated. Cheers.