I am using the UCBAdmissions dataset and glm from R to check for Simpson's paradox. I am aware that with data that I have, it is easier to visualise the paradox, but I am trying to inspect it via a Poisson/Log-linear model too.

My attempt

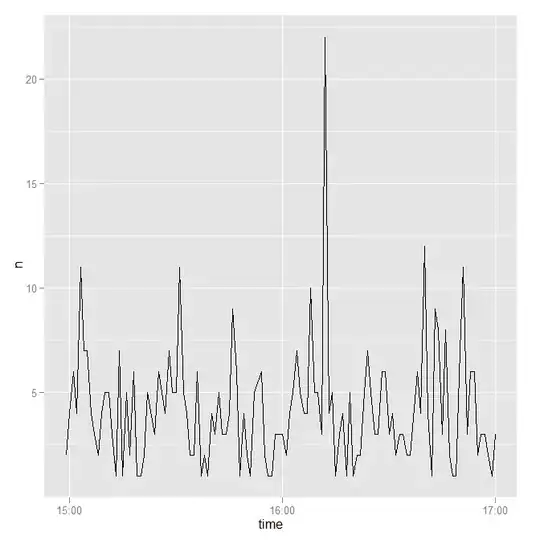

To visualise this is easy:

library(broom)

ucb_tidy <- tidy(UCBAdmissions) %>% group_by(Gender, Dept) %>% mutate(prop = n/sum(n))

ggplot(ucb_tidy, aes(x=Gender, y=prop, fill = Admit)) +

ggtitle("Acceptance Rate Split by Gender & Department") +

facet_wrap(~ Dept, nrow = 2) +

guides(fill = guide_legend(reverse = TRUE)) +

geom_bar(stat = "identity") +

xlab("Gender") + ylab("% of Applicants") +

scale_y_continuous(labels = percent_format())

But to check and show this with a Poisson model, I tried the following:

# Postulate that Gender and Admission are conditionally independent, given Dept.

cond_ind1 <- glm(n ~ (Gender + Admit) * Dept, family = poisson, data = ucb_tidy)

summary(cond_ind1)

Call:

glm(formula = n ~ (Gender + Admit) * Dept, family = poisson,

data = ucb_tidy)

Deviance Residuals:

Min 1Q Median 3Q Max

-3.4776 -0.4144 0.0098 0.3089 2.2321

Coefficients:

Estimate Std. Error z value Pr(>|z|)

(Intercept) 6.27557 0.04248 147.744 < 2e-16 ***

GenderFemale -2.03325 0.10233 -19.870 < 2e-16 ***

AdmitRejected -0.59346 0.06838 -8.679 < 2e-16 ***

DeptB -0.40575 0.06770 -5.993 2.06e-09 ***

DeptC -1.53939 0.08305 -18.536 < 2e-16 ***

DeptD -1.32234 0.08159 -16.207 < 2e-16 ***

DeptE -2.40277 0.11014 -21.816 < 2e-16 ***

DeptF -3.09624 0.15756 -19.652 < 2e-16 ***

GenderFemale:DeptB -1.07581 0.22860 -4.706 2.52e-06 ***

GenderFemale:DeptC 2.63462 0.12343 21.345 < 2e-16 ***

GenderFemale:DeptD 1.92709 0.12464 15.461 < 2e-16 ***

GenderFemale:DeptE 2.75479 0.13510 20.391 < 2e-16 ***

GenderFemale:DeptF 1.94356 0.12683 15.325 < 2e-16 ***

AdmitRejected:DeptB 0.05059 0.10968 0.461 0.645

AdmitRejected:DeptC 1.20915 0.09726 12.432 < 2e-16 ***

AdmitRejected:DeptD 1.25833 0.10152 12.395 < 2e-16 ***

AdmitRejected:DeptE 1.68296 0.11733 14.343 < 2e-16 ***

AdmitRejected:DeptF 3.26911 0.16707 19.567 < 2e-16 ***

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

(Dispersion parameter for poisson family taken to be 1)

Null deviance: 2650.095 on 23 degrees of freedom

Residual deviance: 21.736 on 6 degrees of freedom

AIC: 216.8

Number of Fisher Scoring iterations: 4

This, however, is not a good fit if we use pchisq to check for goodness of fit, and does not directly show the effect of admission via departments, for sex.

I thought of another formulation:

# Postulate that Gender and Admission are jointly independent of Dept (or not)

joint_ind1 <- glm(n ~ Dept + (Gender*Admit), family = poisson, data = ucb_tidy)

summary(joint_ind1)

Call:

glm(formula = n ~ Dept + (Gender * Admit), family = poisson,

data = ucb_tidy)

Deviance Residuals:

Min 1Q Median 3Q Max

-19.7226 -7.8760 0.6475 7.1298 14.7146

Coefficients:

Estimate Std. Error z value Pr(>|z|)

(Intercept) 5.50922 0.04106 134.188 < 2e-16 ***

DeptB -0.46679 0.05274 -8.851 < 2e-16 ***

DeptC -0.01621 0.04649 -0.349 0.727355

DeptD -0.16384 0.04832 -3.391 0.000696 ***

DeptE -0.46850 0.05276 -8.879 < 2e-16 ***

DeptF -0.26752 0.04972 -5.380 7.44e-08 ***

GenderFemale -0.76584 0.05128 -14.933 < 2e-16 ***

AdmitRejected 0.22013 0.03879 5.675 1.38e-08 ***

GenderFemale:AdmitRejected 0.61035 0.06389 9.553 < 2e-16 ***

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

(Dispersion parameter for poisson family taken to be 1)

Null deviance: 2650.1 on 23 degrees of freedom

Residual deviance: 2004.2 on 15 degrees of freedom

AIC: 2181.3

Number of Fisher Scoring iterations: 5

This has an even poorer fit, and does not have the logical appeal as the conditionally independent model.

My question is:

- How can I study Simpson's paradox via the Poisson regression model? Would you recommend another model (e.g., binomial logistic regression)?