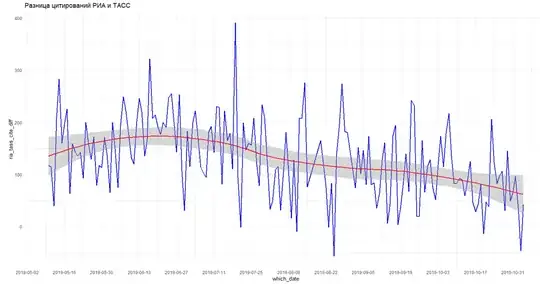

At a news agency, I want to understand whether the number of breaking news items influences the number of citations that other media make to the agency.

I do not track citations of individual news items, only the daily total of citations of the agency.

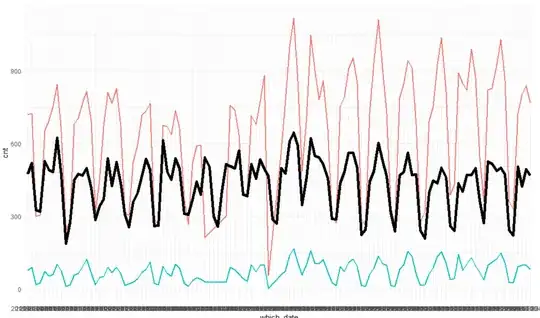

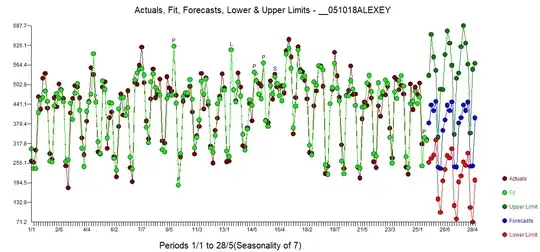

The red line is the number of regular news items, the green line is the number of breaking news items, and the thick black line is the number of citations.

I know the daily number of articles in which other media mention the news agency (cite_cnt). I also know the daily number of regular news items (news_cnt) and breaking news items (flash_cnt) that the agency published.

I build this linear model:

    tass_lm <- lm(
      cite_cnt ~ news_cnt + flash_cnt,
      data = dat_tass_cast
    )

    summary(tass_lm)

    Call:
    lm(formula = cite_cnt ~ news_cnt + flash_cnt, data = dat_tass_cast)

    Residuals:
         Min       1Q   Median       3Q      Max
    -137.316  -42.530    0.271   32.947  228.625

    Coefficients:
                 Estimate Std. Error t value Pr(>|t|)
    (Intercept) 276.37487   17.96854  15.381  < 2e-16 ***
    news_cnt      0.09829    0.06547   1.501  0.13595
    flash_cnt     1.39011    0.42608   3.263  0.00145 **
    ---
    Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

    Residual standard error: 68.63 on 117 degrees of freedom
    Multiple R-squared: 0.6066, Adjusted R-squared: 0.5998
    F-statistic: 90.19 on 2 and 117 DF, p-value: < 2.2e-16

To my surprise, I found that the number of breaking news items is significantly associated with the number of citations. For the other news agencies I have data for, this coefficient is not significant.

So, on top of the average level and the effect of regular news, more breaking news is associated with a higher number of citations.
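
As a rough check on how precisely that coefficient is estimated, the 95% confidence interval can be recomputed from the estimate and standard error printed in the first summary. This is only a sketch based on the printed (rounded) numbers; calling `confint(tass_lm)` on the fitted model should give the same interval up to rounding.

```r
# Values copied from the printed summary(tass_lm) output
est <- 1.39011   # flash_cnt estimate
se  <- 0.42608   # its standard error
df  <- 117       # residual degrees of freedom

# Two-sided 95% interval based on the t distribution
t_crit <- qt(0.975, df)
ci <- c(lower = est - t_crit * se, upper = est + t_crit * se)
print(round(ci, 3))
```

The interval excludes zero but spans roughly 0.5 to 2.2 extra citations per breaking news item, so the size of the association is much less certain than its sign.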

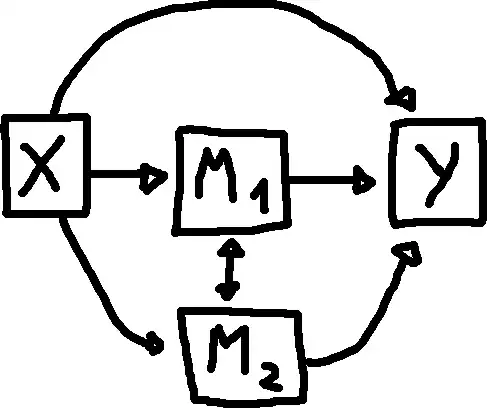

Q: I am inclined to conclude that 1) breaking news matters a lot if we want more citations, and 2) (this is my main question) increasing the number of breaking news items will increase the number of citations if all other factors (including those out of scope) do not change. Is such a causal inference valid in this case?
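
The concern behind this question can be illustrated with a small simulation in which breaking news has no effect at all on citations, yet the flash_cnt coefficient still comes out significant because an unobserved factor drives both. Everything below is synthetic: the variable `event_intensity` (how eventful a day is) and all numeric settings are hypothetical and are not estimated from the real data.

```r
set.seed(1)
n <- 120  # same number of days as in the fitted models

# Hypothetical unobserved confounder: how eventful each day is
event_intensity <- rnorm(n)

# Eventful days produce more breaking news ...
flash_cnt <- rpois(n, lambda = exp(3 + 0.5 * event_intensity))
news_cnt  <- rpois(n, lambda = 300)

# ... and more citations, but flash_cnt itself has NO effect on cite_cnt
cite_cnt <- round(400 + 60 * event_intensity + rnorm(n, sd = 30))

sim <- data.frame(cite_cnt, news_cnt, flash_cnt, event_intensity)

# Naive model omits the confounder; adjusted model includes it
naive    <- lm(cite_cnt ~ news_cnt + flash_cnt, data = sim)
adjusted <- lm(cite_cnt ~ news_cnt + flash_cnt + event_intensity, data = sim)

naive_coef <- coef(summary(naive))["flash_cnt", ]
adj_coef   <- coef(summary(adjusted))["flash_cnt", ]
print(rbind(naive = naive_coef, adjusted = adj_coef))
```

In this setup the naive model attributes the confounder's effect to flash_cnt, and the estimate shrinks toward zero once the confounder is included. With observational daily counts, a significant coefficient cannot by itself distinguish this situation from a genuine causal effect.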

Update:

    tass_lm <- lm(
      cite_cnt ~ as.factor(dweek) + news_cnt + flash_cnt,
      data = dat_tass_cast
    )

    summary(tass_lm)

    Call:
    lm(formula = cite_cnt ~ as.factor(dweek) + news_cnt + flash_cnt,
        data = dat_tass_cast)

    Residuals:
         Min       1Q   Median       3Q      Max
    -110.880  -37.185   -0.297   30.907  117.174

    Coefficients:
                       Estimate Std. Error t value Pr(>|t|)
    (Intercept)       283.66023   15.67226  18.100  < 2e-16 ***
    as.factor(dweek)2 146.71450   19.30685   7.599 1.02e-11 ***
    as.factor(dweek)3 163.97176   20.55983   7.975 1.49e-12 ***
    as.factor(dweek)4 162.46087   21.41195   7.587 1.08e-11 ***
    as.factor(dweek)5 170.88246   21.52676   7.938 1.80e-12 ***
    as.factor(dweek)6 164.23197   19.08878   8.604 5.70e-14 ***
    as.factor(dweek)7   6.24548   16.87997   0.370    0.712
    news_cnt           -0.12054    0.05378  -2.241    0.027 *
    flash_cnt           1.56664    0.32715   4.789 5.22e-06 ***
    ---
    Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

    Residual standard error: 49.21 on 111 degrees of freedom
    Multiple R-squared: 0.8081, Adjusted R-squared: 0.7943
    F-statistic: 58.43 on 8 and 111 DF, p-value: < 2.2e-16
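
Whether the day-of-week factor earns its place can be checked with an F test for nested models. The sketch below reconstructs that test from the residual standard errors and degrees of freedom printed in the two summaries, so it is approximate because those values are rounded. If the first fit had been kept under a separate name (both are called `tass_lm` here), `anova()` on the two fitted models would compute the same comparison directly.

```r
# Values copied from the two printed lm summaries
rse_small <- 68.63; df_small <- 117   # model without day of week
rse_big   <- 49.21; df_big   <- 111   # model with as.factor(dweek)

# Residual sum of squares = (residual standard error)^2 * residual df
rss_small <- rse_small^2 * df_small
rss_big   <- rse_big^2 * df_big

# F statistic for the 6 extra day-of-week parameters
extra_df <- df_small - df_big
f_stat   <- ((rss_small - rss_big) / extra_df) / (rss_big / df_big)
p_value  <- pf(f_stat, extra_df, df_big, lower.tail = FALSE)

print(c(F = f_stat, p = p_value))
```

On the printed numbers the F statistic is about 19 on 6 and 111 degrees of freedom, so the weekday pattern accounts for a substantial share of the variation that the first model left unexplained.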

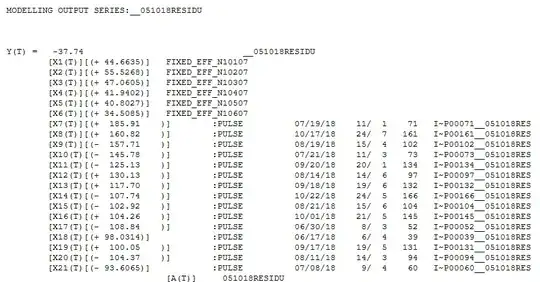

Update 2 (GLM):

Using a GLM with the Poisson family resulted in an even higher test statistic (a z value here rather than a t value) for the breaking news count.

    tass_glm <- glm(
      cite_cnt ~ as.factor(dweek) + news_cnt + flash_cnt,
      data = dat_tass_cast,
      family = poisson(link = "log")
    )

    summary(tass_glm)

    Call:
    glm(formula = cite_cnt ~ as.factor(dweek) + news_cnt + flash_cnt,
        family = poisson(link = "log"), data = dat_tass_cast)

    Deviance Residuals:
        Min       1Q   Median       3Q      Max
    -6.0270  -1.8957  -0.0114   1.5932   6.7059

    Coefficients:
                        Estimate Std. Error z value Pr(>|z|)
    (Intercept)        5.647e+00  1.754e-02 322.026  < 2e-16 ***
    as.factor(dweek)2  4.148e-01  2.046e-02  20.277  < 2e-16 ***
    as.factor(dweek)3  4.535e-01  2.152e-02  21.073  < 2e-16 ***
    as.factor(dweek)4  4.468e-01  2.213e-02  20.189  < 2e-16 ***
    as.factor(dweek)5  4.620e-01  2.211e-02  20.894  < 2e-16 ***
    as.factor(dweek)6  4.539e-01  2.009e-02  22.590  < 2e-16 ***
    as.factor(dweek)7  2.035e-02  2.023e-02   1.006    0.314
    news_cnt          -2.474e-04  5.234e-05  -4.727 2.28e-06 ***
    flash_cnt          3.290e-03  3.094e-04  10.632  < 2e-16 ***
    ---
    Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

    (Dispersion parameter for poisson family taken to be 1)

        Null deviance: 3462.96 on 119 degrees of freedom
    Residual deviance: 676.84 on 111 degrees of freedom
    AIC: 1639.5

    Number of Fisher Scoring iterations: 4
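
A caveat on the larger z value: the Poisson family fixes the dispersion at 1, while the residual deviance in this output is much larger than its degrees of freedom, which suggests overdispersion and therefore understated standard errors. The sketch below is a rough calculation from the printed numbers only. It uses deviance / df as the dispersion estimate, whereas refitting with `family = quasipoisson` would use the Pearson statistic, so a refit may give somewhat different values.

```r
# Values copied from the printed summary(tass_glm) output
resid_deviance <- 676.84
resid_df       <- 111
flash_est      <- 3.290e-03
flash_z        <- 10.632

# Rough dispersion estimate (values well above 1 suggest overdispersion)
dispersion <- resid_deviance / resid_df

# Standard errors scale with sqrt(dispersion), so z values shrink by that factor
flash_z_adj <- flash_z / sqrt(dispersion)

# With a log link, exp(coefficient) is the multiplicative change in expected
# citations per additional breaking news item
rate_ratio <- exp(flash_est)

print(c(dispersion = dispersion, flash_z_adj = flash_z_adj, rate_ratio = rate_ratio))
```

On these numbers the dispersion is about 6 and the adjusted statistic is about 4.3, close to the t value of 4.789 from the linear model with day-of-week effects, so the Poisson fit may not be stronger evidence than the linear one. The rate ratio corresponds to roughly 0.33% more expected citations per additional breaking news item.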