First, note that your understanding of PCA is slightly off. PCA doesn't "group" variables into principal components. Each principal component is, rather, a new variable (a "new metabolite") of the same length as each of your original variables (in your case, 16).

It's true that each principal component can represent a group of original metabolites, but it's more complicated and sophisticated than that. Each metabolite can actually be correlated with multiple principal components, each a different amount. It could be true that a group of metabolites are purely represented principal component A and another group is purely represented by principal component B, but it is far more likely that each is correlated with multiple PCs, each to a different extent.

So think of each PC as a new metabolite (in PCA parlance we'd call it an "eigenmetabolite") that is representative of a general pattern across the metabolites. Some metabolites are highly representative of this pattern, while some aren't, and some share some properties with multiple patterns.

Now we come to your question. The way we bring in a response variable is to look at the relationship- for example, a correlation or linear regression- between each principal component (each eigenmetabolite) and the response variable. Here is a neat little example in R code. (Note that I use the svd function rather than the pls package, as you might be using).

set.seed(1337)

center.rows = function(m) t(apply(m, 1, function(r) r - mean(r)))

response.var = rnorm(16, 0, 1)

relevant.metabolites = t(replicate(50, rnorm(16, response.var, 2)))

unrelated.metabolites = t(replicate(110, rnorm(16, 0, 2)))

metabolite.data = rbind(relevant.metabolites, unrelated.metabolites)

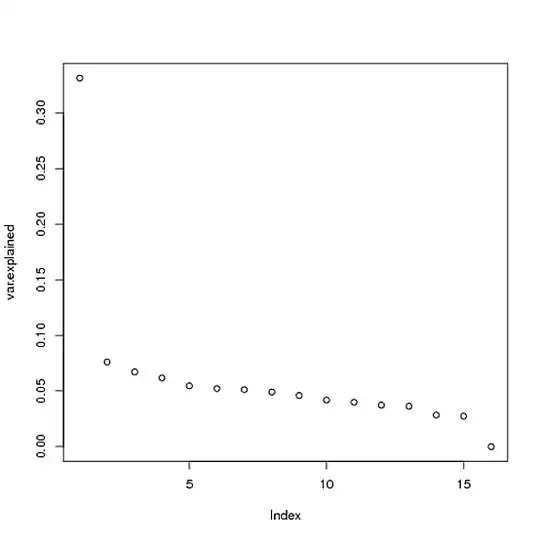

s = svd(center.rows(metabolite.data))

What I did is create a matrix of simulated metabolite data with 50 genes that are somewhat correlated with the response variable, and 110 genes that aren't. We can see that only one PC is significant, and it explains ~33% of the variance:

var.explained = s$d^2 / sum(s$d^2)

plot(var.explained)

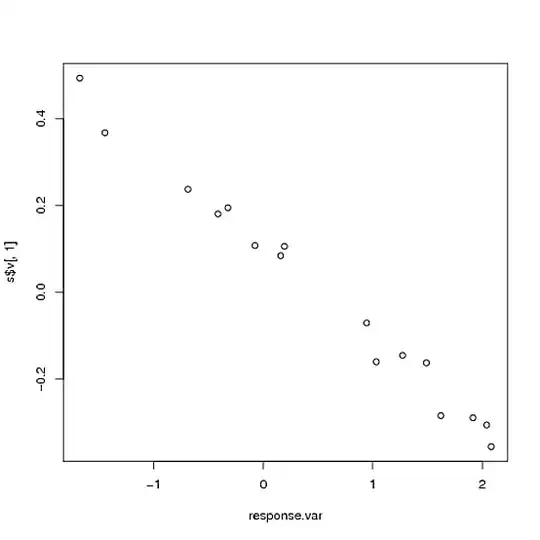

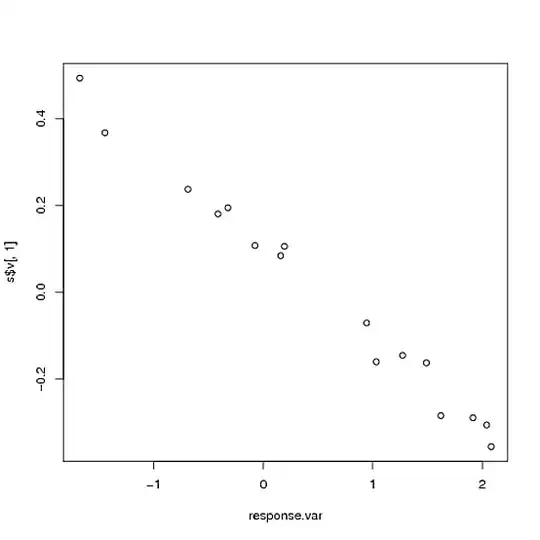

Now let's compare that PC to the response variable:

plot(response.var, s$v[, 1])

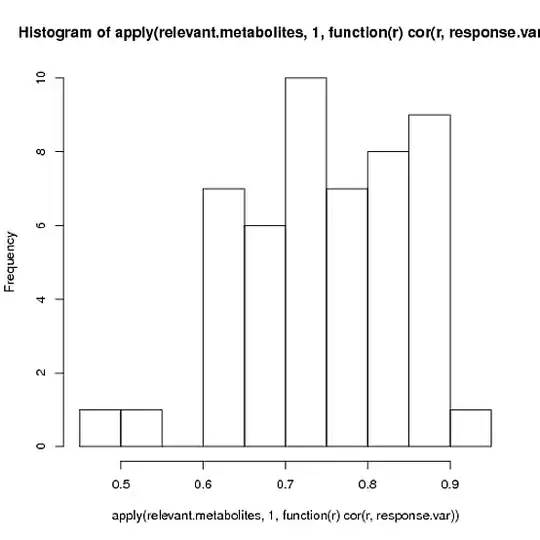

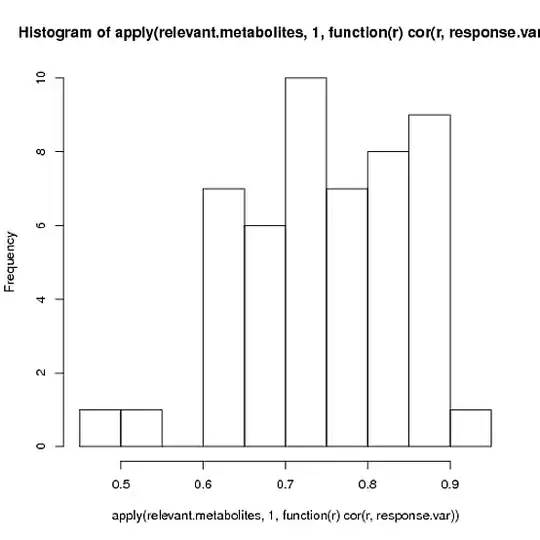

As you can see, the first PC almost perfectly recaptures the response variable that we used to create the matrix (correlation is -.993), even though we put a lot of extra noise in. (Don't worry that the correlation is negative- the direction is arbitrary). Indeed, this correlation with the "eigenmetabolite" is considerably greater than the correlation of the response variable to any of the individual significant genes:

hist(apply(relevant.metabolites, 1, function(r) cor(r, response.var)))

Of course, that's exactly why we're applying PCA in the first place. While the relationship between the response variable and any individual metabolite may be too weak to measure over the rest of the noise, when you combine many genes together you might find that a principal component captures that response well!