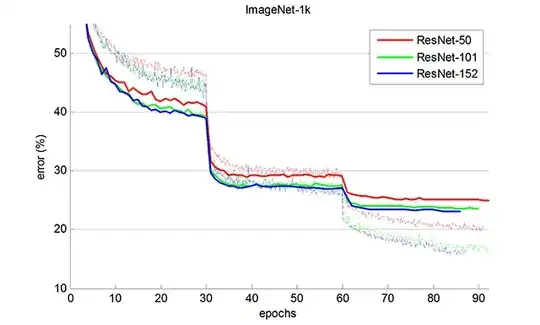

I've seen plots of test/training error suddenly dropping at certain epoch(s) a few times during the neural network training, and I wonder what causes these performance jumps:

This image is taken from Kaiming He's GitHub, but similar plots show up in many papers.