I am trying to understand U-Nets better, so I built a very shallow U-Net and trained it to denoise MNIST images (the training set is 90% of the whole dataset).

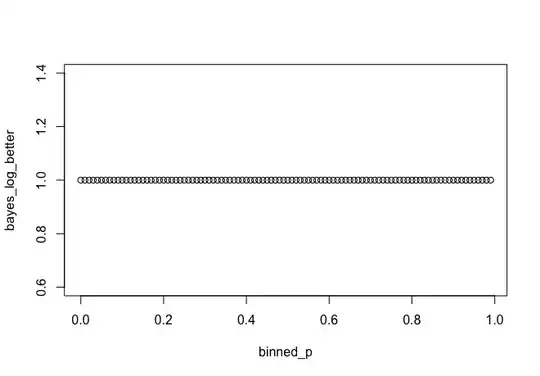

The loss curve I obtained was very strange, and I could not make sense of it:

The loss I am using is mean squared error, and the x-axis is in epochs.

My big problem with this loss curve is that if I had trained for only, say, 10 epochs, I would have reached a wrong conclusion about this architecture. So I guess my question is twofold:

- Is there an explanation for this weird loss curve?

- How can I avoid stopping too early in such situations (my validation loss follows exactly the same pattern), or even simply setting the number of epochs too low?
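To make the second point concrete, here is a minimal sketch of a patience-based stopping rule in plain Python. The loss values are made up for illustration (a flat plateau followed by a drop), not my actual numbers, and `stop_epoch`, `patience` and `min_delta` are hypothetical names, not any library's API.

```python
def stop_epoch(losses, patience, min_delta=0.0):
    """Return the 1-based epoch at which training would be stopped, or None."""
    best = float("inf")
    waited = 0  # epochs since the last improvement
    for epoch, loss in enumerate(losses, start=1):
        if loss < best - min_delta:
            best = loss
            waited = 0
        else:
            waited += 1
            if waited >= patience:
                return epoch
    return None


# Hypothetical validation losses: flat for 12 epochs, then a sudden drop.
val_losses = [0.11] * 12 + [0.05, 0.03, 0.02, 0.015]

print(stop_epoch(val_losses, patience=5))   # stops inside the plateau
print(stop_epoch(val_losses, patience=15))  # survives the plateau
```

With a short patience the rule fires while the loss is still flat, which is exactly the wrong conclusion I am worried about; the plateau length is not known in advance, so picking the patience is the hard part.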

Note that this is a different problem from the one laid out in this question, because in my case the loss curve is absolutely flat.

EDIT

After reviewing my architecture, I noticed that my final activation was a ReLU instead of a sigmoid. With a sigmoid, I don't have this behaviour, but imho the question still stands.
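One possible reason the final activation matters, which I have not verified on my actual network: if the pre-activation of a ReLU output is negative, the gradient of the MSE through that output is exactly zero, whereas a sigmoid still passes a nonzero gradient. A minimal sketch for a single output unit, with hypothetical values for the pre-activation `z` and the `target` pixel:

```python
import math


def relu(z):
    return max(0.0, z)


def relu_grad(z):
    return 1.0 if z > 0 else 0.0


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z):
    s = sigmoid(z)
    return s * (1.0 - s)


def mse_grad_wrt_preactivation(z, target, act, act_grad):
    # d/dz (act(z) - target)^2 = 2 * (act(z) - target) * act'(z)
    return 2.0 * (act(z) - target) * act_grad(z)


# Hypothetical values: a negative pre-activation and a bright target pixel.
z, target = -1.0, 1.0

g_relu = mse_grad_wrt_preactivation(z, target, relu, relu_grad)
g_sigmoid = mse_grad_wrt_preactivation(z, target, sigmoid, sigmoid_grad)

print("ReLU gradient:   ", g_relu)
print("Sigmoid gradient:", g_sigmoid)
```

If many output units start in that negative regime, it could produce a flat stretch until some of them become active, but this is only a guess at the mechanism.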