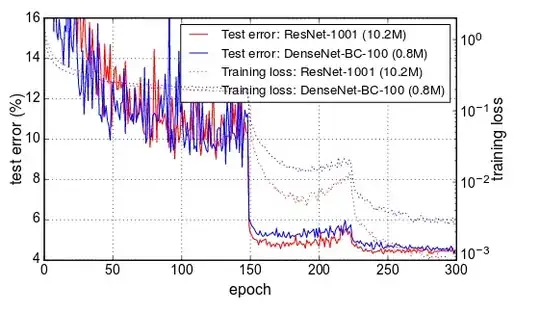

I was wondering the same thing. If the issue really is the learning rate schedule, doesn't that imply a huge opportunity to increase accuracy or speed up convergence by optimizing the schedule? It seems a bit crazy that the loss drops by a factor of 3 within one epoch just from lowering the learning rate.

> "we train models for 90 epochs with a batch size of 256. The learning rate is set to 0.1 initially, and is lowered by 10 times at epoch 30 and 60."
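The schedule in that quote is a plain step decay. Here is a minimal sketch in Python, assuming epochs are counted from 0 and the rate changes at the start of epochs 30 and 60. The function name `step_lr` and its parameter names are just for illustration.

```python
def step_lr(epoch: int, base_lr: float = 0.1,
            milestones: tuple = (30, 60), gamma: float = 0.1) -> float:
    """Step decay: start at base_lr and multiply by gamma at each milestone epoch reached."""
    drops = sum(1 for m in milestones if epoch >= m)
    return base_lr * gamma ** drops


# learning rate at a few points in a 90-epoch run
for epoch in (0, 29, 30, 59, 60, 89):
    print(epoch, step_lr(epoch))
```

In PyTorch the same schedule is usually expressed as `torch.optim.lr_scheduler.MultiStepLR(optimizer, milestones=[30, 60], gamma=0.1)`, with `scheduler.step()` called once per epoch.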

Did they just pick those numbers arbitrarily, or did they brute-force a search for the best schedule?

I guess the point is to have a higher learning rate at the beginning, since the weights are further from the optimum, and maybe the larger steps help avoid getting stuck in local minima early on. Has anyone seen any papers comparing different LR schedules on ImageNet using DenseNet or ResNet?

This paper, https://arxiv.org/pdf/1706.02677.pdf, shows the same drop, and yes, it lines up exactly with their learning rate drops at epochs 30 and 60.
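To see why the loss would drop right at the learning rate steps, here is a toy sketch under some loud assumptions: the "model" is just the quadratic loss 0.5·||w||² instead of a network, and minibatch noise is modeled as Gaussian noise added to the exact gradient. In this toy model, SGD at a fixed learning rate stops making progress and bounces around the minimum at a loss level roughly proportional to the learning rate, so each 10x cut lowers that floor and the per-epoch loss steps down at epochs 30 and 60. All names and parameter values are made up for illustration.

```python
import random


def toy_sgd_losses(epochs=90, steps_per_epoch=500, dim=10, noise_std=1.0, seed=0):
    """Noisy SGD on the toy loss 0.5 * ||w||^2 with the quoted step LR schedule.

    Returns the mean training loss of each epoch.
    """
    rng = random.Random(seed)
    w = [5.0] * dim  # start far from the minimum at w = 0
    losses = []
    for epoch in range(epochs):
        # step schedule from the quote: 0.1, divided by 10 at epochs 30 and 60
        lr = 0.1 * 0.1 ** sum(epoch >= m for m in (30, 60))
        total = 0.0
        for _ in range(steps_per_epoch):
            # exact gradient of 0.5 * w_i^2 is w_i; Gaussian noise stands in for minibatch noise
            w = [wi - lr * (wi + rng.gauss(0.0, noise_std)) for wi in w]
            total += 0.5 * sum(wi * wi for wi in w)
        losses.append(total / steps_per_epoch)
    return losses


losses = toy_sgd_losses()
for epoch in (28, 29, 30, 31, 58, 59, 60, 61):
    print(f"epoch {epoch}: mean loss {losses[epoch]:.5f}")
```

A real network's loss surface is obviously not a quadratic bowl, so this only illustrates the "noise floor set by the learning rate" effect, not how large the drop would be on ImageNet.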

Looking at this article: https://www.jeremyjordan.me/nn-learning-rate/

On the loss topology images, I guess the point is that the initial high learning rate finds the deepest valley, but the LR is too high to go very deep into it. When the LR is lowered, the optimizer can descend deeper into that valley, which produces the drop in loss that we see. It could be interesting to test a schedule that lowers the LR based on the per-epoch validation loss: e.g. if the slope of the validation loss has flattened out over the last x epochs, decrease the LR right away instead of running at the same LR for, say, 30 more epochs.
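The idea of lowering the LR once the validation loss flattens can be sketched roughly as below. Assumptions: "flattening" is measured as the least-squares slope of the validation loss over the last `window` epochs, compared against a hypothetical threshold `min_slope`, and the history is cleared after each drop so the new rate gets a full window before being judged. The class name, parameters, and the validation losses in the usage loop are all made up for illustration. PyTorch has a related built-in, `torch.optim.lr_scheduler.ReduceLROnPlateau`, which instead lowers the LR after `patience` epochs without improvement over the best value seen so far.

```python
class SlopeLRScheduler:
    """Hypothetical scheduler: multiply the LR by `factor` when validation loss stops falling.

    "Stops falling" means the least-squares slope of the last `window`
    validation losses is greater than -min_slope (flat or rising curve).
    `window` must be at least 2 so the slope is defined.
    """

    def __init__(self, lr=0.1, factor=0.1, window=5, min_slope=1e-3, min_lr=1e-5):
        self.lr = lr
        self.factor = factor
        self.window = window
        self.min_slope = min_slope
        self.min_lr = min_lr
        self.history = []

    @staticmethod
    def _slope(ys):
        """Least-squares slope of ys against x = 0, 1, ..., len(ys) - 1."""
        n = len(ys)
        x_mean = (n - 1) / 2
        y_mean = sum(ys) / n
        num = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(ys))
        den = sum((x - x_mean) ** 2 for x in range(n))
        return num / den

    def step(self, val_loss):
        """Record one epoch's validation loss and return the LR for the next epoch."""
        self.history.append(val_loss)
        recent = self.history[-self.window:]
        if len(recent) == self.window and self._slope(recent) > -self.min_slope:
            self.lr = max(self.lr * self.factor, self.min_lr)
            self.history.clear()  # give the new LR a full window before judging it
        return self.lr


# made-up validation losses that flatten out around 1.18
sched = SlopeLRScheduler(lr=0.1, factor=0.1, window=3, min_slope=0.01)
lrs = [sched.step(v) for v in [2.0, 1.5, 1.2, 1.19, 1.185, 1.18, 1.0, 0.9]]
print(lrs)
```

Fitting a slope over a window, rather than comparing against the best loss so far, is one way to express "the curve is flattening"; validation loss is noisy, so in practice `window` and `min_slope` would need tuning.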