How does the reparameterization trick for variational autoencoders (VAE) work? Is there an intuitive and easy explanation without simplifying the underlying math? And why do we need the 'trick'?

-

12One part of the answer is to notice that all Normal distributions are just scaled and translated versions of Normal(1, 0). To draw from Normal(mu, sigma) you can draw from Normal(1, 0), multiply by sigma (scale), and add mu (translate). – monk Jan 24 '18 at 23:53

-

3@monk: it should have been Normal(0,1) instead of (1,0) right or else multiplying and shifting would completely go hay wire! – Hossein Sep 30 '19 at 19:03

-

@Breeze Ha! Yes, of course, thanks. – monk Sep 30 '19 at 21:06

7 Answers

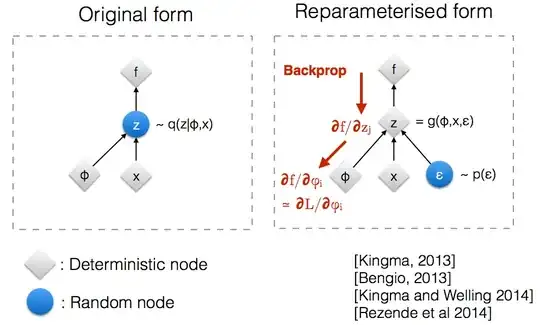

After reading through Kingma's NIPS 2015 workshop slides, I realized that we need the reparameterization trick in order to backpropagate through a random node.

Intuitively, in its original form, VAEs sample from a random node $z$ which is approximated by the parametric model $q(z \mid \phi, x)$ of the true posterior. Backprop cannot flow through a random node.

Introducing a new parameter $\epsilon$ allows us to reparameterize $z$ in a way that allows backprop to flow through the deterministic nodes.

-

5

-

8It's not, but it's not a "source of randomness" - this role has been taken over by $\epsilon$. – quant_dev Sep 17 '18 at 20:47

-

1Note that this method has been proposed multiple times before 2014: http://blog.shakirm.com/2015/10/machine-learning-trick-of-the-day-4-reparameterisation-tricks/ – quant_dev Sep 17 '18 at 20:49

-

4

-

7Unfortunately, it is not. Original form can still be backpropagatable however with higher variance. Details can be found from [my post](https://blog.neurallearningdymaics.com/2019/06/variational-autoencoders-1-motivation.html). – JP Zhang Jun 05 '19 at 15:35

-

-

1@JPZhang your link is behind a paywall. Is there a free version? – David Ireland Dec 19 '21 at 12:48

-

f is not backprop over paramerters, but the gradient of interest is, see sec.2.2 of the paper you cite. The point is to reduce sample variance, but the paper doesn't illustrate this point well. The fig is misleading I would say, even it's from the author. – Albert Chen Feb 28 '22 at 19:06

Assume we have a normal distribution $q$ that is parameterized by $\theta$, specifically $q_{\theta}(x) = N(\theta,1)$. We want to solve the below problem $$ \text{min}_{\theta} \quad E_q[x^2] $$ This is of course a rather silly problem and the optimal $\theta$ is obvious. However, here we just want to understand how the reparameterization trick helps in calculating the gradient of this objective $E_q[x^2]$.

One way to calculate $\nabla_{\theta} E_q[x^2]$ is as follows $$ \nabla_{\theta} E_q[x^2] = \nabla_{\theta} \int q_{\theta}(x) x^2 dx = \int x^2 \nabla_{\theta} q_{\theta}(x) \frac{q_{\theta}(x)}{q_{\theta}(x)} dx = \int q_{\theta}(x) \nabla_{\theta} \log q_{\theta}(x) x^2 dx = E_q[x^2 \nabla_{\theta} \log q_{\theta}(x)] $$

For our example where $q_{\theta}(x) = N(\theta,1)$, this method gives $$ \nabla_{\theta} E_q[x^2] = E_q[x^2 (x-\theta)] $$

Reparameterization trick is a way to rewrite the expectation so that the distribution with respect to which we take the gradient is independent of parameter $\theta$. To achieve this, we need to make the stochastic element in $q$ independent of $\theta$. Hence, we write $x$ as $$ x = \theta + \epsilon, \quad \epsilon \sim N(0,1) $$ Then, we can write $$ E_q[x^2] = E_p[(\theta+\epsilon)^2] $$ where $p$ is the distribution of $\epsilon$, i.e., $N(0,1)$. Now we can write the derivative of $E_q[x^2]$ as follows $$ \nabla_{\theta} E_q[x^2] = \nabla_{\theta} E_p[(\theta+\epsilon)^2] = E_p[2(\theta+\epsilon)] $$

Here is an IPython notebook I have written that looks at the variance of these two ways of calculating gradients. http://nbviewer.jupyter.org/github/gokererdogan/Notebooks/blob/master/Reparameterization%20Trick.ipynb

- 1,349

- 8

- 11

-

6

-

8it's 0. one way to see that is to note that E[x^2] = E[x]^2 + Var(x), which is theta^2 + 1 in this case. So theta=0 minimizes this objective. – goker May 07 '18 at 18:38

-

So, it depends completely on the problem? For say min_\theta E_q [ |x|^(1/4) ] it might be completely different? – Anne van Rossum May 12 '18 at 10:49

-

What depends on the problem? The optimal theta? If so, yes it certainly depends on the problem. – goker May 13 '18 at 17:32

-

In the equation just after "this method gives", shouldn't it be $\nabla_\theta E_q[x^2] = E_q[x^2 (x-\theta) q_\theta(x)]$ instead of just $\nabla_\theta E_q[x^2] = E_q[x^2 (x-\theta)]$? – AlphaOmega Jan 23 '19 at 19:52

-

@AlphaOmega Where does the extra $q_{\theta}(x)$ come from? Perhaps, you are referring to the $q$ that comes from the expectation $E_q$. – goker Jan 24 '19 at 21:02

-

@goker In the line above you are saying $\nabla_\theta E_q[x^2]=E_q[x^2\nabla_\theta \log q_\theta (x)]$. And given that $q_\theta(x)$ is the normal distribution, with $\theta$ being its mean, its derivative wrt to theta is $\nabla_\theta q_\theta (x) = (x-\theta) q_\theta(x)$ for $\sigma^2 = 1$. Basically, $\frac{\partial}{\partial \mu} exp(-\frac{(x-\mu)^2}{2}) = (x-\mu)exp(-\frac{(x-\mu)^2}{2})$. – AlphaOmega Jan 25 '19 at 09:44

-

3@AlphaOmega you need to take the derivative of $\log q_{\theta}(x)$. Then $\nabla_{\theta} \log q_{\theta}(x) = \nabla_{\theta} ( - \frac{(x-\theta)^2}{2} )$ (ignoring terms that don't depend on $\theta$. Finally, $\nabla_{\theta} (- \frac{(x-\theta)^2}{2}) = (x-\theta)$. – goker Jan 26 '19 at 13:56

-

-

Perhaps obvious, but I don’t see the connection in the second last eq. E_q=E_p. I understand x is the change of variable , but why p=q inside the expectation? Why can we replace in such a way ? – c.uent Aug 07 '20 at 02:54

-

@c.uent becase $p$ is the distribution of $\epsilon$ (the variable we change to). probably a more explicit way of seeing this is to write the expectations out as integrals, and changing the variables. – goker Aug 08 '20 at 08:08

-

Yes, that helped a lot. Thanks! Basically, you have q_theta(\theta+\epsilon) = p(\theta). In case, anybody has problems, remember that q is N(\theta, 1) and p is a gaussian(0,1). Thanks, @goker – c.uent Aug 11 '20 at 00:00

-

1@goker could you please further explain equation3, right after One way to calculate ∇θEq[x2] is as follow? I am not sure how you transited from the third equality to fourth. – samsambakster Aug 04 '21 at 07:11

A reasonable example of the mathematics of the "reparameterization trick" is given in goker's answer, but some motivation could be helpful. (I don't have permissions to comment on that answer; thus here is a separate answer.)

In short, we want to compute some value $G_\theta$ of the form, $$G_\theta = \nabla_{\theta}E_{x\sim q_\theta}[\ldots]$$

Without the "reparameterization trick", we can often rewrite this, per goker's answer, as $E_{x\sim q_\theta}[G^{est}_\theta(x)]$, where, $$G^{est}_\theta(x) = \ldots\frac{1}{q_\theta(x)}\nabla_{\theta}q_\theta(x) = \ldots\nabla_{\theta} \log(q_\theta(x))$$

If we draw an $x$ from $q_\theta$, then $G^{est}_\theta$ is an unbiased estimate of $G_\theta$. This is an example of "importance sampling" for Monte Carlo integration. If the $\theta$ represented some outputs of a computational network (e.g., a policy network for reinforcement learning), we could use this in back-propagatation (apply the chain rule) to find derivatives with respect to network parameters.

The key point is that $G^{est}_\theta$ is often a very bad (high variance) estimate. Even if you average over a large number of samples, you may find that its average seems to systematically undershoot (or overshoot) $G_\theta$.

A fundamental problem is that essential contributions to $G_\theta$ may come from values of $x$ which are very rare (i.e., $x$ values for which $q_\theta(x)$ is small). The factor of $\frac{1}{q_\theta(x)}$ is scaling up your estimate to account for this, but that scaling won't help if you don't see such a value of $x$ when you estimate $G_\theta$ from a finite number of samples. The goodness or badness of $q_\theta$ (i.e.,the quality of the estimate, $G^{est}_\theta$, for $x$ drawn from $q_\theta$) may depend on $\theta$, which may be far from optimum (e.g., an arbitrarily chosen initial value). It is a little like the story of the drunk person who looks for his keys near the streetlight (because that's where he can see/sample) rather than near where he dropped them.

The "reparameterization trick" sometimes address this problem. Using goker's notation, the trick is to rewrite $x$ as a function of a random variable, $\epsilon$, with a distribution, $p$, that does not depend on $\theta$, and then rewrite the expectation in $G_\theta$ as an expectation over $p$,

$$G_\theta = \nabla_\theta E_{\epsilon\sim p}[J(\theta,\epsilon)] = E_{\epsilon\sim p}[ \nabla_\theta J(\theta,\epsilon)]$$ for some $J(\theta,\epsilon)$.

The reparameterization trick is especially useful when the new estimator, $\nabla_\theta J(\theta,\epsilon)$, no longer has the problems mentioned above (i.e., when we are able to choose $p$ so that getting a good estimate does not depend on drawing rare values of $\epsilon$). This can be facilitated (but is not guaranteed) by the fact that $p$ does not depend on $\theta$ and that we can choose $p$ to be a simple unimodal distribution.

However, the reparamerization trick may even "work" when $\nabla_\theta J(\theta,\epsilon)$ is not a good estimator of $G_\theta$. Specifically, even if there are large contributions to $G_\theta$ from $\epsilon$ which are very rare, we consistently don't see them during optimization and we also don't see them when we use our model (if our model is a generative model). In slightly more formal terms, we can think of replacing our objective (expectation over $p$) with an effective objective that is an expectation over some "typical set" for $p$. Outside of that typical set, our $\epsilon$ might produce arbitrarily poor values of $J$ -- see Figure 2(b) of Brock et. al. for a GAN evaluated outside the typical set sampled during training (in that paper, smaller truncation values corresponding to latent variable values farther from the typical set, even though they are higher probability).

I hope that helps.

- 411

- 4

- 3

-

"The factor of 1/qθ(x) is scaling up your estimate to account for this, but if you never see such a value of x, that scaling won't help." Can you explain more? – czxttkl Nov 10 '18 at 19:52

-

@czxttkl In practice we estimate expected values with a finite number of samples. If $q_\theta$ is very small for some $x$, then we may be very unlikely to sample such an $x$. So even though $G_{\theta}^{est}(x)$ includes a large factor of $1/q_\theta$, and may make a meaningful contribution to the true expected value, it may be excluded from our estimate of the expected value for any reasonable number of samples. – Seth Bruder Nov 19 '18 at 17:59

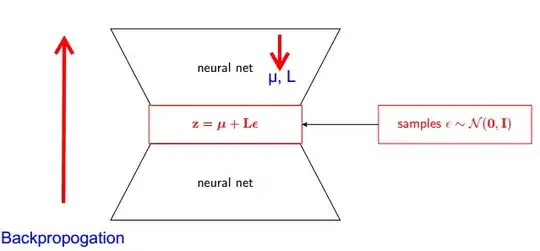

Let me explain first, why do we need Reparameterization trick in VAE.

VAE has encoder and decoder. Decoder randomly samples from true posterior Z~ q(z∣ϕ,x). To implement encoder and decoder as a neural network, you need to backpropogate through random sampling and that is the problem because backpropogation cannot flow through random node; to overcome this obstacle, we use reparameterization trick .

Now lets come to trick. Since our posterior is normally distributed, we can approximate it with another normal distribution . We approximate Z with normally distributed ε.

But how this is relevant ?

Now instead of saying that Z is sampled from q(z∣ϕ,x) , we can say Z is a function that takes parameter (ε,( µ, L)) and these µ, L comes from upper neural network (encoder). Therefore while backpropogation all we need is partial derivatives w.r.t. µ, L and ε is irrelevant for taking derivatives.

- 321

- 2

- 5

-

Best video to understand this concept. I would recommend to watch complete video for better understanding but if you want to understand only reparameterization trick then watch from 8 minute. https://www.youtube.com/channel/UCNIkB2IeJ-6AmZv7bQ1oBYg – Sherlock Feb 27 '18 at 23:42

-

$q(z|\phi, x)$ is not the true posterior, it is the approximated one. We do not know what the true posterior is. – mesllo Dec 18 '21 at 15:30

I thought the explanation found in Stanford CS228 course on probabilistic graphical models was very good. It can be found here: https://ermongroup.github.io/cs228-notes/extras/vae/

I've summarized/copied the important parts here for convenience/my own understanding (although I strongly recommend just checking out the original link).

So, our problem is that we have this gradient we want to calculate: $$\nabla_\phi \mathbb{E}_{z\sim q(z|x)}[f(x,z)]$$

If you're familiar with score function estimators (I believe REINFORCE is just a special case of this), you'll notice that is pretty much the problem they solve. However, the score function estimator has a high variance, leading to difficulties in learning models much of the time.

So, under certain conditions, we can express the distribution $q_\phi (z|x)$ as a 2-step process.

First we sample a noise variable $\epsilon$ from a simple distribution $p(\epsilon)$ like the standard Normal. Next, we apply a deterministic transformation $g_\phi(\epsilon, x)$ that maps the random noise onto this more complex distribution. This second part is not always possible, but it is true for many interesting classes of $q_\phi$.

As an example, let's use a very simple q from which we sample.

$$z \sim q_{\mu, \sigma} = \mathcal{N}(\mu, \sigma)$$ Now, instead of sampling from $q$, we can rewrite this as $$ z = g_{\mu, \sigma}(\epsilon) = \mu + \epsilon\cdot\sigma$$ where $\epsilon \sim \mathcal{N}(0, 1)$.

Now, instead of needing to get the gradient of an expectation of q(z), we can rewrite it as the gradient of an expectation with respect to the simpler function $p(\epsilon)$.

$$\nabla_\phi \mathbb{E}_{z\sim q(z|x)}[f(x,z)] = \mathbb{E}_{\epsilon \sim p(\epsilon)}[\nabla_\phi f(x,g(\epsilon, x))]$$

This has lower variance, for imo, non-trivial reasons. Check part D of the appendix here for an explanation: https://arxiv.org/pdf/1401.4082.pdf

- 121

- 1

- 3

-

Hi, do you know, why in the implementation, they divide the std by 2? (i.e std = torch.exp(z_var / 2) ) in the reparameterization ? – Hossein Sep 08 '19 at 14:15

We have our probablistic model. And want to recover parameters of the model. We reduce our task to optimizing variational lower bound (VLB). To do this we should be able make two things:

- calculate VLB

- get gradient of VLB

Authors suggest using Monte Carlo Estimator for both. And actually they introduce this trick to get more precise Monte Carlo Gradient Estimator of VLB.

It's just improvement of numerical method.

- 141

- 3

The reparameterization trick reduces the variance of the MC estimator for the gradient dramatically. So it's a variance reduction technique:

Our goal is to find an estimate of $$ \nabla_\phi \mathbb E_{q(z^{(i)} \mid x^{(i)}; \phi)} \left[ \log p\left( x^{(i)} \mid z^{(i)}, w \right) \right] $$

We could use the "Score function estimator": $$ \nabla_\phi \mathbb E_{q(z^{(i)} \mid x^{(i)}; \phi)} \left[ \log p\left( x^{(i)} \mid z^{(i)}, w \right) \right] = \mathbb E_{q(z^{(i)} \mid x^{(i)}; \phi)} \left[ \log p\left( x^{(i)} \mid z^{(i)}, w \right) \nabla_\phi \log q_\phi(z)\right] $$ But the score function estimator has high variance. E.g. if the probability $p\left( x^{(i)} \mid z^{(i)}, w \right)$ is very small then the absolute value of $\log p\left( x^{(i)} \mid z^{(i)}, w \right)$ is very large and the value itself is negative. So we would have high variance.

With Reparametrization $z^{(i)} = g(\epsilon^{(i)}, x^{(i)}, \phi)$ we have $$ \nabla_\phi \mathbb E_{q(z^{(i)} \mid x^{(i)}; \phi)} \left[ \log p\left( x^{(i)} \mid z^{(i)}, w \right) \right] = \mathbb E_{p(\epsilon^{(i)})} \left[ \nabla_\phi \log p\left( x^{(i)} \mid g(\epsilon^{(i)}, x^{(i)}, \phi), w \right) \right] $$

Now the expectation is w.r.t. $p(\epsilon^{(i)})$ and $p(\epsilon^{(i)})$ is independent of the gradient parameter $\phi$. So we can put the gradient directly inside the expectation which can be easily seen by writing out the expectation explicitly. The gradient values are much smaller. Therefore, we have (intuitively) lower variance.

Note: We can do this reparametrization trick only if $z^{(i)}$ is continuous so we can take the gradient of $z^{(i)} = g(\epsilon^{(i)}, x^{(i)}, \phi)$.

- 622

- 5

- 10