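Autoencoders are feedforward neural networks trained to reconstruct their own input. Usually one of the hidden layers is a "bottleneck" with fewer units than the input. This leads to an encoder-decoder interpretation: the layers up to the bottleneck encode the input into a compact code, and the layers after it decode that code back into a reconstruction of the input.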
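One minimal way to write this down is shown below. It assumes a single bottleneck layer of width $k < d$, an elementwise nonlinearity $\sigma$ (for example $\tanh$) in the encoder, a linear decoder, and squared reconstruction error. These are illustrative choices; other depths, activations, and losses are equally common.

$$
\mathbf{h} = f(\mathbf{x}) = \sigma(W_e\mathbf{x} + \mathbf{b}_e), \qquad
\hat{\mathbf{x}} = g(\mathbf{h}) = W_d\mathbf{h} + \mathbf{b}_d,
\qquad \mathbf{x}\in\mathbb{R}^d,\; W_e\in\mathbb{R}^{k\times d},\; W_d\in\mathbb{R}^{d\times k}
$$

$$
\min_{W_e,\,\mathbf{b}_e,\,W_d,\,\mathbf{b}_d}\; \frac{1}{N}\sum_{n=1}^{N} \bigl\lVert \mathbf{x}^{(n)} - g\bigl(f(\mathbf{x}^{(n)})\bigr) \bigr\rVert_2^2
$$

Here $f$ is the encoder and $g$ the decoder. Since $k < d$, the network cannot pass the input through unchanged and has to compress it into the code $\mathbf{h}$.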

Autoencoders can be applied to unlabeled data to learn features that best represent the variations in the data distribution. This process is known as unsupervised feature learning or representation learning.
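The following is only a toy sketch of unsupervised feature learning. It is written in plain Python (standard library only) so it runs without a deep-learning framework. Everything in it is an assumption made for illustration: the class `TinyAutoencoder`, the helper `make_unlabeled_data`, the synthetic 4-D data driven by two hidden factors, the tanh encoder with a linear decoder, the squared-error loss, and plain per-sample SGD. The model trains on points that carry no labels. Afterwards, the activations of its 2-unit bottleneck serve as the learned features.

```python
import math
import random


class TinyAutoencoder:
    """Hypothetical d -> k -> d autoencoder: tanh encoder, linear decoder, k < d."""

    def __init__(self, d, k, seed=0):
        rng = random.Random(seed)
        self.d, self.k = d, k
        self.W_e = [[rng.uniform(-0.5, 0.5) for _ in range(d)] for _ in range(k)]  # k x d
        self.b_e = [0.0] * k
        self.W_d = [[rng.uniform(-0.5, 0.5) for _ in range(k)] for _ in range(d)]  # d x k
        self.b_d = [0.0] * d

    def encode(self, x):
        # Bottleneck code h = tanh(W_e x + b_e); these values are the learned features.
        return [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
                for row, b in zip(self.W_e, self.b_e)]

    def decode(self, h):
        # Reconstruction x_hat = W_d h + b_d.
        return [sum(w * hj for w, hj in zip(row, h)) + b
                for row, b in zip(self.W_d, self.b_d)]

    def loss(self, data):
        # Mean over samples of the squared reconstruction error ||x - x_hat||^2.
        total = 0.0
        for x in data:
            x_hat = self.decode(self.encode(x))
            total += sum((xh - xi) ** 2 for xh, xi in zip(x_hat, x))
        return total / len(data)

    def sgd_step(self, x, lr):
        h = self.encode(x)
        x_hat = self.decode(h)
        # dL/dx_hat for L = ||x_hat - x||^2.
        err = [2.0 * (xh - xi) for xh, xi in zip(x_hat, x)]
        # Backpropagate into the bottleneck using W_d before it is updated.
        grad_h = [sum(err[i] * self.W_d[i][j] for i in range(self.d)) for j in range(self.k)]
        grad_z = [g * (1.0 - hj * hj) for g, hj in zip(grad_h, h)]  # tanh'(z) = 1 - tanh(z)^2
        for i in range(self.d):
            for j in range(self.k):
                self.W_d[i][j] -= lr * err[i] * h[j]
            self.b_d[i] -= lr * err[i]
        for j in range(self.k):
            for i in range(self.d):
                self.W_e[j][i] -= lr * grad_z[j] * x[i]
            self.b_e[j] -= lr * grad_z[j]

    def fit(self, data, epochs=200, lr=0.01, seed=0):
        # Plain SGD: one update per sample, shuffled each epoch.
        rng = random.Random(seed)
        order = list(range(len(data)))
        for _ in range(epochs):
            rng.shuffle(order)
            for idx in order:
                self.sgd_step(data[idx], lr)


def make_unlabeled_data(n, seed=1):
    # Synthetic 4-D points driven by only 2 hidden factors (a, b); no labels anywhere.
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        a, b = rng.uniform(-1, 1), rng.uniform(-1, 1)
        data.append([a, b, (a + b) / 2, (a - b) / 2])
    return data


data = make_unlabeled_data(200)
model = TinyAutoencoder(d=4, k=2)
initial_loss = model.loss(data)
model.fit(data)
final_loss = model.loss(data)
features = [model.encode(x) for x in data]  # 2-D learned representation of each point
print(f"reconstruction error: {initial_loss:.3f} -> {final_loss:.3f}")
print("features of first point:", features[0])
```

In this synthetic setup the bottleneck has exactly as many units as there are hidden factors, so the reconstruction error should fall well below its starting value. Real data would call for a framework such as PyTorch or JAX, mini-batches, and deeper encoders and decoders.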

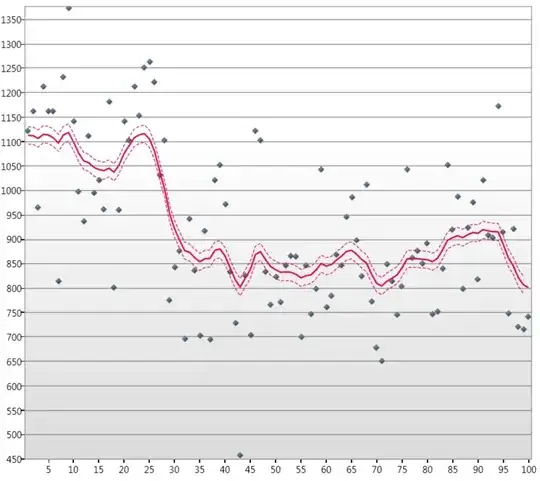

Here is an example of a set of filters learned by an autoencoder on a face recognition dataset: