Just wondering if it is possible to find the expected value of $x$, if it is normally distributed, given that it is below a certain value (for example, below the mean value).

It is of course possible. At a minimum you could calculate by brute force $F(t)^{-1} \int_{- \infty}^{x} t f(t) dt$. Or if you know $\mu$ and $\sigma$ you could estimate it using a simulation. – dsaxton Aug 08 '15 at 16:32

@dsaxton There are some typos in that formula, but we get the idea. What I am curious about is how exactly you would run the simulation when the threshold is far below the mean. – whuber Aug 08 '15 at 17:01

@whuber Yes, $F(t)$ should be $F(x)$. It wouldn't be very smart to do a simulation when $F(x)$ is close to zero, but as you pointed out there's an exact formula anyways. – dsaxton Aug 08 '15 at 17:12

@dsaxton OK, fair enough. I was only hoping you had in mind some kind of clever and simple idea for simulating from the tail of a normal distribution. – whuber Aug 08 '15 at 17:16

More or less the same question in Math.SE: http://math.stackexchange.com/questions/749664/average-iq-of-mensa – JiK Aug 08 '15 at 19:32

@whuber For whatever it's worth, a simple but not so clever way would be to simulate directly from the conditional distribution by taking $F^{-1}(F(x) U)$ where $U \sim$ uniform$(0, 1)$. – dsaxton Aug 08 '15 at 22:58
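A minimal sketch of the inverse-CDF idea in the last comment, written in Python with the standard library's `statistics.NormalDist`. The function name `sample_below` and the example parameters are hypothetical. Computing $F(T)$ with `math.erfc` rather than `NormalDist.cdf` is my own choice: a form like $\tfrac12\left(1+\operatorname{erf}(\cdot)\right)$ (which, to my knowledge, is how `NormalDist.cdf` is computed) cancels catastrophically far in the left tail, whereas `erfc` keeps its relative accuracy. With that change the sampler still works for thresholds well below the mean, as long as $F(T)$ itself does not underflow.

```python
import math
import random
from statistics import NormalDist, fmean

def sample_below(mu, sigma, T, n=100_000, seed=0):
    """Draw n values of X ~ N(mu, sigma^2) conditioned on X <= T using the
    inverse-CDF construction F^{-1}(F(T) * U) with U ~ Uniform(0, 1]."""
    dist = NormalDist(mu, sigma)
    # F(T) via erfc, which stays accurate deep in the left tail
    p_max = 0.5 * math.erfc(-(T - mu) / (sigma * math.sqrt(2.0)))
    if not 0.0 < p_max < 1.0:
        raise ValueError("F(T) must lie strictly between 0 and 1")
    rng = random.Random(seed)
    # 1 - rng.random() lies in (0, 1], so every probability handed to inv_cdf
    # lies in (0, F(T)] and never hits the endpoints 0 or 1, which it rejects
    return [dist.inv_cdf(p_max * (1.0 - rng.random())) for _ in range(n)]

draws = sample_below(mu=0.0, sigma=1.0, T=-5.0)
print(fmean(draws))   # Monte Carlo estimate of E(X | X <= -5)
```

Because every draw comes from the conditional distribution, no samples are wasted on rejection, which is what makes this usable even when $F(T)$ is tiny.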

2 Answers

A normally distributed variable $X$ with mean $\mu$ and variance $\sigma^2$ has the same distribution as $\sigma Z + \mu$ where $Z$ is a standard normal variable. All you need to know about $Z$ is that

- its cumulative distribution function is called $\Phi$,

- it has a probability density function $\phi(z) = \Phi^\prime(z)$, and that

- $\phi^\prime(z) = -z \phi(z)$.

The first two bullets are just notation and definitions: the third is the only special property of normal distributions we will need.
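The third property can be checked numerically. This small sketch, assuming Python and a central-difference approximation with an arbitrarily chosen step `h`, compares a finite-difference derivative of $\phi$ with $-z\phi(z)$ at a few points:

```python
import math

def phi(z):
    """Standard normal density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)

def phi_prime_numeric(z, h=1e-5):
    """Central-difference approximation to phi'(z)."""
    return (phi(z + h) - phi(z - h)) / (2.0 * h)

for z in (-3.0, -1.0, 0.0, 0.5, 2.0):
    print(f"z={z:5.1f}  numeric={phi_prime_numeric(z): .8f}  -z*phi(z)={-z * phi(z): .8f}")
```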

Let the "certain value" be $T$. Anticipating the change from $X$ to $Z$, define

$$t = (T-\mu)/\sigma,$$

so that

$$\Pr(X \le T) = \Pr(Z \le t) = \Phi(t).$$

Then, starting with the definition of the conditional expectation, we may exploit its linearity to obtain

$$\eqalign{ \mathbb{E}(X\,|\, X \le T) &= \mathbb{E}(\sigma Z + \mu \,|\, Z \le t) = \sigma \mathbb{E}(Z \,|\, Z \le t) + \mu \mathbb{E}(1 \,|\, Z \le t) \\ &= \left(\sigma \int_{-\infty}^t z \phi(z) dz + \mu \int_{-\infty}^t \phi(z) dz \right) / \Pr(Z \le t)\\ &=\left(-\sigma \int_{-\infty}^t \phi^\prime(z) dz + \mu \int_{-\infty}^t \Phi^\prime(z) dz\right) / \Phi(t). }$$

The Fundamental Theorem of Calculus asserts that any integral of a derivative is found by evaluating the function at the endpoints: $\int_a^b F^\prime(z) dz = F(b) - F(a)$. This applies to both integrals. Since both $\Phi$ and $\phi$ must vanish at $-\infty$, we obtain

$$\mathbb{E}(X\,|\, X \le T) = \mu - \sigma \frac{\phi\left(t\right)}{\Phi\left(t\right)}.$$
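As a sanity check on the two evaluations behind this result, $\int_{-\infty}^t z\phi(z)\,dz = -\phi(t)$ and $\int_{-\infty}^t \phi(z)\,dz = \Phi(t)$, here is a sketch that approximates both integrals with composite Simpson's rule. Standing in for $-\infty$ with a point 40 units to the left, the number of subintervals, and the helper names are all assumptions made for illustration.

```python
import math

def phi(z):
    """Standard normal density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)

def Phi(z):
    """Standard normal CDF via erfc."""
    return 0.5 * math.erfc(-z / math.sqrt(2.0))

def simpson(f, a, b, n=20_000):
    """Composite Simpson's rule on [a, b] with n (even) subintervals."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3.0

def lower_tail_integrals(t):
    # phi has underflowed to 0 this far left, so a stands in for -infinity
    a = min(t, 0.0) - 40.0
    first = simpson(lambda z: z * phi(z), a, t)   # expected: -phi(t)
    second = simpson(phi, a, t)                    # expected: Phi(t)
    return first, second

for t in (-2.0, 0.0, 1.5):
    first, second = lower_tail_integrals(t)
    print(f"t={t:4.1f}  {first:.10f} vs {-phi(t):.10f};  {second:.10f} vs {Phi(t):.10f}")
```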

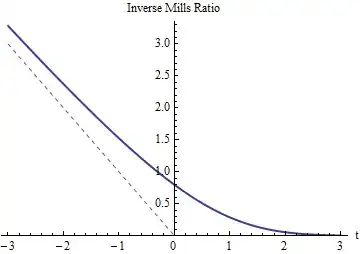

The conditional expectation $\mathbb{E}(X\,|\,X \le T)$ is therefore the original mean minus a correction term: $\sigma$ times $\phi(t)/\Phi(t)$, the inverse Mills ratio.
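A sketch of the closed form $\mu - \sigma\,\phi(t)/\Phi(t)$ in Python, standard library only. The name `mean_below` and the example parameters are hypothetical. $\Phi$ is computed through `math.erfc` so that it stays accurate for thresholds well below the mean; very far into the left tail, $\Phi(t)$ eventually underflows in double precision and the ratio can no longer be formed this way, so the function raises an error in that case.

```python
import math

def phi(z):
    """Standard normal density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)

def Phi(z):
    """Standard normal CDF via erfc, which avoids cancellation in the left tail."""
    return 0.5 * math.erfc(-z / math.sqrt(2.0))

def mean_below(mu, sigma, T):
    """E(X | X <= T) for X ~ N(mu, sigma^2): mu - sigma * phi(t) / Phi(t)."""
    t = (T - mu) / sigma          # standardized threshold
    p = Phi(t)
    if p == 0.0:
        raise ValueError("Phi(t) underflowed; T is too far into the left tail")
    return mu - sigma * phi(t) / p

print(mean_below(0.0, 1.0, 0.0))     # standard normal truncated at its mean
print(mean_below(10.0, 2.0, 7.0))    # hypothetical mu = 10, sigma = 2, T = 7
print(mean_below(10.0, 2.0, 30.0))   # threshold far above the mean: close to mu
```

The brute-force route from the comments, $F(T)^{-1}\int_{-\infty}^{T} x f(x)\,dx$ evaluated numerically, should agree with this to quadrature accuracy.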

As we would expect, the inverse Mills ratio must be positive, and it must exceed $-t$ (whose graph is shown with a dotted red line) because $\mathbb{E}(Z\,|\,Z \le t) = -\phi(t)/\Phi(t)$ has to lie below $t$. It has to dwindle down to $0$ as $t$ grows large, for then the truncation at $Z=t$ (or $X=T$) changes almost nothing. As $t$ grows very negative, the inverse Mills ratio must approach $-t$, because the tails of the normal distribution decrease so rapidly that almost all the probability in the left tail is concentrated near its right-hand end (at $t$).
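To see this behavior in numbers, the following sketch tabulates $\phi(t)/\Phi(t)$ together with its excess over $-t$. The grid of $t$ values and the helper name `inv_mills` are arbitrary choices for illustration.

```python
import math

def phi(z):
    """Standard normal density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)

def Phi(z):
    """Standard normal CDF via erfc (accurate in the left tail)."""
    return 0.5 * math.erfc(-z / math.sqrt(2.0))

def inv_mills(t):
    """phi(t) / Phi(t), the ratio multiplying sigma in the correction term."""
    return phi(t) / Phi(t)

for t in (-20.0, -10.0, -5.0, -2.0, 0.0, 2.0, 5.0):
    lam = inv_mills(t)
    # lam stays positive and above -t; lam + t shrinks toward 0 as t -> -infinity
    print(f"t={t:6.1f}  ratio={lam:.6g}  ratio + t={lam + t:.6g}")
```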

Finally, when $T = \mu$ is at the mean, $t=0$, where the inverse Mills ratio equals $\phi(0)/\Phi(0) = \sqrt{2/\pi} \approx 0.797885$. This implies that the expected value of $X$ truncated at its mean (for which $X-\mu$ is the negative of a half-normal variable) lies $\sqrt{2/\pi}$ times its standard deviation below the original mean: $\mathbb{E}(X\,|\,X \le \mu) = \mu - \sigma\sqrt{2/\pi}$.
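The arithmetic at $t=0$ can be checked directly. The values $\mu=100$, $\sigma=15$ below are a hypothetical illustration, not taken from the question; with them the mean truncated at $\mu$ comes out at about $88.03$.

```python
import math

phi0 = 1.0 / math.sqrt(2.0 * math.pi)   # phi(0)
Phi0 = 0.5                               # Phi(0), by symmetry of the normal
ratio = phi0 / Phi0                      # inverse Mills ratio at t = 0
print(ratio, math.sqrt(2.0 / math.pi))   # the two expressions agree

# Hypothetical scale: mu = 100, sigma = 15, truncated at T = mu
mu, sigma = 100.0, 15.0
print(mu - sigma * ratio)                # E(X | X <= mu) = mu - sigma * sqrt(2/pi)
```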

In general, let $X$ have distribution function $F$.

We have, for $x\in[c_1,c_2]$ and a continuous distribution (so that $P(X=c_1)=0$),
\begin{eqnarray*} P(X\leq x\mid c_1\leq X \leq c_2)&=&\frac{P(\{X\leq x\}\cap\{c_1\leq X \leq c_2\})}{P(c_1\leq X \leq c_2)}=\frac{P(c_1\leq X \leq x)}{P(c_1\leq X \leq c_2)}\\&=&\frac{F(x)-F(c_1)}{F(c_2)-F(c_1)}. \end{eqnarray*}
You may obtain special cases by taking, for example, $c_1=-\infty$, which yields $F(c_1)=0$.
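A sketch of this truncated CDF for the normal case, assuming Python. `truncated_cdf` is a hypothetical helper; returning 0 below $c_1$ and 1 above $c_2$ extends the formula (stated for $x\in[c_1,c_2]$) in the natural way. Infinite limits are passed as `math.inf`, so $c_1=-\infty$ gives $F(c_1)=0$ as in the special case above.

```python
import math

def Phi(z):
    """Standard normal CDF via erfc (accurate in the left tail)."""
    return 0.5 * math.erfc(-z / math.sqrt(2.0))

def truncated_cdf(x, mu, sigma, c1=-math.inf, c2=math.inf):
    """P(X <= x | c1 <= X <= c2) for X ~ N(mu, sigma^2)."""
    if x <= c1:
        return 0.0
    if x >= c2:
        return 1.0

    def F(v):
        # CDF of N(mu, sigma^2); F(-inf) = 0 and F(inf) = 1
        return Phi((v - mu) / sigma)

    return (F(x) - F(c1)) / (F(c2) - F(c1))

# Standard normal with c1 = -inf, c2 = 0: equals Phi(x) / Phi(0) = 2 * Phi(x)
print(truncated_cdf(-1.0, 0.0, 1.0, c2=0.0), 2.0 * Phi(-1.0))
```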

By differentiating conditional cdfs, you may get conditional densities (e.g., $f(x\mid X<0)=2\phi(x)$ for $x<0$ when $X\sim N(0,1)$), which can be used for conditional expectations.
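Differentiating the conditional CDF with respect to $x$ gives $f(x)/(F(c_2)-F(c_1))$ on $[c_1,c_2]$ and $0$ elsewhere. A sketch for the normal case, with a hypothetical `truncated_pdf` helper, confirming that it reduces to $2\phi(x)$ when $X\sim N(0,1)$ is conditioned on $X<0$:

```python
import math

def phi(z):
    """Standard normal density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)

def Phi(z):
    """Standard normal CDF via erfc (accurate in the left tail)."""
    return 0.5 * math.erfc(-z / math.sqrt(2.0))

def truncated_pdf(x, mu, sigma, c1=-math.inf, c2=math.inf):
    """Density of X ~ N(mu, sigma^2) conditioned on c1 <= X <= c2:
    f(x) / (F(c2) - F(c1)) on [c1, c2] and 0 elsewhere."""
    if not c1 <= x <= c2:
        return 0.0
    mass = Phi((c2 - mu) / sigma) - Phi((c1 - mu) / sigma)   # F(c2) - F(c1)
    return phi((x - mu) / sigma) / (sigma * mass)

# Standard normal conditioned on X < 0: should match 2 * phi(x) for x < 0
for x in (-2.0, -0.5):
    print(truncated_pdf(x, 0.0, 1.0, c2=0.0), 2.0 * phi(x))
```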

In your example (a standard normal truncated at its mean), integrating with $x\phi(x)=-\phi'(x)$ gives $$ E(X\mid X<0)=2\int_{-\infty}^0x\phi(x)\,dx=-2\phi(0)=-\sqrt{2/\pi}, $$ as in @whuber's answer.

+1 (somehow I missed this when it first appeared). The first part is an excellent account of how to obtain truncated distribution functions and the second shows how to compute their PDFs. – whuber Sep 22 '16 at 04:17