I am using the following data and code to fit a non-linear (polynomial) regression and to find the simplest equation of a curve that fits the data:

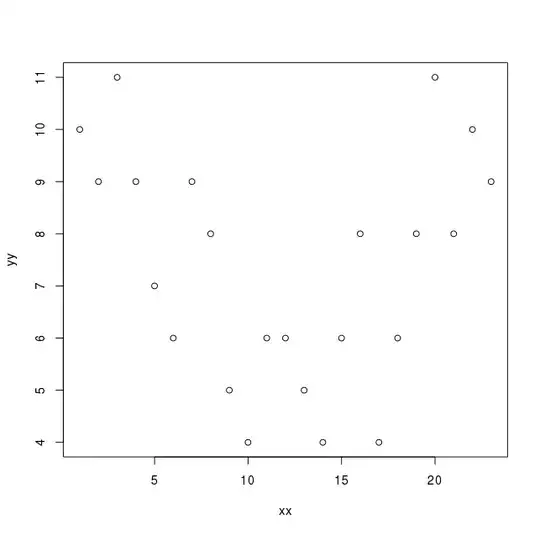

    > dput(ddf)
    structure(list(xx = 1:23, yy = c(10L, 9L, 11L, 9L, 7L, 6L, 9L,
    8L, 5L, 4L, 6L, 6L, 5L, 4L, 6L, 8L, 4L, 6L, 8L, 11L, 8L, 10L,
    9L)), .Names = c("xx", "yy"), row.names = c(NA, -23L), class = "data.frame")

    > head(ddf)
      xx yy
    1  1 10
    2  2  9
    3  3 11
    4  4  9
    5  5  7
    6  6  6

    > fit = lm(yy ~ poly(xx, 9), data=ddf)
    > summary(fit)

    Call:
    lm(formula = yy ~ poly(xx, 9), data = ddf)

    Residuals:
        Min      1Q  Median      3Q     Max
    -1.9890 -1.2031  0.1086  0.7493  2.4248

    Coefficients:
                  Estimate Std. Error t value Pr(>|t|)
    (Intercept)   7.347826   0.356758  20.596 2.62e-11
    poly(xx, 9)1 -0.880172   1.710953  -0.514 0.615582
    poly(xx, 9)2  7.821383   1.710953   4.571 0.000524    # NOTE THIS
    poly(xx, 9)3  0.424579   1.710953   0.248 0.807892
    poly(xx, 9)4 -2.151779   1.710953  -1.258 0.230641
    poly(xx, 9)5 -0.876964   1.710953  -0.513 0.616857
    poly(xx, 9)6 -0.961726   1.710953  -0.562 0.583610
    poly(xx, 9)7 -0.002171   1.710953  -0.001 0.999007
    poly(xx, 9)8 -0.051884   1.710953  -0.030 0.976269
    poly(xx, 9)9  0.840177   1.710953   0.491 0.631571

    Residual standard error: 1.711 on 13 degrees of freedom
    Multiple R-squared: 0.6451, Adjusted R-squared: 0.3993
    F-statistic: 2.625 on 9 and 13 DF, p-value: 0.05575
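In the first summary every `poly(xx, 9)` term has the same standard error, 1.710953, which matches the residual standard error. That is what you would expect if `poly()` (with its default `raw = FALSE`) returns columns that are orthogonal to each other and to the intercept, each with unit length. A minimal base-R sketch to check this, assuming the data are rebuilt from the `dput()` output as plain numeric vectors (`P`, `gram` and `se` are illustrative names):

```r
# Rebuild the data from the dput() output (numeric rather than integer)
ddf <- data.frame(
  xx = 1:23,
  yy = c(10, 9, 11, 9, 7, 6, 9, 8, 5, 4, 6, 6, 5, 4, 6, 8, 4, 6, 8, 11, 8, 10, 9)
)

fit <- lm(yy ~ poly(xx, 9), data = ddf)

# Orthogonal polynomial basis used by the default poly() call
P <- poly(ddf$xx, 9)

# t(P) %*% P: should be (numerically) the 9 x 9 identity matrix
gram <- crossprod(P)
print(round(gram, 10))

# Each column should also sum to ~0, i.e. be orthogonal to the intercept
print(round(colSums(P), 10))

# With orthonormal columns, every slope's standard error equals sigma
se <- coef(summary(fit))[-1, "Std. Error"]
print(se)
print(sigma(fit))
```

If the cross-product matrix is the identity, the coefficient covariance matrix sigma^2 (X'X)^-1 is sigma^2 times the identity for the slope terms, so all nine standard errors coincide (and the intercept's is sigma / sqrt(23)).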

If I use `raw = TRUE` instead:

    > fit = lm(yy ~ poly(xx, 9, raw=TRUE), data=ddf)
    > summary(fit)

    Call:
    lm(formula = yy ~ poly(xx, 9, raw = TRUE), data = ddf)

    Residuals:
        Min      1Q  Median      3Q     Max
    -1.9890 -1.2031  0.1086  0.7493  2.4248

    Coefficients:
                               Estimate Std. Error t value Pr(>|t|)
    (Intercept)               2.844e+00  1.529e+01   0.186    0.855
    poly(xx, 9, raw = TRUE)1  1.310e+01  2.711e+01   0.483    0.637
    poly(xx, 9, raw = TRUE)2 -8.439e+00  1.723e+01  -0.490    0.632    # NOTE THIS
    poly(xx, 9, raw = TRUE)3  2.637e+00  5.432e+00   0.485    0.635
    poly(xx, 9, raw = TRUE)4 -4.719e-01  9.715e-01  -0.486    0.635
    poly(xx, 9, raw = TRUE)5  5.112e-02  1.048e-01   0.488    0.634
    poly(xx, 9, raw = TRUE)6 -3.400e-03  6.937e-03  -0.490    0.632
    poly(xx, 9, raw = TRUE)7  1.355e-04  2.757e-04   0.492    0.631
    poly(xx, 9, raw = TRUE)8 -2.967e-06  6.032e-06  -0.492    0.631
    poly(xx, 9, raw = TRUE)9  2.739e-08  5.578e-08   0.491    0.632

    Residual standard error: 1.711 on 13 degrees of freedom
    Multiple R-squared: 0.6451, Adjusted R-squared: 0.3993
    F-statistic: 2.625 on 9 and 13 DF, p-value: 0.05575
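Both summaries report the same residuals, R-squared and F-statistic, which suggests the two calls fit the same degree-9 curve and differ only in how its coefficients are parameterised. The sketch below checks this and shows how strongly the raw powers x, x^2, ..., x^9 correlate with one another. It uses only base R and the same rebuilt data; `fit_orth`, `fit_raw` and `raw_basis` are illustrative names.

```r
# Rebuild the data from the dput() output (numeric rather than integer)
ddf <- data.frame(
  xx = 1:23,
  yy = c(10, 9, 11, 9, 7, 6, 9, 8, 5, 4, 6, 6, 5, 4, 6, 8, 4, 6, 8, 11, 8, 10, 9)
)

fit_orth <- lm(yy ~ poly(xx, 9), data = ddf)
fit_raw  <- lm(yy ~ poly(xx, 9, raw = TRUE), data = ddf)

# Same column space, so the fitted values are expected to agree up to rounding
print(all.equal(fitted(fit_orth), fitted(fit_raw)))
print(c(orth = summary(fit_orth)$r.squared, raw = summary(fit_raw)$r.squared))

# Raw powers x, x^2, ..., x^9 as separate columns
raw_basis <- poly(ddf$xx, 9, raw = TRUE)

# Pairwise correlations between the raw powers (many are close to 1)
print(round(cor(raw_basis), 3))
```

Highly correlated predictors mean each t-test in the raw fit asks whether one power adds anything once the other eight are already in the model, which tends to inflate its standard error. This is one plausible reading of the large `Std. Error` values in the raw output, not a full diagnosis.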

I find that without `raw = TRUE`, one p-value (for the 2nd-degree term) is significant, but with `raw = TRUE` it is not. Why does this happen, and what does it mean?
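One way to probe the question is to refit at a lower degree. With an orthogonal basis, dropping the higher-degree terms should leave the remaining coefficients unchanged, so the `poly(xx, 9)2` estimate is the same quantity a quadratic-only fit would give; with the raw basis the x^2 coefficient depends on which other powers are present. The base-R sketch below compares both and then runs a nested F-test with `anova()` to see whether degrees 3 to 9 improve on the quadratic. It assumes the same rebuilt data; `fit2`, `fit9`, `orth2`, `raw2` and similar names are illustrative.

```r
# Rebuild the data from the dput() output (numeric rather than integer)
ddf <- data.frame(
  xx = 1:23,
  yy = c(10, 9, 11, 9, 7, 6, 9, 8, 5, 4, 6, 6, 5, 4, 6, 8, 4, 6, 8, 11, 8, 10, 9)
)

fit2 <- lm(yy ~ poly(xx, 2), data = ddf)
fit9 <- lm(yy ~ poly(xx, 9), data = ddf)

# Orthogonal basis: intercept, linear and quadratic estimates match across degrees
orth2 <- coef(fit2)
orth9 <- coef(fit9)
print(cbind(degree2 = orth2, degree9 = orth9[1:3]))

# Raw basis: the x^2 coefficient changes once higher powers enter the model
raw2 <- coef(lm(yy ~ poly(xx, 2, raw = TRUE), data = ddf))
raw9 <- coef(lm(yy ~ poly(xx, 9, raw = TRUE), data = ddf))
print(cbind(degree2 = raw2, degree9 = raw9[1:3]))

# Nested F-test: do the terms of degree 3 to 9 improve on the quadratic?
print(anova(fit2, fit9))
```

If the quadratic turns out to be adequate, `raw2` gives the coefficients of the simpler equation directly in the familiar form yy = b0 + b1*xx + b2*xx^2; the same curve could also be fitted as `lm(yy ~ xx + I(xx^2), data = ddf)`.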

I asked this question on Stack Overflow but was advised to post it here. Thanks for your help.