Let your (centered) data be stored in a $n\times d$ matrix $\mathbf X$ with $d$ features (variables) in columns and $n$ data points in rows. Let the covariance matrix $\mathbf C=\mathbf X^\top \mathbf X/n$ have eigenvectors in columns of $\mathbf E$ and eigenvalues on the diagonal of $\mathbf D$, so that $\mathbf C = \mathbf E \mathbf D \mathbf E^\top$.

Then what you call "normal" PCA whitening transformation is given by $\mathbf W_\mathrm{PCA} = \mathbf D^{-1/2} \mathbf E^\top$, see e.g. my answer in How to whiten the data using principal component analysis?

However, this whitening transformation is not unique. Indeed, whitened data will stay whitened after any rotation, which means that any $\mathbf W = \mathbf R \mathbf W_\mathrm{PCA}$ with orthogonal matrix $\mathbf R$ will also be a whitening transformation. In what is called ZCA whitening, we take $\mathbf E$ (stacked together eigenvectors of the covariance matrix) as this orthogonal matrix, i.e. $$\mathbf W_\mathrm{ZCA} = \mathbf E \mathbf D^{-1/2} \mathbf E^\top = \mathbf C^{-1/2}.$$

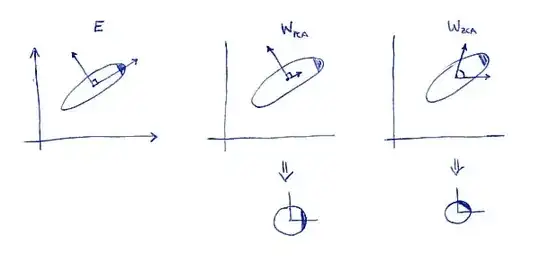

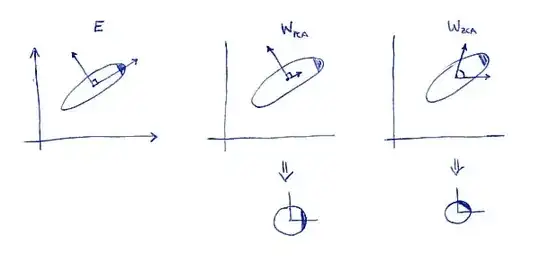

One defining property of ZCA transformation (sometimes also called "Mahalanobis transformation") is that it results in whitened data that is as close as possible to the original data (in the least squares sense). In other words, if you want to minimize $\|\mathbf X - \mathbf X \mathbf A^\top\|^2$ subject to $ \mathbf X \mathbf A^\top$ being whitened, then you should take $\mathbf A = \mathbf W_\mathrm{ZCA}$. Here is a 2D illustration:

Left subplot shows the data and its principal axes. Note the dark shading in the upper-right corner of the distribution: it marks its orientation. Rows of $\mathbf W_\mathrm{PCA}$ are shown on the second subplot: these are the vectors the data is projected on. After whitening (below) the distribution looks round, but notice that it also looks rotated --- dark corner is now on the East side, not on the North-East side. Rows of $\mathbf W_\mathrm{ZCA}$ are shown on the third subplot (note that they are not orthogonal!). After whitening (below) the distribution looks round and it's oriented in the same way as originally. Of course, one can get from PCA whitened data to ZCA whitened data by rotating with $\mathbf E$.

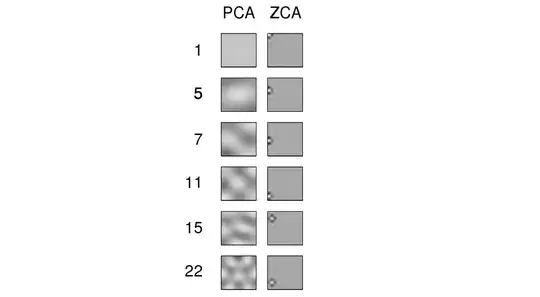

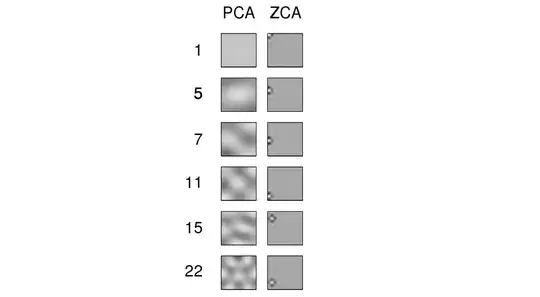

The term "ZCA" seems to have been introduced in Bell and Sejnowski 1996 in the context of independent component analysis, and stands for "zero-phase component analysis". See there for more details. Most probably, you came across this term in the context of image processing. It turns out, that when applied to a bunch of natural images (pixels as features, each image as a data point), principal axes look like Fourier components of increasing frequencies, see first column of their Figure 1 below. So they are very "global". On the other hand, rows of ZCA transformation look very "local", see the second column. This is precisely because ZCA tries to transform the data as little as possible, and so each row should better be close to one the original basis functions (which would be images with only one active pixel). And this is possible to achieve, because correlations in natural images are mostly very local (so de-correlation filters can also be local).

Update

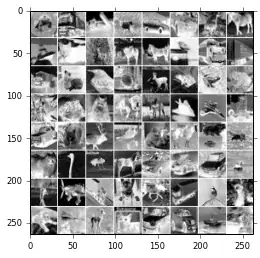

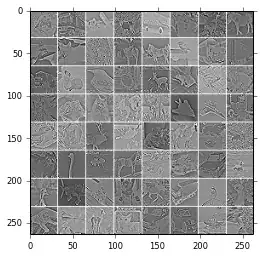

More examples of ZCA filters and of images transformed with ZCA are given in Krizhevsky, 2009, Learning Multiple Layers of Features from Tiny Images, see also examples in @bayerj's answer (+1).

I think these examples give an idea as to when ZCA whitening might be preferable to the PCA one. Namely, ZCA-whitened images still resemble normal images, whereas PCA-whitened ones look nothing like normal images. This is probably important for algorithms like convolutional neural networks (as e.g. used in Krizhevsky's paper), which treat neighbouring pixels together and so greatly rely on the local properties of natural images. For most other machine learning algorithms it should be absolutely irrelevant whether the data is whitened with PCA or ZCA.