As several others have already mentioned, there are many situations where theory dictates a regression through the origin (e.g. y = corn production, x = amount of cultivated land; when x = 0, y must be 0). In these situations, I would first run a traditional regression with both an intercept and a slope term. Then, test whether the estimated intercept is significantly different from zero (Kutner et al., 2004). If the intercept is not significantly different from zero anyway, you may have a good argument for setting it equal to zero.
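A minimal sketch of that two-step check in plain Python (standard library only), assuming the usual normal-error simple linear regression. The intercept's t-statistic is $b_0 / s\{b_0\}$ with $n-2$ degrees of freedom, where $s^2\{b_0\} = MSE\,(1/n + \bar{x}^2/\sum(x_i-\bar{x})^2)$. The function names and the toy data are hypothetical, and the two-sided p-value is obtained by numerically integrating the Student-t density (Simpson's rule) rather than by calling a statistics library.

```python
import math


def fit_with_intercept(x, y):
    """Least-squares fit of y = b0 + b1*x; returns (b0, b1, se_b0, df)."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b1 = sxy / sxx
    b0 = y_bar - b1 * x_bar
    sse = sum((yi - b0 - b1 * xi) ** 2 for xi, yi in zip(x, y))
    df = n - 2                      # two estimated coefficients
    mse = sse / df                  # estimate of the error variance
    se_b0 = math.sqrt(mse * (1.0 / n + x_bar ** 2 / sxx))
    return b0, b1, se_b0, df


def t_pdf(t, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))
    return math.exp(log_c - (df + 1) / 2 * math.log1p(t * t / df))


def two_sided_p(t_stat, df, steps=2000):
    """P(|T| >= |t_stat|), using Simpson's rule for P(0 <= T <= |t_stat|)."""
    a = abs(t_stat)
    h = a / steps                   # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(a, df)
    for k in range(1, steps):
        total += (4 if k % 2 else 2) * t_pdf(k * h, df)
    area = total * h / 3
    return max(0.0, 1.0 - 2.0 * area)


# Hypothetical data that roughly follow y = 2x.
x = [1, 2, 3, 4, 5, 6]
y = [2.1, 3.9, 6.2, 7.8, 10.1, 12.0]

b0, b1, se_b0, df = fit_with_intercept(x, y)
t_stat = b0 / se_b0
p_value = two_sided_p(t_stat, df)
print(f"b0 = {b0:.4f}, t = {t_stat:.3f}, p = {p_value:.3f}")
```

With these made-up numbers the estimated intercept is close to zero and the p-value is large, so the data give no reason to keep the intercept. Any regression package reports the same t-test in its coefficient table.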

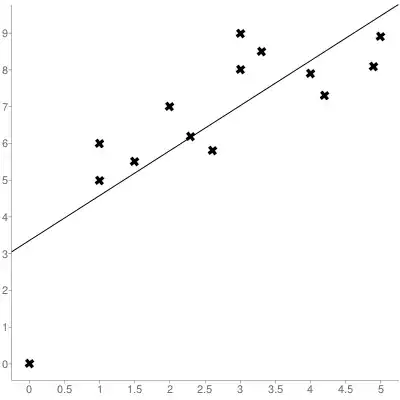

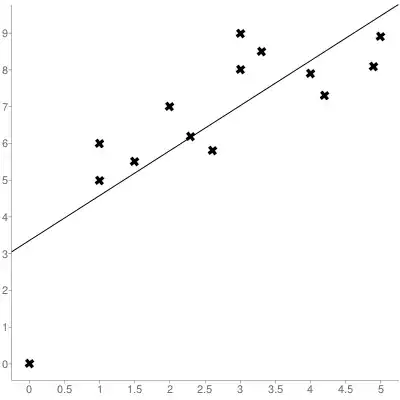

NOTE: What happens if you KNOW the function must go through the origin, but you still have a significant intercept term? Beware of the following scenario:

Here, the data point in the lower left corner corresponds to $(0,0)$. Nevertheless, the linear regression appears to have a significant positive intercept. The underlying reason is likely that, between $x=0$ and $x=1$, there is a rapid, non-linear increase in $Y$. That is, the linear model is a good approximation for the data in the range $x \geq 1$, but does not extrapolate well below this range. This can suggest further refinements to your model.
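A small noiseless illustration of this effect, assuming a hypothetical curve $Y = 5(1 - e^{-10x}) + x$. It passes through the origin, rises steeply on $[0, 1]$, and is almost exactly the line $Y = 5 + x$ for $x \geq 1$. An ordinary least-squares line fitted to $x = 0, 1, \ldots, 10$ gets a clearly positive intercept even though the point $(0,0)$ is in the data, while a fit restricted to $x \geq 1$ recovers the intercept of about 5 that describes that range.

```python
import math


def fit_line(x, y):
    """Ordinary least-squares fit of y = b0 + b1*x; returns (b0, b1)."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b1 = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    return y_bar - b1 * x_bar, b1


def true_curve(x):
    """Hypothetical curve: zero at x = 0, steep rise on [0, 1], ~linear after."""
    return 5.0 * (1.0 - math.exp(-10.0 * x)) + x


x = [float(i) for i in range(11)]           # 0, 1, ..., 10 (includes the origin)
y = [true_curve(xi) for xi in x]

b0_all, b1_all = fit_line(x, y)             # fit over the whole range
b0_sub, b1_sub = fit_line(x[1:], y[1:])     # fit over x >= 1 only

print(f"all points: b0 = {b0_all:.3f}, b1 = {b1_all:.3f}")
print(f"x >= 1:     b0 = {b0_sub:.3f}, b1 = {b1_sub:.3f}")
```

The full-range line passes roughly 3.4 units above the origin, because its position is driven by the ten points with $x \geq 1$.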

WARNING: As Alecos explained, the residuals in a regression-through-the-origin model will typically not sum to zero (that is, they have a nonzero mean). Why is this important? The short answer is that it affects the calculation of $R^2$ and can make it difficult to interpret. In fact, $R^2$ can be negative in this case.
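A minimal numerical check in plain Python, using made-up data with a downward trend so that forcing the line through the origin is a poor choice. The no-intercept slope is the least-squares estimate $b = \sum x_i Y_i / \sum x_i^2$; with it, the residuals do not sum to zero, and the two common $R^2$ formulas give very different answers.

```python
def fit_through_origin(x, y):
    """Least-squares slope for y = b*x (no intercept): b = sum(xy) / sum(x^2)."""
    return sum(xi * yi for xi, yi in zip(x, y)) / sum(xi * xi for xi in x)


# Hypothetical data with a large positive intercept and a negative slope.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [10.0, 9.0, 8.0, 7.0, 6.0]

b = fit_through_origin(x, y)
y_hat = [b * xi for xi in x]
resid = [yi - yh for yi, yh in zip(y, y_hat)]
y_bar = sum(y) / len(y)

ssto = sum((yi - y_bar) ** 2 for yi in y)       # total variability
sse = sum(e * e for e in resid)                  # unexplained variability
ssr = sum((yh - y_bar) ** 2 for yh in y_hat)     # "explained" variability

print("slope:", b)                      # 2.0
print("sum of residuals:", sum(resid))  # 10.0, not 0
print("1 - SSE/SSTO:", 1 - sse / ssto)  # -10.0
print("SSR/SSTO:", ssr / ssto)          # 6.0
```

Here $SSE = 110$ is far larger than $SSTO = 10$, so $1 - SSE/SSTO$ is negative, while $SSR/SSTO$ exceeds 1; neither number behaves like a proportion of explained variance.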

To see this, we have to consider how $R^2$ is derived. We start with the identity:

$$(Y_i - \bar{Y}) = (Y_i - \hat{Y}_i) + (\hat{Y}_i - \bar{Y})$$

where $\bar{Y}$ is the mean of all dependent variable observations $Y_i$, and $\hat{Y}_i$ is our predicted value for each observation. Now square both sides and sum over all $i$:

$$\sum(Y_i - \bar{Y})^2 = \sum(Y_i - \hat{Y}_i)^2 + \sum(\hat{Y}_i - \bar{Y})^2 + 2 \sum(Y_i - \hat{Y}_i)(\hat{Y}_i - \bar{Y})$$

It can be shown that, for a linear model with both a slope and an intercept, the cross-product term is equal to zero. However, this is not the case for regression through the origin. When that term is zero, we get the following equation

$$\sum(Y_i - \bar{Y})^2 = \sum(Y_i - \hat{Y}_i)^2 + \sum(\hat{Y}_i - \bar{Y})^2$$

which states that the total variability in the dependent variable, $SSTO = \sum(Y_i - \bar{Y})^2$, is the sum of the variability explained by the model, $SSR = \sum(\hat{Y}_i - \bar{Y})^2$, and the remaining unexplained variability, $SSE = \sum(Y_i - \hat{Y}_i)^2$. We summarize this with the $R^2$ statistic, $R^2 = SSR/SSTO$, which under this decomposition equals $1 - SSE/SSTO$.
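For contrast, a sketch of the same bookkeeping for an ordinary fit with an intercept, again on made-up data. The cross-product term comes out as zero up to floating-point rounding, so $SSTO = SSE + SSR$ and $SSR/SSTO$ equals $1 - SSE/SSTO$.

```python
def fit_with_intercept(x, y):
    """Ordinary least-squares fit of y = b0 + b1*x; returns (b0, b1)."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b1 = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    return y_bar - b1 * x_bar, b1


# Hypothetical data.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.0, 4.0, 5.0, 4.0, 5.0]

b0, b1 = fit_with_intercept(x, y)
y_hat = [b0 + b1 * xi for xi in x]
y_bar = sum(y) / len(y)

ssto = sum((yi - y_bar) ** 2 for yi in y)
sse = sum((yi - yh) ** 2 for yi, yh in zip(y, y_hat))
ssr = sum((yh - y_bar) ** 2 for yh in y_hat)
cross = sum((yi - yh) * (yh - y_bar) for yi, yh in zip(y, y_hat))

print(f"b0 = {b0:.2f}, b1 = {b1:.2f}")                    # b0 = 2.20, b1 = 0.60
print(f"SSTO = {ssto:.2f}, SSE + SSR = {sse + ssr:.2f}")  # both 6.00
print(f"cross-product term = {cross:.2e}")                # ~0 (rounding error only)
print(f"SSR/SSTO = {ssr / ssto:.2f}, 1 - SSE/SSTO = {1 - sse / ssto:.2f}")  # 0.60, 0.60
```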

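However, all of this is based on the assumption that $\sum(Y_i - \hat{Y}_i)(\hat{Y}_i - \bar{Y})=0$. Again, this is not the case for regression through the origin, so the two usual formulas, $SSR/SSTO$ and $1 - SSE/SSTO$, no longer agree. In extreme cases, when $SSE>SSTO$ (the no-intercept line fits the data worse than the horizontal line $\hat{Y} = \bar{Y}$), $R^2 = 1 - SSE/SSTO$ will be less than zero.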
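The role of the intercept can be made explicit with the least-squares normal equations (a standard derivation, sketched here with $X_i$ denoting the predictor values). Write $e_i = Y_i - \hat{Y}_i$, so the cross-product term is

$$\sum e_i(\hat{Y}_i - \bar{Y}) = \sum e_i \hat{Y}_i - \bar{Y}\sum e_i.$$

With an intercept, $\hat{Y}_i = b_0 + b_1 X_i$ and the normal equations give $\sum e_i = 0$ and $\sum X_i e_i = 0$, so both pieces vanish:

$$\sum e_i \hat{Y}_i = b_0 \sum e_i + b_1 \sum X_i e_i = 0.$$

Through the origin, $\hat{Y}_i = b_1 X_i$ and the only normal equation is $\sum X_i e_i = 0$. The first piece still vanishes, but $\sum e_i$ is generally nonzero, so the decomposition picks up an extra term:

$$SSTO = SSE + SSR - 2\bar{Y}\sum e_i.$$

Because this extra term can be large and of either sign, nothing keeps $SSR$ or $SSE$ below $SSTO$.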

References: The following article succinctly describes all of this: Eisenhauer (2003) "Regression through the Origin". Also see the textbook "Applied Linear Regression Models" by Kutner et al. (I believe chapter 3 has a section devoted to this).

Edit: After posting, I came across this previous question, which is very relevant: "Removal of statistically significant intercept term increases $R^2$ in linear model".