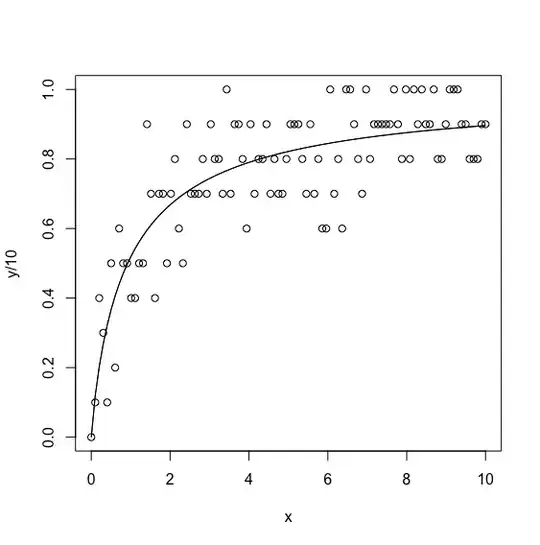

Consider a case where I have developed a predictive model using logistic regression. A logistic model gives a nonzero probability even when all the inputs are zero, because of the intercept. Now consider predicting whether a subject has a disease, where the probability carries a lot of significance. Even when all the inputs are zero, the model says there is some probability that the subject has the disease. Is there a way to remove this? Can we impose a condition on the output so that the probability is zero when all the inputs are zero, or can we subtract the probability obtained when all inputs are zero from each prediction?

To explain further, consider this example from Wikipedia https://en.wikipedia.org/wiki/Logistic_regression#Example:_Probability_of_passing_an_exam_versus_hours_of_study

The logistic model is

| | Coefficient | Std. Error | z-value | P-value (Wald) |
|---|---|---|---|---|
| Intercept | −4.0777 | 1.7610 | −2.316 | 0.0206 |
| Hours | 1.5046 | 0.6287 | 2.393 | 0.0167 |
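
Written out, and assuming the standard logistic form with these two coefficients, the fitted model is roughly:

$$
P(\text{pass} \mid \text{Hours}) = \frac{1}{1 + e^{-(-4.0777 + 1.5046 \cdot \text{Hours})}}
$$

Setting $\text{Hours} = 0$ leaves only the intercept term, so the prediction becomes

$$
P(\text{pass} \mid \text{Hours} = 0) = \frac{1}{1 + e^{4.0777}} \approx 0.017,
$$

which is small but not zero.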

And the output of the model is

| Hours of study | Probability of passing exam |
|---|---|
| 1 | 0.07 |
| 2 | 0.26 |
| 3 | 0.61 |
| 4 | 0.87 |
| 5 | 0.97 |
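
For reference, here is a minimal sketch that recomputes these probabilities from the reported coefficients, including the 0-hours case I am asking about. The function name `pass_probability` is just my own illustrative choice, and I am assuming the usual logistic (sigmoid) link.

```python
import math

# Coefficients as reported in the Wikipedia example
INTERCEPT = -4.0777
HOURS_COEF = 1.5046


def pass_probability(hours: float) -> float:
    """Logistic model: p = 1 / (1 + exp(-(intercept + coef * hours)))."""
    z = INTERCEPT + HOURS_COEF * hours
    return 1.0 / (1.0 + math.exp(-z))


# Print the prediction for 0 through 5 hours of study
for hours in range(0, 6):
    print(f"{hours} hours -> {pass_probability(hours):.2f}")
```

At 0 hours only the intercept contributes, which is where the nonzero baseline comes from.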

Here, when "Hours of study" is 0, the output is 0.02. How can we remove this bias?