Are there any empirical studies justifying the use of the one standard error rule in favour of parsimony? Obviously it depends on the data-generating process, but anything that analyses a large corpus of datasets would be a very interesting read.

The "one standard error rule" is applied when selecting models through cross-validation (or more generally through any randomization-based procedure).

Assume we consider models $M_\tau$ indexed by a complexity parameter $\tau\in\mathbb{R}$, such that $M_\tau$ is "more complex" than $M_{\tau'}$ exactly when $\tau>\tau'$. Assume further that we assess the quality of a model $M$ by some randomization process, e.g., cross-validation. Let $q(M)$ denote the "average" quality of $M$, e.g., the mean held-out prediction error across many cross-validation runs. We wish to minimize this quantity.

However, since our quality measure comes from some randomization procedure, it comes with variability. Let $s(M)$ denote the standard error of the quality of $M$ across the randomization runs, e.g., the standard deviation of the held-out prediction error of $M$ over the cross-validation runs, divided by the square root of the number of runs.

Then we choose the model $M_\tau$ with the smallest $\tau$ such that

$$q(M_\tau)\leq q(M_{\tau'})+s(M_{\tau'}),$$

where $\tau'$ indexes the (on average) best model, i.e., $q(M_{\tau'})=\min_\tau q(M_\tau)$.

That is, we choose the simplest model (the smallest $\tau$) which is no more than one standard error worse than the best model $M_{\tau'}$ in the randomization procedure.
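To make the selection step concrete, here is a minimal sketch of the rule as described above. The function name `one_se_rule`, its arguments, and the example numbers are hypothetical and chosen only for illustration. It assumes that each candidate $\tau$ comes with one error value per cross-validation fold, and that $s(M)$ is computed as the sample standard deviation over folds divided by the square root of the number of folds; other conventions for $s(M)$ would change the threshold.

```python
from math import sqrt
from statistics import mean, stdev


def one_se_rule(taus, fold_errors):
    """Pick the smallest tau whose mean error is within one SE of the best.

    taus        -- complexity parameters, larger means more complex
    fold_errors -- fold_errors[i] holds the per-fold errors of model taus[i]
    Returns (chosen_tau, best_tau, threshold).
    """
    # q(M_tau): mean error over folds
    q = [mean(errs) for errs in fold_errors]
    # s(M_tau): assumed here to be the standard error of that mean
    s = [stdev(errs) / sqrt(len(errs)) for errs in fold_errors]

    # tau': index of the model with the lowest mean error
    best = min(range(len(taus)), key=lambda i: q[i])
    threshold = q[best] + s[best]

    # Among models within the threshold, take the least complex one
    eligible = [i for i in range(len(taus)) if q[i] <= threshold]
    chosen = min(eligible, key=lambda i: taus[i])
    return taus[chosen], taus[best], threshold


# Made-up errors for four models and three folds
taus = [1, 2, 3, 4]
fold_errors = [
    [1.00, 1.20, 1.10],
    [0.52, 0.62, 0.57],
    [0.50, 0.60, 0.55],
    [0.56, 0.66, 0.61],
]
chosen_tau, best_tau, threshold = one_se_rule(taus, fold_errors)
print(chosen_tau, best_tau, round(threshold, 4))
```

In this toy example the model with $\tau=3$ has the lowest mean error, but $\tau=2$ lies within one standard error of it and is therefore the one selected.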

I have found this "one standard error rule" referred to in the following places, but never with any explicit justification:

- Page 80 in Classification and Regression Trees by Breiman, Friedman, Stone & Olshen (1984)

- Page 415 in Estimating the Number of Clusters in a Data Set via the Gap Statistic by Tibshirani, Walther & Hastie (JRSS B, 2001) (referencing Breiman et al.)

- Pages 61 and 244 in Elements of Statistical Learning by Hastie, Tibshirani & Friedman (2009)

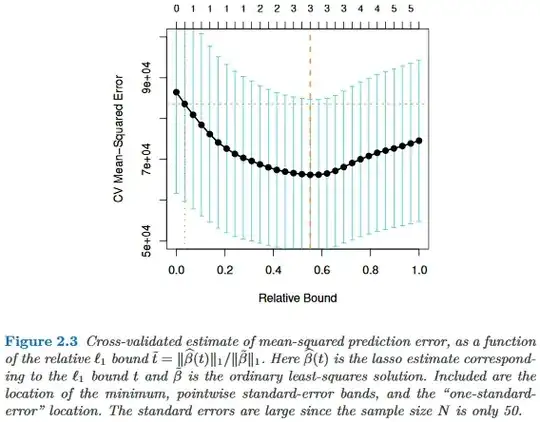

- Page 13 in Statistical Learning with Sparsity by Hastie, Tibshirani & Wainwright (2015)