I don't know of any rigorous justification for the "one-standard-error" rule. It seems to be a rule of thumb for situations where the analyst is more interested in parsimony than in predictive accuracy.

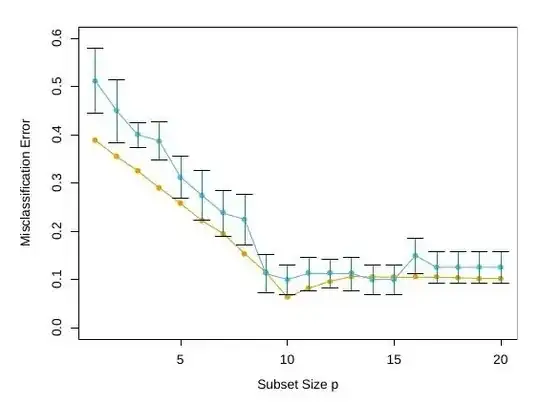

It's important to recognize the artificial model being evaluated in the section of ESL that brings up the "one-standard-error" rule (p.244; Figure 7.9 posted by @Rickyfox) and how that type of model might not be relevant to many real-world problems. It's "from the scenario in the bottom right panel of Figure 7.3," which is explained in text on p. 226: it's a classification problem with 80 cases. The 20 predictors are each uniformly and independently distributed in [0,1]; the true class is 1 if the sum of the first 10 predictors is > 5.
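To make that setup concrete, here is a minimal sketch of how such data could be simulated. The function name, its parameters, and the seed are my own illustrative choices, not anything from ESL; only the 80 cases, the 20 uniform predictors, and the "sum of the first 10 exceeds 5" rule come from the description above.

```python
import numpy as np

def simulate_esl_scenario(n_cases=80, n_predictors=20, n_relevant=10,
                          threshold=5.0, seed=0):
    """Simulate the classification scenario described above (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    # Predictors: independent draws from Uniform[0, 1]
    X = rng.uniform(0.0, 1.0, size=(n_cases, n_predictors))
    # Class is 1 when the sum of the first `n_relevant` predictors exceeds the threshold
    y = (X[:, :n_relevant].sum(axis=1) > threshold).astype(int)
    return X, y

X, y = simulate_esl_scenario()
print(X.shape, y.mean())  # data dimensions and the fraction of class-1 cases
```

By construction, the last 10 columns never enter `y`, and no predictor is built from any other, which is exactly what makes this scenario so favorable to a sparse model.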

Thus the model used for this example has no correlations among the predictors, and 10 of the 20 predictors have no predictive value at all. If you didn't know beforehand how many predictors are associated with class membership, but you suspected that only a few are and that the predictors aren't inter-correlated, you could argue that the "one-standard-error" rule would tend to give you the smallest useful LASSO model, one that is close to the "true" model.
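For readers who haven't seen the rule spelled out, here is a rough sketch of how it is usually applied to cross-validation results: find the penalty with the lowest mean CV error, then take the largest penalty whose mean error is still within one standard error of that minimum. The function name and the toy error values are made up for illustration, and I'm assuming the standard error is the across-fold standard deviation divided by the square root of the number of folds.

```python
import numpy as np

def one_se_choice(penalties, fold_errors):
    """Return (penalty at minimum CV error, penalty chosen by the one-SE rule).

    penalties:   shape (k,), candidate penalty values
    fold_errors: shape (k, n_folds), CV error for each penalty in each fold
    """
    penalties = np.asarray(penalties, dtype=float)
    fold_errors = np.asarray(fold_errors, dtype=float)
    n_folds = fold_errors.shape[1]

    mean_err = fold_errors.mean(axis=1)
    se_err = fold_errors.std(axis=1, ddof=1) / np.sqrt(n_folds)

    i_min = int(np.argmin(mean_err))
    cutoff = mean_err[i_min] + se_err[i_min]

    # Among penalties whose mean error is within the cutoff, take the
    # largest one, i.e. the most heavily penalized (sparsest) model.
    eligible = np.flatnonzero(mean_err <= cutoff)
    i_1se = eligible[np.argmax(penalties[eligible])]
    return penalties[i_min], penalties[i_1se]

# Toy CV errors (invented numbers): 4 penalties, 3 folds each
penalties = [0.01, 0.1, 0.5, 1.0]
fold_errors = [
    [0.30, 0.34, 0.32],
    [0.28, 0.32, 0.30],
    [0.29, 0.33, 0.31],
    [0.40, 0.44, 0.42],
]
pen_min, pen_1se = one_se_choice(penalties, fold_errors)
print(pen_min, pen_1se)
```

In this toy case the minimum-error penalty is 0.1, while the one-SE rule moves to the larger penalty 0.5, trading a little estimated accuracy for a sparser model.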

I haven't, however, come across many real-world situations where there are no correlations among the predictors or where one could assume a priori that a large number are unrelated to the outcome. Outside that idealized setting, I don't know of any justification for the "one-standard-error" rule. Choosing the penalty that minimizes cross-validation error would seem much better justified in such real-world situations.

Also, note that the variable selection performed by LASSO makes the most sense in situations where there aren't correlations among predictors. If there are such correlations, the specific predictors selected are likely to depend heavily on the data sample at hand, as you can illustrate by repeating LASSO on multiple bootstrapped samples of such a dataset. So, yes, you can select predictors with LASSO but there is no assurance, with correlated predictors, that the selected predictors are in any sense "true" predictors, just useful ones.
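The bootstrap check mentioned above can be sketched as follows. To keep it dependent only on NumPy, I've written out a small cyclic coordinate-descent lasso rather than calling a library; in practice you would use your usual LASSO implementation. The toy data (two nearly collinear predictors driven by a shared latent variable `z`, plus three noise predictors), the penalty `lam=0.3`, and all function names are assumptions chosen for illustration.

```python
import numpy as np

def lasso_cd(X, y, lam, n_iter=100):
    """Lasso by cyclic coordinate descent.

    Minimizes (1/(2n)) * ||y - X b||^2 + lam * ||b||_1.
    Assumes the columns of X are standardized and y is centered.
    """
    n, p = X.shape
    b = np.zeros(p)
    r = y.astype(float).copy()          # residual y - X b (b starts at 0)
    col_sq = (X ** 2).sum(axis=0) / n
    for _ in range(n_iter):
        for j in range(p):
            r += X[:, j] * b[j]         # remove predictor j's contribution
            rho = X[:, j] @ r / n
            # Soft-thresholding update for coefficient j
            b[j] = np.sign(rho) * max(abs(rho) - lam, 0.0) / col_sq[j]
            r -= X[:, j] * b[j]         # add the updated contribution back
    return b

def bootstrap_selection_frequency(X, y, lam, n_boot=100, seed=0):
    """Fraction of bootstrap resamples in which each predictor is selected."""
    rng = np.random.default_rng(seed)
    n, p = X.shape
    counts = np.zeros(p)
    for _ in range(n_boot):
        idx = rng.integers(0, n, size=n)           # resample cases with replacement
        Xb, yb = X[idx], y[idx]
        Xb = (Xb - Xb.mean(axis=0)) / Xb.std(axis=0)
        yb = yb - yb.mean()
        counts += lasso_cd(Xb, yb, lam) != 0
    return counts / n_boot

# Toy data: columns 0 and 1 are near-duplicates of z; columns 2-4 are noise
rng = np.random.default_rng(1)
n = 80
z = rng.normal(size=n)
X = np.column_stack([
    z + 0.1 * rng.normal(size=n),
    z + 0.1 * rng.normal(size=n),
    rng.normal(size=(n, 3)),
])
y = z + 0.5 * rng.normal(size=n)

freq = bootstrap_selection_frequency(X, y, lam=0.3)
print(np.round(freq, 2))  # per-predictor selection frequency
```

If the selection frequencies for the two correlated columns land well below 1, or swap places when you change the seed, that is the instability described above: which member of a correlated pair gets picked depends on the sample, even though either one is useful for prediction.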