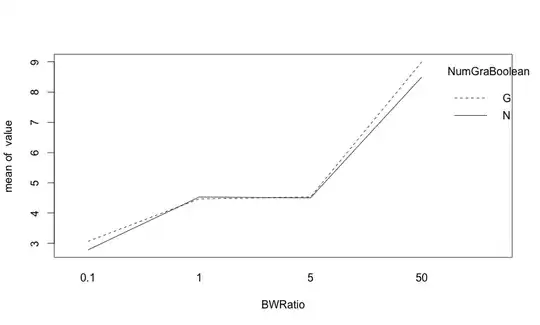

I did lasso selection using `lars::lars()` and got this plot, but I have no idea how to interpret it.

Could anyone provide a brief explanation? Why does it plot standardized coefficients against |beta|/max|beta|?

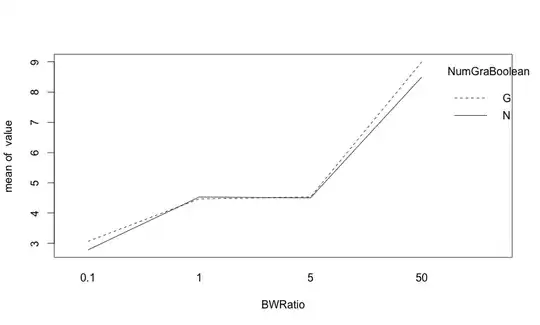

In regression, you're looking to find the $\beta$ that minimizes:

$(Y - X_1\beta_1 - X_2\beta_2 - \dots)^2$

LASSO applies a penalty term to the minimization problem:

$(Y - X_1\beta_1 - X_2\beta_2 - \dots)^2 + \alpha\sum_i |\beta_i|$
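To make the two criteria concrete, here is a minimal pure-Python sketch of the penalized sum of squares. The function name `lasso_objective` and the toy data are hypothetical, and implementations (lars included) scale the penalty differently, so treat `alpha` here as this answer's $\alpha$ only.

```python
def lasso_objective(beta, X, y, alpha):
    """Residual sum of squares plus alpha times the L1 norm of beta.

    X is a list of rows; beta and y are lists of numbers.
    With alpha = 0 this is the plain least-squares (OLS) criterion.
    """
    rss = 0.0
    for row, y_i in zip(X, y):
        fitted = sum(x_ij * b_j for x_ij, b_j in zip(row, beta))
        rss += (y_i - fitted) ** 2
    penalty = alpha * sum(abs(b_j) for b_j in beta)
    return rss + penalty


X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
y = [1.0, 2.0, 3.0]
print(lasso_objective([1.0, 2.0], X, y, alpha=0.0))  # 0.0: exact fit, no penalty
print(lasso_objective([1.0, 2.0], X, y, alpha=0.5))  # 1.5: same fit, penalty 0.5 * (1 + 2)
```

The same coefficients that fit perfectly still pay a price once $\alpha > 0$, which is what pushes the lasso solution away from OLS.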

So when $\alpha$ is zero there is no penalty and you get the OLS solution; this is where $|\beta|$ is at its maximum (or, since I didn't write $\beta$ as a vector, where $\sum_i |\beta_i|$ is largest).

As the penalization $\alpha$ increases, $\sum{|\beta_i|}$ is pulled towards zero, with the less important parameters being pulled to zero earlier. At some level of $\alpha$, all the $\beta_i$ have been pulled to zero.
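One way to watch this shrinkage happen is a small coordinate-descent solver for the same objective. Note that this is a swapped-in technique: lars computes the path with least angle regression, not coordinate descent, and the names `lasso_cd` and `soft_threshold` are hypothetical. No intercept is fitted, so with real data you would centre X and y first.

```python
def soft_threshold(z, t):
    """Shrink z toward zero by t, returning 0 if |z| <= t."""
    if z > t:
        return z - t
    if z < -t:
        return z + t
    return 0.0


def lasso_cd(X, y, alpha, n_sweeps=200):
    """Minimise sum((y - X beta)^2) + alpha * sum(|beta_j|) by coordinate descent.

    X is a list of rows with no all-zero column; no intercept is fitted.
    """
    n, p = len(X), len(X[0])
    beta = [0.0] * p
    col_sq = [sum(X[i][j] ** 2 for i in range(n)) for j in range(p)]
    for _ in range(n_sweeps):
        for j in range(p):
            # Correlation of column j with the partial residual
            # (y minus the fit from every column except j).
            rho = 0.0
            for i in range(n):
                fit_without_j = sum(X[i][k] * beta[k] for k in range(p) if k != j)
                rho += X[i][j] * (y[i] - fit_without_j)
            # Setting the subgradient to zero gives a soft threshold at alpha / 2
            # (the squared-error term carries no 1/2 factor).
            beta[j] = soft_threshold(rho, alpha / 2) / col_sq[j]
    return beta


X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
y = [1.0, 2.0, 3.0]
for alpha in [0.0, 2.0, 8.0, 10.0]:
    print(alpha, [round(b, 4) for b in lasso_cd(X, y, alpha)])
```

With this toy data, `alpha = 0` reproduces the OLS fit (1, 2), the first coefficient is already zero at `alpha = 8` while the second is still 0.5, and both are zero at `alpha = 10`.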

This is the x-axis on the graph. Instead of plotting $\alpha$ directly (large on the left, decreasing to zero as you move right), it plots the ratio of the sum of the absolute current estimates to the sum of the absolute OLS estimates, $\sum_i |\hat\beta_i| / \sum_i |\hat\beta_i^{\text{OLS}}|$. This runs from 0 on the left (every coefficient pulled to zero) to 1 on the right (the OLS fit). The vertical bars indicate when a variable has been pulled to zero (and appear to be labeled with the number of variables remaining).
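To make the x-axis concrete, here is a sketch that assumes an orthonormal design ($X^TX = I$), a special case where the lasso solution has a closed form: each OLS coefficient is soft-thresholded by $\alpha/2$. Real data (and lars) do not need this assumption; the OLS values and the helper names `lasso_orthonormal` and `shrinkage_ratio` are hypothetical.

```python
def soft_threshold(z, t):
    """Shrink z toward zero by t, returning 0 if |z| <= t."""
    if z > t:
        return z - t
    if z < -t:
        return z + t
    return 0.0


def lasso_orthonormal(beta_ols, alpha):
    """Lasso coefficients assuming orthonormal columns (X^T X = I).

    For the objective RSS + alpha * sum|beta_j|, each OLS coefficient is
    shrunk toward zero by alpha / 2 and becomes 0 once |beta_ols_j| <= alpha / 2.
    """
    return [soft_threshold(b, alpha / 2) for b in beta_ols]


def shrinkage_ratio(beta, beta_ols):
    """x-axis value: sum|beta| / sum|beta_ols| (0 = all zero, 1 = OLS)."""
    return sum(abs(b) for b in beta) / sum(abs(b) for b in beta_ols)


beta_ols = [3.0, -1.0, 0.5]  # hypothetical OLS estimates
for alpha in [0.0, 1.0, 2.0, 6.0]:
    beta = lasso_orthonormal(beta_ols, alpha)
    print(f"alpha={alpha}: beta={beta}, ratio={shrinkage_ratio(beta, beta_ols):.3f}")
```

Here the coefficients hit zero at $\alpha = 1, 2, 6$ in order of increasing $|\hat\beta^{\text{OLS}}|$, and the ratio falls from 1 to 0.667, 0.444 and finally 0, so moving right to left along the x-axis corresponds to increasing $\alpha$.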

As for the y-axis showing standardized coefficients: when running LASSO you generally standardize your X variables so that the penalty falls equally on all of them. If they were measured on different scales, the penalty would be uneven. For example, multiply all the values of one explanatory variable by 0.01: its OLS coefficient becomes 100 times larger, so it is pulled harder when running LASSO.
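The scaling argument can be checked numerically with a one-variable least-squares fit (plain OLS, not lasso, just to show how a coefficient's size follows its variable's units). The data and the helper names `ols_slope` and `standardize` are made up for illustration. lars's own `normalize = TRUE` default scales each column to unit L2 norm rather than unit variance, but the effect is the same: the coefficients stop depending on the units.

```python
from statistics import mean, pstdev


def ols_slope(x, y):
    """Slope of a simple least-squares regression of y on x (with intercept)."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def standardize(x):
    """Centre x and scale it to unit (population) standard deviation."""
    m, s = mean(x), pstdev(x)
    return [(v - m) / s for v in x]


x = [1.0, 2.0, 3.0, 4.0]
y = [2.1, 3.9, 6.2, 7.8]
x_small = [v * 0.01 for v in x]  # same variable, measured in different units

# Raw scale: the second slope is 100 times the first.
print(ols_slope(x, y), ols_slope(x_small, y))
# After standardizing, both versions give the same slope.
print(ols_slope(standardize(x), y), ols_slope(standardize(x_small), y))
```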