There are differences in the assumptions and the hypotheses that are tested.

The ANOVA (and t-test) is explicitly a test of equality of means of values. The Kruskal-Wallis (and Mann-Whitney) can be seen technically as a comparison of the mean ranks.

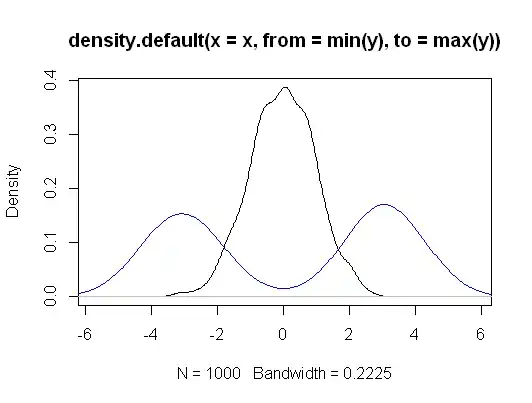

Hence, in terms of original values, the Kruskal-Wallis is more general than a comparison of means: it tests whether the probability that a random observation from each group is equally likely to be above or below a random observation from another group. The real data quantity that underlies that comparison is neither the differences in means nor the difference in medians, (in the two sample case) it is actually the median of all pairwise differences - the between-sample Hodges-Lehmann difference.

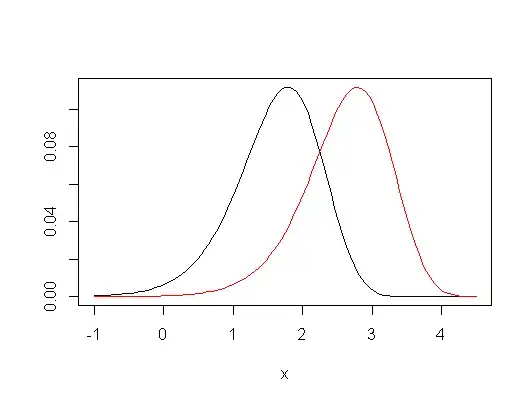

However if you choose to make some restrictive assumptions, then Kruskal-Wallis can be seen as a test of equality of population means, as well as quantiles (e.g. medians), and indeed a wide variety of other measures. That is, if you assume that the group-distributions under the null hypothesis are the same, and that under the alternative, the only change is a distributional shift (a so called "location-shift alternative"), then it is also a test of equality of population means (and, simultaneously, of medians, lower quartiles, etc).

[If you do make that assumption, you can obtain estimates of and intervals for the relative shifts, just as you can with ANOVA. Well, it is also possible to obtain intervals without that assumption, but they're more difficult to interpret.]

If you look at the answer here, especially toward the end, it discusses the comparison between the t-test and the Wilcoxon-Mann-Whitney, which (when doing two-tailed tests at least) are the equivalent* of ANOVA and Kruskal-Wallis applied to a comparison of only two samples; it gives a little more detail, and much of that discussion carries over to the Kruskal-Wallis vs ANOVA.

* (aside a particular issue that arises with multigroup comparisons where you can have non-transitive pairwise differences)

It's not completely clear what you mean by a practical difference. You use them in generally a generally similar way. When both sets of assumptions apply they usually tend to give fairly similar sorts of results, but they can certainly give fairly different p-values in some situations.

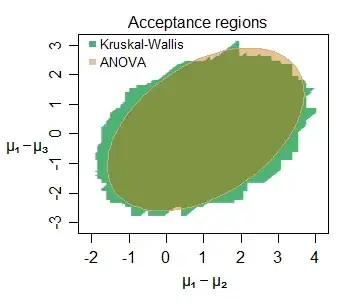

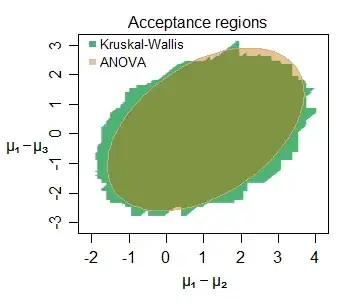

Edit: Here's an example of the similarity of inference even at small samples -- here's the joint acceptance region for the location-shifts among three groups (the second and third each compared with the first) sampled from normal distributions (with small sample sizes) for a particular data set, at the 5% level:

Numerous interesting features can be discerned -- the slightly larger acceptance region for the KW in this case, with its boundary consisting of vertical, horizontal and diagonal straight line segments (it is not hard to figure out why). The two regions tell us very similar things about the parameters of interest here.