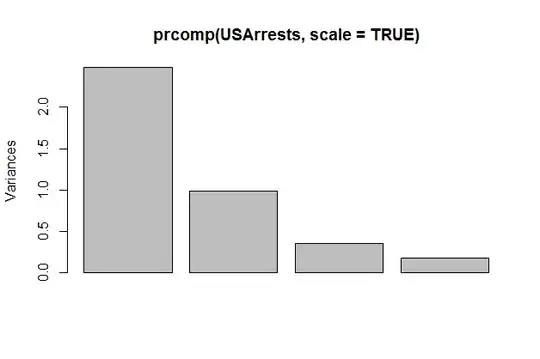

Normalization is important in PCA because PCA is a variance-maximizing exercise: it projects your original data onto the directions that maximize the variance. The first plot shows the proportion of total variance explained by each principal component when the data have not been normalized. As you can see, component one appears to explain most of the variance in the data.
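A minimal NumPy sketch of what these plots measure: the share of total variance captured by each principal component, taken from the eigenvalues of the covariance matrix, with and without standardizing the columns first. The data are synthetic (an assumption, not the dataset behind the plots), and the function name `explained_variance_ratio` is hypothetical.

```python
import numpy as np

def explained_variance_ratio(X, standardize=False):
    """Share of total variance captured by each principal component, largest first."""
    Xc = X - X.mean(axis=0)                   # PCA always centers the columns
    if standardize:
        Xc = Xc / Xc.std(axis=0, ddof=1)      # rescale each column to unit variance
    cov = np.cov(Xc, rowvar=False)            # sample covariance, columns = variables
    eigvals = np.linalg.eigvalsh(cov)[::-1]   # eigvalsh returns ascending order; reverse it
    return eigvals / eigvals.sum()

rng = np.random.default_rng(0)
# Hypothetical synthetic data: four independent columns, the second with a
# standard deviation 100 times larger than the others.
X = rng.normal(size=(200, 4)) * np.array([1.0, 100.0, 1.0, 1.0])

print("unnormalized:", explained_variance_ratio(X).round(3).tolist())
print("normalized:  ", explained_variance_ratio(X, standardize=True).round(3).tolist())
```

With one column on a much larger scale, the unnormalized ratios put nearly all of the variance on the first component; after standardization the variance spreads across all four components.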

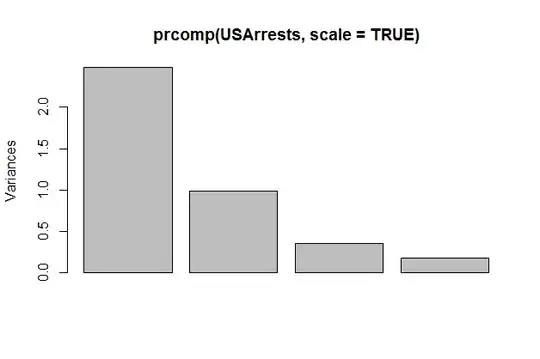

In the second plot, we have normalized the data first. Here it is clear that the other components contribute as well. This is because PCA seeks the directions of maximum variance, so without normalization the variables with the largest variances dominate. The covariance matrix of this particular dataset is:

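|              | Murder     | Assault   | UrbanPop   | Rape      |
|--------------|-----------:|----------:|-----------:|----------:|
| **Murder**   | 18.970465  | 291.0624  | 4.386204   | 22.99141  |
| **Assault**  | 291.062367 | 6945.1657 | 312.275102 | 519.26906 |
| **UrbanPop** | 4.386204   | 312.2751  | 209.518776 | 55.76808  |
| **Rape**     | 22.991412  | 519.2691  | 55.768082  | 87.72916  |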

Given this structure, PCA will project as much as possible onto the direction of Assault, because its variance (about 6945) is far larger than that of any other variable. So if you want features that are usable in any kind of model, PCA without normalization will typically perform worse than PCA with normalization, since its first component mostly just reflects the scale of Assault.
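To check this directly, here is a sketch that runs PCA on the covariance matrix above and on the correlation matrix derived from it; PCA on standardized variables is equivalent to PCA on the correlation matrix. It uses NumPy's `linalg.eigh`, and the helper name `pca_from_matrix` is hypothetical.

```python
import numpy as np

labels = ["Murder", "Assault", "UrbanPop", "Rape"]

# Rows of the covariance matrix as printed in the table above.
cov = np.array([
    [18.970465, 291.0624, 4.386204, 22.99141],
    [291.062367, 6945.1657, 312.275102, 519.26906],
    [4.386204, 312.2751, 209.518776, 55.76808],
    [22.991412, 519.2691, 55.768082, 87.72916],
])
# The printed table rounds mirrored entries differently; average them
# so the matrix is exactly symmetric.
cov = (cov + cov.T) / 2

# Standardizing every variable to unit variance turns the covariance matrix
# into the correlation matrix: corr_ij = cov_ij / (sd_i * sd_j).
sd = np.sqrt(np.diag(cov))
corr = cov / np.outer(sd, sd)

def pca_from_matrix(m):
    """Return (explained variance ratios, first principal axis) for a symmetric matrix."""
    eigvals, eigvecs = np.linalg.eigh(m)      # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1]         # reorder so the largest variance comes first
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    return eigvals / eigvals.sum(), eigvecs[:, 0]

cov_ratio, cov_pc1 = pca_from_matrix(cov)
corr_ratio, corr_pc1 = pca_from_matrix(corr)

for name, ratio, pc1 in [("unnormalized", cov_ratio, cov_pc1),
                         ("normalized", corr_ratio, corr_pc1)]:
    print(name, "explained variance:", ratio.round(3).tolist())
    # Eigenvector signs are arbitrary, so compare absolute loadings.
    print("  PC1 loadings:", dict(zip(labels, np.abs(pc1).round(3).tolist())))
```

The first component of the unnormalized PCA loads almost entirely on Assault, while the normalized (correlation-based) PCA spreads its first-component loadings across all four variables.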