In the face of an apparent paradox, it helps to resort to definitions and first principles. These are applied below to

1. describe the random variables $X,$ $Y,$ and $Z;$
2. compute the expectations needed for the correlation coefficient calculation;
3. compute the correlation coefficient itself.

It is all simple and straightforward. Those are precisely the characteristics of small problems like this that help illuminate basic concepts and procedures which otherwise can be tricky and counterintuitive.

By definition, a random variable (such as $X$, $Y$, or $Z$) is a real-valued measurable function defined on a probability space $(\Omega, \mathfrak{S}, p)$. In more colloquial language (as explained at What is meant by a "random variable"?), $\Omega$ is a box, its elements $\omega \in \Omega$ are tickets (slips of paper), $\mathfrak S$ stipulates which events can be assigned probabilities, and $p$ tells us the proportions of each kind of ticket in the box. A "measurable function" is merely a consistent way of writing numbers on the tickets.

Because the setting involves two distinct random variables $X$ and $Y$, each taking the values $0,$ $1,$ and $2,$ we begin by writing their values on the tickets. There are $3\times 3 = 9$ possible pairs $(X,Y)$, few enough to tabulate:

$$
\begin{array}{ccccccc}
X & Y & Z & XZ & X^2 & Z^2 & p\\
0 & 0 & 0 & 0 & 0 & 0 & p_0 q_0 \\
0 & 1 & 0 & 0 & 0 & 0 & p_0 q_1 \\
0 & 2 & 1 & 0 & 0 & 1 & p_0 q_2 \\
1 & 0 & 0 & 0 & 1 & 0 & p_1 q_0 \\
1 & 1 & 1 & 1 & 1 & 1 & p_1 q_1 \\
1 & 2 & 1 & 1 & 1 & 1 & p_1 q_2 \\
2 & 0 & 1 & 2 & 4 & 1 & p_2 q_0 \\
2 & 1 & 1 & 2 & 4 & 1 & p_2 q_1 \\
2 & 2 & 1 & 2 & 4 & 1 & p_2 q_2 \\
\end{array}
$$

Each row (beneath the header) describes one of the nine kinds of tickets. The $X$ column shows the value of $X$ written on each ticket and the $Y$ column shows the value of $Y$. The rest is deduced from the information given:

* $Z$ is computed according to its definition in terms of $X$ and $Y$ (as the table shows, $Z=1$ exactly when $X+Y\ge 2$, and $Z=0$ otherwise).
* $XZ$ is the product of $X$ and $Z$.
* $X^2$ and $Z^2$ are the squares of $X$ and $Z$, respectively.

These last three columns were computed in anticipation that they would be needed for calculating the correlation coefficient of $X$ and $Z$. The final column, $p$, gives the proportion of each kind of ticket, assuming $X$ and $Y$ are independent. Independence means the proportion of tickets where $(X,Y) = (i,j)$ is the product of the probabilities $\Pr(X=i) = p_i$ and $\Pr(Y=j) = q_j$.
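To make the box of tickets concrete, here is a minimal Python sketch that enumerates the nine kinds of tickets with their proportions. It assumes, consistently with the table, that $Z=1$ exactly when $X+Y\ge 2$; the helper names `z_of` and `tickets` are hypothetical, and exact `Fraction` arithmetic is used so that the proportions sum to exactly $1$.

```python
from fractions import Fraction
from itertools import product


def z_of(x, y):
    # Assumed rule, read off the table: Z = 1 exactly when X + Y >= 2.
    return 1 if x + y >= 2 else 0


def tickets(p, q):
    """List the nine kinds of tickets as (X, Y, Z, XZ, X^2, Z^2, proportion).

    p = (p0, p1, p2) and q = (q0, q1, q2) are the distributions of X and Y.
    Independence makes the proportion of tickets with (X, Y) = (i, j) equal p[i] * q[j].
    """
    rows = []
    for x, y in product(range(3), repeat=2):  # same order as the table: X outer, Y inner
        z = z_of(x, y)
        rows.append((x, y, z, x * z, x * x, z * z, p[x] * q[y]))
    return rows


# Example distribution: p1 = q1 = 0.18, p2 = q2 = 0.01 (so p0 = q0 = 0.81).
p = q = (Fraction(81, 100), Fraction(18, 100), Fraction(1, 100))
for row in tickets(p, q):
    print(*row)
```

Each printed line is one row of the table, with the symbolic proportion $p_i q_j$ replaced by its numerical value for this example.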

By definition, the expectation of a random variable is its average over the box, weighted according to the proportions. Thus the six expectations (simplified by using $p_0+p_1+p_2=1=q_0+q_1+q_2$ to eliminate $p_0$ and $q_0$) are

$$\eqalign{
\mathbb{E}[X] &= p_1 q_0+2 p_2 q_0+p_1 q_1+2 p_2 q_1+p_1 q_2+2 p_2 q_2 & = p_1 + 2p_2 \\
\mathbb{E}[Y] &= p_0 q_1+2 p_0 q_2+p_1 q_1+2 p_1 q_2+p_2 q_1+2 p_2 q_2 & = q_1 + 2q_2 \\
\mathbb{E}[Z] &= p_0 q_2+p_1 q_1+p_1 q_2+p_2 q_0+p_2 q_1+p_2 q_2 &= p_1 q_1-p_2 \left(q_2-1\right)+q_2 \\
\mathbb{E}[XZ] &= p_1 q_1+p_1 q_2+2 p_2 q_0+2 p_2 q_1+2 p_2 q_2 &= p_1 \left(q_1+q_2\right)+2 p_2 \\
\mathbb{E}[X^2] &= p_1 q_0+4 p_2 q_0+p_1 q_1+4 p_2 q_1+p_1 q_2+4 p_2 q_2&= p_1+4 p_2 \\
\mathbb{E}[Z^2] &= p_0 q_2+p_1 q_1+p_1 q_2+p_2 q_0+p_2 q_1+p_2 q_2 &= p_1 q_1-p_2 \left(q_2-1\right)+q_2.
}$$
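These expectations can be double-checked mechanically. The following sketch (hypothetical function names, and the same rule for $Z$ read off the table) computes each expectation as a weighted average over the nine tickets and compares it with the simplified right-hand sides. Exact rational arithmetic makes the comparison an equality rather than a floating-point approximation.

```python
from fractions import Fraction
from itertools import product


def expectations(p1, p2, q1, q2):
    """Weighted averages over the nine kinds of tickets (brute force)."""
    p = (1 - p1 - p2, p1, p2)  # p0 eliminated via p0 + p1 + p2 = 1
    q = (1 - q1 - q2, q1, q2)  # q0 eliminated via q0 + q1 + q2 = 1
    E = {key: 0 for key in ("X", "Y", "Z", "XZ", "X2", "Z2")}
    for x, y in product(range(3), repeat=2):
        z = 1 if x + y >= 2 else 0  # assumed rule for Z, matching the table
        w = p[x] * q[y]             # proportion of this kind of ticket
        E["X"] += w * x
        E["Y"] += w * y
        E["Z"] += w * z
        E["XZ"] += w * x * z
        E["X2"] += w * x * x
        E["Z2"] += w * z * z
    return E


def closed_forms(p1, p2, q1, q2):
    """The simplified right-hand sides of the six expectations."""
    ez = p1 * q1 - p2 * (q2 - 1) + q2
    return {"X": p1 + 2 * p2, "Y": q1 + 2 * q2, "Z": ez,
            "XZ": p1 * (q1 + q2) + 2 * p2, "X2": p1 + 4 * p2, "Z2": ez}


# Arbitrary valid probabilities, chosen only for illustration.
args = (Fraction(1, 5), Fraction(3, 10), Fraction(1, 7), Fraction(2, 9))
assert expectations(*args) == closed_forms(*args)
```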

The correlation coefficient is defined as

$$\rho_{X,Z} = \frac{\mathbb{E}[XZ] - \mathbb{E}[X]\mathbb{E}[Z]}{\sqrt{\mathbb{E}[X^2] - \mathbb{E}[X]^2}\sqrt{\mathbb{E}[Z^2] - \mathbb{E}[Z]^2}}.$$
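As a sketch, this definition transcribes directly into a function of the five expectations it uses (the name `correlation` is hypothetical). The example inputs are the values the simplified formulas give for $p_1=q_1=0.18,$ $p_2=q_2=0.01$.

```python
import math


def correlation(ex, ez, exz, ex2, ez2):
    """rho_{X,Z} computed term by term from the defining formula."""
    cov = exz - ex * ez        # E[XZ] - E[X] E[Z]
    var_x = ex2 - ex ** 2      # E[X^2] - E[X]^2
    var_z = ez2 - ez ** 2      # E[Z^2] - E[Z]^2
    return cov / (math.sqrt(var_x) * math.sqrt(var_z))


# Expectations for p1 = q1 = 0.18, p2 = q2 = 0.01, from the simplified formulas:
# E[X] = 0.2, E[Z] = E[Z^2] = 0.0523, E[XZ] = 0.0542, E[X^2] = 0.22.
print(correlation(0.2, 0.0523, 0.0542, 0.22, 0.0523))
```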

The rest is arithmetic (shown below for completeness).

Plugging in the preceding values produces

$$\frac{-\left(p_1+2 p_2\right) \left(p_2 \left(-\left(q_2-1\right)\right)+p_1 q_1+q_2\right)+p_1 \left(q_1+q_2\right)+2 p_2}{\sqrt{\left(-\left(p_1+2 p_2\right){}^2+p_1+4 p_2\right) \left(-\left(p_2 \left(-\left(q_2-1\right)\right)+p_1 q_1+q_2\right){}^2-p_2 \left(q_2-1\right)+p_1 q_1+q_2\right)}}$$

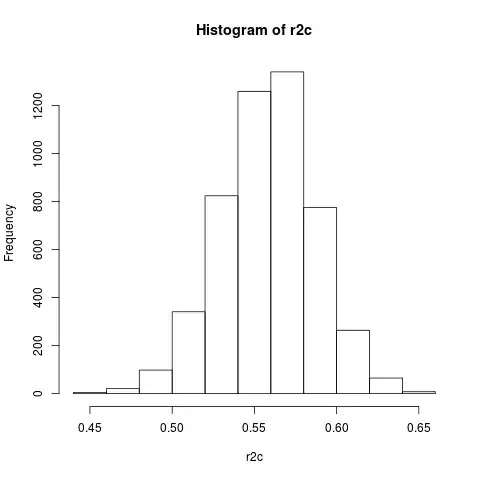

for the correlation coefficient. It can range anywhere from $0$ (approached in the limit as $p_1=p_2=1/2$ and $q_1=q_2=1/2-e^2$ for which it equals $\frac{e}{\sqrt{1-e^2}} \to 0$ as $e\to 0$) through $1$ (set $p_1=0, p_2=1/2, q_1=0, q_2=0$ for instance). Setting (as in the code of the question) $p_1 =q_1= 0.18, p_2 =q_2= 0.01$ gives $\rho_{X,Z} = 0.46308$ and $p_1= q_1 = 0.32, p_2=q_2 = 0.04$ gives $\rho_{X,Z} = 0.564433.$
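The numerical claims can be reproduced with a short sketch that plugs the simplified expectations into the definition. It uses $\operatorname{Var}(Z)=\mathbb{E}[Z](1-\mathbb{E}[Z])$, which holds because $Z$ takes only the values $0$ and $1$ (so $Z^2=Z$); `rho_xz` is a hypothetical name.

```python
import math


def rho_xz(p1, p2, q1, q2):
    """Correlation of X and Z from the simplified expectations (p0, q0 are implied)."""
    ex = p1 + 2 * p2
    ez = p1 * q1 - p2 * (q2 - 1) + q2   # also equals E[Z^2], because Z^2 = Z
    exz = p1 * (q1 + q2) + 2 * p2
    var_x = p1 + 4 * p2 - ex ** 2
    var_z = ez - ez ** 2                 # E[Z](1 - E[Z])
    return (exz - ex * ez) / math.sqrt(var_x * var_z)


print(rho_xz(0.18, 0.01, 0.18, 0.01))  # settings from the question's code
print(rho_xz(0.32, 0.04, 0.32, 0.04))
print(rho_xz(0, 0.5, 0, 0))            # an extreme case where rho = 1
for e in (0.1, 0.01, 0.001):           # rho = e / sqrt(1 - e^2), shrinking toward 0
    print(e, rho_xz(0.5, 0.5, 0.5 - e**2, 0.5 - e**2), e / math.sqrt(1 - e**2))
```

In the loop, the last two columns should agree, illustrating how the correlation can be made arbitrarily close to $0$.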