I'm trying to understand the effects of the explanatory variables of my logit regression.

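| | Estimate | Std. Error | z value | Pr(>\|z\|) | Signif. |
|---|---|---|---|---|---|
| (Intercept) | -6.7909619 | 0.0938448 | -72.364 | < 2e-16 | *** |
| level | 0.0755949 | 0.0192928 | 3.918 | 8.92e-05 | *** |
| building.count | 0.0697849 | 0.0091334 | 7.641 | 2.16e-14 | *** |
| gold.spent | 0.0019825 | 0.0001794 | 11.051 | < 2e-16 | *** |
| npc | 0.0171680 | 0.0056615 | 3.032 | 0.00243 | ** |
| friends | 0.0304137 | 0.0044568 | 6.824 | 8.85e-12 | *** |
| post.count | -0.0132424 | 0.0041761 | -3.171 | 0.00152 | ** |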

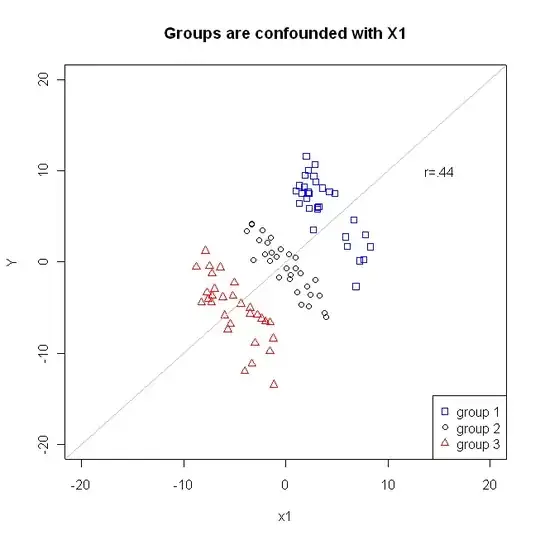

But when I look at the raw data, I don't understand why post.count negatively affects my outcome. post.count and my dependent variable (revenue.all.time) also have a small but positive correlation:

> with(sn, cor(post.count, revenue.all.time))

[1] 0.009806015

I also checked the correlations between post.count variable and the others:

> with(sn, cor(post.count, gold.spent))

[1] 0.296514

> with(sn, cor(post.count, level))

[1] 0.4289456

> with(sn, cor(post.count, building.count))

[1] 0.4140521

> with(sn, cor(post.count, npc))

[1] 0.3370106

> with(sn, cor(post.count, friends))

[1] 0.007695264

but they're all positive as well. So why does my model give post.count a negative coefficient?
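For reference, the pairwise checks above can be collapsed into a single correlation matrix. This is only a minimal sketch: `sn_demo` is a hypothetical, simulated stand-in for my real `sn` data frame (the values are made up so the snippet runs on its own), and only the column names match my data.

```r
# Hypothetical stand-in for the real `sn` data frame (simulated values only),
# so the snippet runs on its own; swap in the actual data to reproduce the checks.
set.seed(1)
n <- 200
sn_demo <- data.frame(
  level          = rpois(n, 10),
  building.count = rpois(n, 20),
  gold.spent     = rexp(n, 1 / 100),
  npc            = rpois(n, 15),
  friends        = rpois(n, 5),
  post.count     = rpois(n, 8)
)

# One call gives every pairwise Pearson correlation at once.
cor_mat <- cor(sn_demo)

# Correlations of post.count with each of the other predictors.
round(cor_mat["post.count", colnames(cor_mat) != "post.count"], 3)
```

With the real data, `cor(sn[, c("level", "building.count", "gold.spent", "npc", "friends", "post.count")])` should give the same numbers as the individual `with(sn, cor(...))` calls.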

Thanks,