I am working on a binary classification problem with an imbalanced dataset of 977 records (77:23 class ratio). My label 1 (POS not met) is the minority class.

Currently, without any oversampling or undersampling (as it is not recommended), but using the `class_weight` parameter, I get the performance below:

My ROC AUC score is 0.8024156371012354.

Based on these results, my model still seems to perform poorly on POS not met, which is label 1.

However, my AUC is about 0.80, which seems like a decent figure.

My questions are as follows:

a) Setting aside the business decision to keep or reject the model, how do I know whether my model is performing well, based on the above metrics alone?

I read that AUC measures the model's ability to discriminate between the positive and negative classes. **Does that mean my model's decision threshold should be 0.8?**

My model seems good at discriminating between positive and negative cases, yet it is poor at identifying not met as not met (recall is only 60%). My dataset is also imbalanced. Does AUC still apply?
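A minimal sketch of why AUC and the decision threshold are separate quantities, assuming scikit-learn and predicted probabilities for class 1. The labels and scores are hypothetical toy values, not the 977-record dataset. `roc_auc_score` depends only on how the scores rank positives against negatives, while recall for class 1 changes with whatever cut-off is applied. Youden's J is shown only as one common way to pick a cut-off from the ROC curve, not necessarily the right one for this problem.

```python
import numpy as np
from sklearn.metrics import recall_score, roc_auc_score, roc_curve

# Hypothetical toy labels and predicted probabilities for class 1 (POS not met).
# In practice these would come from something like model.predict_proba(X)[:, 1].
y_true = np.array([0, 0, 0, 0, 0, 0, 0, 1, 1, 1])
y_score = np.array([0.05, 0.10, 0.15, 0.20, 0.30, 0.35, 0.45, 0.40, 0.60, 0.70])

# AUC is computed from the ranking of the scores; no threshold is involved.
auc = roc_auc_score(y_true, y_score)

# Recall for class 1, by contrast, depends on the threshold you choose.
for t in (0.3, 0.5, 0.8):
    y_pred = (y_score >= t).astype(int)
    print(f"threshold={t}: recall={recall_score(y_true, y_pred):.2f}")

# One common (not the only) way to pick a threshold from the ROC curve:
# maximise Youden's J statistic, J = TPR - FPR.
fpr, tpr, thresholds = roc_curve(y_true, y_score)
best_t = thresholds[np.argmax(tpr - fpr)]
print(f"AUC={auc:.3f}, Youden threshold={best_t:.2f}")
```

Here the AUC is about 0.95 while the chosen cut-off is 0.40, which illustrates that the AUC value is not itself a threshold.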

b) Is my dataset imbalanced in the first place?

What counts as imbalanced: 1:99, 10:90, 20:80, etc.?

Is there a measure (like a correlation coefficient) that indicates the level of imbalance?
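As a rough illustration (there is no universally agreed cut-off for "imbalanced"), two simple descriptive measures are the imbalance ratio (majority count divided by minority count) and the normalised Shannon entropy of the class distribution, where 1.0 means perfectly balanced. This sketch assumes class counts of 752 and 225, which only approximate 77:23 over 977 records; substitute the real labels.

```python
import math
from collections import Counter

# Hypothetical counts approximating a 77:23 split of 977 records (an assumption).
y = [0] * 752 + [1] * 225

counts = Counter(y)
majority, minority = max(counts.values()), min(counts.values())

# Imbalance ratio: 1.0 means balanced; larger values mean more imbalance.
imbalance_ratio = majority / minority

# Normalised Shannon entropy ("balance"): 1.0 = balanced, close to 0 = extreme.
n = len(y)
k = len(counts)
entropy = -sum((c / n) * math.log(c / n) for c in counts.values())
balance = entropy / math.log(k)

print(f"imbalance ratio = {imbalance_ratio:.2f}, balance = {balance:.2f}")
```

With these counts the ratio is about 3.3 and the balance about 0.78. Both are descriptive numbers only; whether that level of imbalance matters depends on the model and the metric being optimised.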

c) Based on the matrix above, how should I interpret the F1-score, recall, and AUC together?

What does a high AUC but a poor F1-score mean?
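A minimal sketch of how a high AUC and a low F1-score can coexist, assuming scikit-learn and hypothetical toy scores. AUC summarises ranking quality over all thresholds, whereas F1 is measured at a single threshold (for many scikit-learn classifiers, `predict` effectively uses 0.5 on the predicted probability). The toy positives are ranked above almost every negative, but most of their scores fall below 0.5.

```python
import numpy as np
from sklearn.metrics import f1_score, roc_auc_score

# Hypothetical toy data: 12 negatives and 4 positives (minority class 1).
y_true = np.array([0] * 12 + [1] * 4)
y_score = np.array([0.02, 0.04, 0.06, 0.08, 0.10, 0.12, 0.14, 0.16,
                    0.18, 0.20, 0.22, 0.30,   # negatives
                    0.28, 0.32, 0.45, 0.60])  # positives

# Threshold-free ranking quality.
auc = roc_auc_score(y_true, y_score)

# F1 for class 1 at the default-style cut-off and at a lower one.
f1_default = f1_score(y_true, (y_score >= 0.5).astype(int))
f1_lower = f1_score(y_true, (y_score >= 0.25).astype(int))

print(f"AUC={auc:.3f}  F1@0.5={f1_default:.3f}  F1@0.25={f1_lower:.3f}")
```

In this toy case the AUC is about 0.98, F1 at 0.5 is 0.40, and F1 at 0.25 is about 0.89. A high AUC with a poor F1 therefore often points to a poorly placed threshold (or poorly calibrated scores) rather than to a model that cannot separate the classes.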

Update:

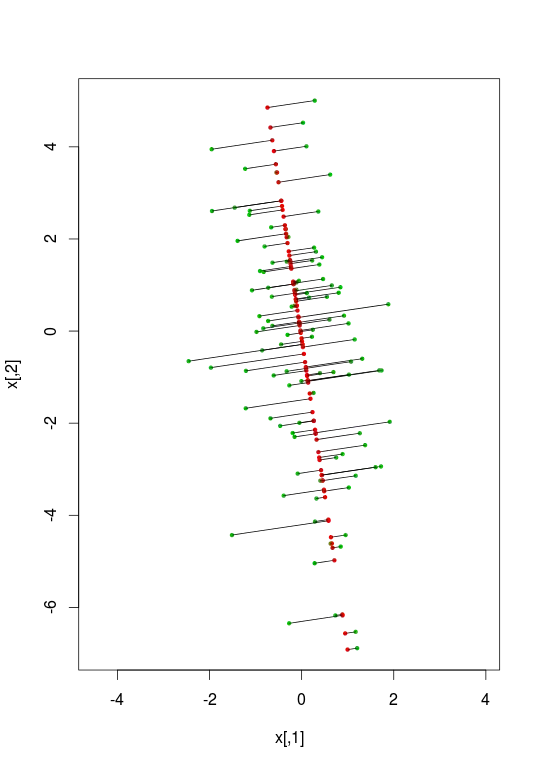

I used the code below (found online) to find the best F1-score across different thresholds:
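A minimal sketch of that approach, which is not necessarily the code the post refers to, assuming scikit-learn: compute F1 at every threshold returned by `precision_recall_curve` and take the maximum. `best_f1_threshold` is a hypothetical helper name, and the data are toy values.

```python
import numpy as np
from sklearn.metrics import precision_recall_curve


def best_f1_threshold(y_true, y_score):
    """Return (threshold, F1) maximising F1 over the PR-curve thresholds."""
    precision, recall, thresholds = precision_recall_curve(y_true, y_score)
    # precision and recall have one more element than thresholds (the final
    # point has no threshold), so drop it to keep the arrays aligned.
    precision, recall = precision[:-1], recall[:-1]
    denom = precision + recall
    # Guard against 0/0 where precision and recall are both zero.
    f1 = np.divide(2 * precision * recall, denom,
                   out=np.zeros_like(denom), where=denom > 0)
    i = int(np.argmax(f1))
    return thresholds[i], f1[i]


# Hypothetical toy data: 12 negatives and 4 positives.
y_true = np.array([0] * 12 + [1] * 4)
y_score = np.array([0.02, 0.04, 0.06, 0.08, 0.10, 0.12, 0.14, 0.16,
                    0.18, 0.20, 0.22, 0.30, 0.28, 0.32, 0.45, 0.60])

best_t, best_f1 = best_f1_threshold(y_true, y_score)
print(f"best threshold={best_t:.2f}, F1={best_f1:.3f}")
```

Ideally the threshold is chosen on a validation split (or via cross-validation) rather than on the test set used to report final metrics, otherwise the reported F1 will be optimistic.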