I've been working on a random forest model for credit scoring in R. I trained the model using `caret::train`.

My data, `df_samples_rf`, has the following structure:

```r
library(caret)

control <- trainControl(method = "repeatedcv",
                        number = 10,
                        repeats = 5,
                        search = "grid",
                        classProbs = TRUE,
                        savePredictions = TRUE,
                        summaryFunction = twoClassSummary,
                        allowParallel = TRUE)
```

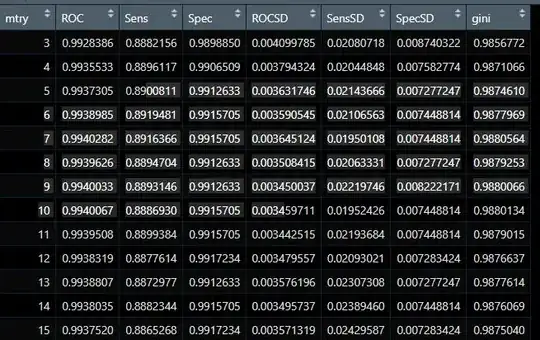

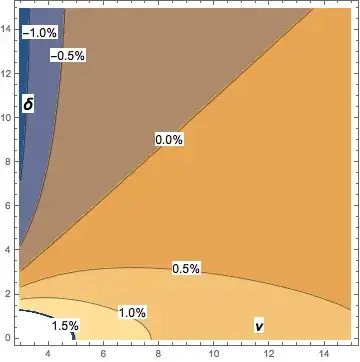

```r
# Grid of mtry values to evaluate
tune_grid <- expand.grid(.mtry = 3:15)

rf_gridsearch <- NULL

rf_gridsearch <- train(bad ~ .,
                       data = df_samples_rf[-27],
                       method = "rf",
                       metric = "ROC",
                       tuneGrid = tune_grid,
                       trControl = control)
```

So I assumed the model would perform well.

Then I predict on new data like this:

```r
rf_predictions <- predict(rf_gridsearch$finalModel,
                          newdata = df_no_yes)
```

`df_no_yes` has exactly the same structure and column classes as `df_samples_rf`; the outcome variable `bad` also has the same class and factor levels.
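The claim that `df_no_yes` matches `df_samples_rf` can be checked programmatically rather than by eye; a column stored as integer in one set and numeric in the other, or a factor whose levels differ (for example, a level absent from the new data), is easy to miss. A minimal sketch in base R, where `compare_structure()`, `train_toy` and `new_toy` are hypothetical names used only for illustration:

```r
# Hypothetical helper (not part of caret): list the columns whose class or
# factor levels differ between the training data and the prediction data.
compare_structure <- function(train_df, new_df) {
  missing_cols <- setdiff(names(train_df), names(new_df))
  if (length(missing_cols) > 0) {
    stop("Columns missing from new data: ", paste(missing_cols, collapse = ", "))
  }
  mismatches <- character(0)
  for (col in names(train_df)) {
    a <- train_df[[col]]
    b <- new_df[[col]]
    if (!identical(class(a), class(b))) {
      mismatches <- c(mismatches, paste0(col, ": class differs"))
    } else if (is.factor(a) && !identical(levels(a), levels(b))) {
      mismatches <- c(mismatches, paste0(col, ": factor levels differ"))
    }
  }
  mismatches
}

# Toy data standing in for the real data frames (hypothetical values)
train_toy <- data.frame(bad = factor(c("no", "yes")), x = c(1, 2))
new_toy   <- data.frame(bad = factor(c("yes", "yes")), x = c(3L, 4L))

compare_structure(train_toy, new_toy)
# returns c("bad: factor levels differ", "x: class differs")
```

With the real data, the call would be `compare_structure(df_samples_rf[-27], df_no_yes)`, mirroring the `[-27]` column drop used in `train()`; an empty result means every training column agrees in class and levels.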

```r
results <- data.frame(real = df_no_yes$bad,
                      rf_predictions)
```

Then I compute a confusion matrix and get:

```r
confusionMatrix(results$rf_predictions, results$real)
```

Why does `caret::train` report good ROC-AUC and classification metrics during cross-validation, yet I get such poor results when predicting on a new dataset? Am I doing something wrong, or applying `predict` incorrectly?