I'm interested in the moments of a given draw, $X_t$, of a time series conditional on the knowledge that all other draws within some window before and after $t$ were below a fixed threshold, $c$. For example, I might want the expectation of $X_{80}$ given that $X_{70}, \cdots, X_{90}$ were all below $c$.

To make things concrete and simple, let's suppose the time series arises from a basic AR(1) Gaussian process with $|\phi|<1$:

$$X_t = \mu + \phi X_{t-1}+\epsilon_t$$ $$\epsilon_t \sim N(0, \sigma^2_\epsilon)$$

Let's consider the expectation when conditioning on what I'll call "truncated knowledge" about observations within a window around $t$ defined by $a \in \mathbb{N}^+$, $b \in \mathbb{N}^+$:

$$E \big[ X_t \; | \; X_{j} < c \; \forall \; j \in \{ t-a, \cdots, t+b \} \big]$$
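One way to make this quantity explicit is sketched here, assuming the process has already reached its stationary distribution (an assumption not stated above; the symbols $n$, $m$, $\Sigma$, and $f$ are introduced only for illustration). Under that assumption the window vector is jointly Gaussian, so the target is one coordinate of the mean of a multivariate normal truncated to an orthant:

$$\mathbf{X} = (X_{t-a}, \ldots, X_{t+b})^\top \sim N_n(m\mathbf{1}, \Sigma), \qquad n = a+b+1, \qquad m = \frac{\mu}{1-\phi}, \qquad \Sigma_{ij} = \frac{\sigma^2_\epsilon}{1-\phi^2}\,\phi^{|i-j|}$$

$$E \big[ X_t \; | \; X_{j} < c \; \forall \; j \in \{ t-a, \cdots, t+b \} \big] = \frac{\int_{(-\infty, c)^n} x_{a+1} \, f(\mathbf{x}; m\mathbf{1}, \Sigma) \, d\mathbf{x}}{\int_{(-\infty, c)^n} f(\mathbf{x}; m\mathbf{1}, \Sigma) \, d\mathbf{x}}$$

Here $f$ denotes the $n$-variate normal density and $x_{a+1}$ is the coordinate corresponding to $X_t$. The denominator is the orthant probability $P(X_j < c \; \forall \; j)$, which in general has no elementary closed form for $n > 1$.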

Has this expectation been worked out theoretically for the simple AR(1) process above or a similar process? If not, is there a straightforward way to do so?

A little more nuance

This problem has a few interesting gnarls. For example, even though this simple stochastic process is causal (i.e., $X_t$ depends only on past values, not future values), when our knowledge about the other observations is truncated, we probably cannot drop the conditioning on future values: knowing that later draws stayed below $c$ is still informative about $X_t$. Also, it's interesting to note that the process's strict stationarity does not imply that the conditional expectation is constant in $t$ when the conditioning window is held fixed in absolute time and $t$ varies within it, rather than the window $[t-a, t+b]$ moving with $t$.
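The point about future values can be checked with a small Monte Carlo sketch. This is only an illustration in base R: the window sizes, the number of series, the seed, and all variable names are assumptions chosen for the example, and the process is started from its stationary distribution with $\mu = 0$. It compares the mean of $X_t$ when conditioning on the past half of the window only against conditioning on the full window.

```r
# Sketch: does conditioning on future draws being below c change E[X_t]?
# All values below are illustrative assumptions, not part of the original setup.
set.seed(1)
phi      <- 0.9     # AR(1) coefficient (mu = 0 assumed)
sigma2   <- 0.1     # innovation variance
c_thr    <- 1.18    # threshold c
a        <- 10      # number of draws before t in the window
b        <- 10      # number of draws after t in the window
n_series <- 50000   # number of simulated windows

# Simulate n_series windows of length a + b + 1, one per row,
# starting each from the stationary distribution N(0, sigma2 / (1 - phi^2)).
w <- a + b + 1
X <- matrix(NA_real_, nrow = n_series, ncol = w)
X[, 1] <- rnorm(n_series, 0, sqrt(sigma2 / (1 - phi^2)))
for (j in 2:w) {
  X[, j] <- phi * X[, j - 1] + rnorm(n_series, 0, sqrt(sigma2))
}

t_idx <- a + 1                 # column holding X_t
below <- X < c_thr             # TRUE where a draw is below the threshold

# Rows where X_{t-a}, ..., X_t are all below c (past and present only)
past_ok <- rowSums(!below[, 1:t_idx, drop = FALSE]) == 0
# Rows where the whole window X_{t-a}, ..., X_{t+b} is below c
all_ok  <- rowSums(!below) == 0

mean_past <- mean(X[past_ok, t_idx])
mean_all  <- mean(X[all_ok, t_idx])
cat("E[X_t | past window < c]: ", round(mean_past, 3), "\n")
cat("E[X_t | full window < c]: ", round(mean_all, 3), "\n")
```

If the two estimates differ by more than Monte Carlo noise, the future part of the window carries information about $X_t$ and cannot be dropped from the conditioning set.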

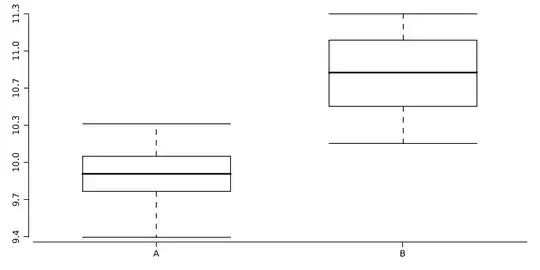

Empirically, the behavior of the conditional expectation given above is pretty interesting and clearly is not constant in $t$. The plot shows, for each $t$, the sample mean of $X_t$ across 5,000 simulated time series. Each time series was from the same underlying AR(1) process with $\phi=0.9$ and errors $\epsilon_{t} \sim N(0, 0.1)$, i.e., $\sigma^2_\epsilon = 0.1$. Each time series had 100 simulated draws; I discarded the draws before $t = 50$ as a warmup. The plot distinguishes "always small" time series (i.e., $X_t < 1.18$ for all $t \in \{ 50, \cdots, 100\}$, where 1.18 is the median of the per-series maxima, so that 50% of the time series are always small) from time series in which at least one draw was not "small".
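As a rough scale check, assuming $\mu = 0$ and reading $N(0, 0.1)$ as a variance of 0.1 (both are assumptions about the setup), the stationary marginal standard deviation can be worked out. It puts the threshold of 1.18 at roughly 1.6 marginal standard deviations above the mean:

$$\mathrm{Var}(X_t) = \frac{\sigma^2_\epsilon}{1-\phi^2} = \frac{0.1}{1-0.81} \approx 0.526, \qquad \mathrm{SD}(X_t) \approx 0.725, \qquad \frac{1.18}{0.725} \approx 1.63$$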

Intuitively, the behavior in the plot would seem to reflect that "always small" time series are typically those that happened to follow an initially decreasing trajectory, because that would reduce the chance that the series ever climbed high enough to produce a non-small draw. Later in the series, the mean of $X_t$ begins to increase, such that the mean of $X_{100}$ is close to that of the first retained draw, $X_{50}$. Presumably this is regression to the mean.

```r
library(tidyverse)  # loads dplyr and ggplot2
library(simts)

# number of time series to simulate
k = 5000

# number of draws in each series
draws = 100

# simulate Gaussian AR(1)'s with autocorrelation = 0.9 and errors ~ N(0, 0.1)
# (build a list and bind once, rather than growing a data frame in a loop)
d = bind_rows( lapply( 1:k, function(i) {
  data.frame( yi = as.numeric( gen_gts( draws, AR1(phi = 0.9, sigma2 = 0.1) ) ),
              iterate = i,
              draw.index = 1:draws )
} ) )

# avoid any asymptotic issues by discarding warmup draws (keeps draws 50 to 100)
d2 = d %>% filter(draw.index >= 50) %>%
  group_by(iterate) %>%
  mutate(max.yi = max(yi)) %>%
  ungroup()

# classify half of time series as "always small" based on median of max yi
threshold = median(d2$max.yi)
d2$always.small = d2$max.yi < threshold

# conditional non-stationarity: mean and SD of yi at each draw index, by group
agg2 = d2 %>% group_by(always.small, draw.index) %>%
  summarise( Mean = mean(yi),
             SD = sd(yi),
             .groups = "drop" )

ggplot( data = agg2,
        aes(x = draw.index,
            y = Mean,
            color = always.small) ) +
  geom_line() +
  theme_bw()
```
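As a cross-check on the shape of the "always small" curve, here is a minimal base-R sketch that does not depend on `simts`. It is not the simulation above: it starts each series from the stationary distribution instead of using a warmup, uses more series to reduce noise, and all names (`n_keep`, `profile`, `mid`) and the seed are assumptions made for the example.

```r
# Sketch: mean of X_t by position for "always small" series, base R only.
# Parameter values mirror the post where possible; the rest are assumptions.
set.seed(2)
phi      <- 0.9     # AR(1) coefficient (mu = 0 assumed)
sigma2   <- 0.1     # innovation variance
n_series <- 20000   # more series than in the post, to reduce Monte Carlo noise
n_keep   <- 51      # number of retained draws, matching t = 50, ..., 100

# One series per row, started from the stationary distribution
X <- matrix(NA_real_, nrow = n_series, ncol = n_keep)
X[, 1] <- rnorm(n_series, 0, sqrt(sigma2 / (1 - phi^2)))
for (j in 2:n_keep) {
  X[, j] <- phi * X[, j - 1] + rnorm(n_series, 0, sqrt(sigma2))
}

# Classify half of the series as "always small" via the median of the row maxima
row_max      <- apply(X, 1, max)
threshold    <- median(row_max)
always_small <- row_max < threshold

# Conditional mean of X_t at each position within the fixed window
profile <- colMeans(X[always_small, , drop = FALSE])
mid     <- (n_keep + 1) / 2

cat("threshold:", round(threshold, 3), "\n")
print(round(profile[c(1, mid, n_keep)], 3))  # first, middle, last position
```

Comparing the first, middle, and last entries of `profile` gives a quick numerical read on whether the conditional mean dips in the interior of the window and recovers toward both ends.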