The conventional wisdom is that a PCA transformation can cure multicollinearity. When I put this into practice on example data, I end up confused. In the following case, applying PCA seems to have made the multicollinearity (as measured by VIF) much worse!

library(regclass)

library(car)

library(tidymodels)

data("WINE")

## Drop the rating variable (first column)

data<-WINE[,-1]

vif(lm(alcohol~.,data=data))

By the conservative benchmark that a VIF of 2 or greater signals multicollinearity that needs to be dealt with, something has to be done here.
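To be explicit about what I mean by VIF: for predictor j it is 1 / (1 - R_j^2), where R_j^2 comes from regressing predictor j on all the other predictors. Below is a minimal sketch of that calculation in base R. The helper name `vif_by_hand` is hypothetical, and I use a few columns of the built-in `mtcars` data rather than WINE so that it runs without extra packages.

```r
# Hypothetical helper: VIF computed directly from its definition.
# Illustrated on the built-in mtcars data, not the WINE data.
vif_by_hand <- function(predictors) {
  sapply(names(predictors), function(v) {
    # Regress predictor v on all the other predictors.
    others <- setdiff(names(predictors), v)
    fit <- lm(reformulate(others, response = v), data = predictors)
    # VIF = 1 / (1 - R^2) of that auxiliary regression.
    1 / (1 - summary(fit)$r.squared)
  })
}

X <- mtcars[, c("disp", "hp", "wt", "qsec")]
vif_by_hand(X)
```

As far as I understand, this should agree with what `car::vif()` reports for a linear model with these predictors, since the VIF depends only on the predictors and not on the response.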

Let's apply PCA.

## 3 variables with VIF over 2; I would like to remove that multicollinearity. Let's try PCA.

withpca<-recipe(alcohol~.,data=data) %>% step_pca(all_predictors()) %>% prep() %>% juice()

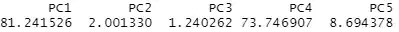

vif(lm(alcohol~.,data=withpca))

## Multicollinearity is now much worse!
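For reference, here is what I expected to see, sketched with base R's `prcomp` on the built-in `mtcars` data rather than with the recipe above. The column choice and the helper `vif_from_matrix` are hypothetical and only for illustration. When the predictors are centered before the rotation, the scores are mutually uncorrelated, so every VIF should be 1. Without centering, the scores are orthogonal about the origin but can still be correlated once the model includes an intercept. I believe `step_pca()` does not center or scale unless told to, but I have not verified that this is what is happening with my data.

```r
# Illustration on the built-in mtcars data (not the WINE data).
X <- as.matrix(mtcars[, c("disp", "hp", "wt", "qsec")])

# Hypothetical helper: the VIFs of the columns of m are the diagonal
# of the inverse of their correlation matrix.
vif_from_matrix <- function(m) diag(solve(cor(m)))

# PCA on centered and scaled predictors: the scores are uncorrelated.
scores_centered <- prcomp(X, center = TRUE, scale. = TRUE)$x

# PCA without centering or scaling: the scores are orthogonal about
# the origin, but not necessarily uncorrelated.
scores_raw <- prcomp(X, center = FALSE, scale. = FALSE)$x

round(vif_from_matrix(scores_centered), 3)
round(vif_from_matrix(scores_raw), 3)
```

If the same contrast shows up with the recipe, adding a normalization step before `step_pca()` would be the obvious thing to try, though that is an assumption on my part.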

What has gone wrong? The VIFs for PC1 and PC4 are now astronomical! Can someone explain whether I've misunderstood the conventional wisdom about PCA and multicollinearity or whether that conventional wisdom is just wrong?