I am working in R and trying to fit a neural network with TensorFlow and Keras. In general, I am not satisfied with the results from the model.

I tried to fit the following sequential model:

```r
library(keras)

model <- keras_model_sequential()

# Add layers to the model
model %>%
  layer_dense(units = 512, activation = 'relu', input_shape = dim(train_data_tf)[2]) %>%
  layer_dropout(rate = 0.5) %>%
  layer_dense(units = 256, activation = 'linear') %>%
  layer_dropout(rate = 0.5) %>%
  layer_dense(units = 128, activation = 'linear') %>%
  layer_dropout(rate = 0.5) %>%
  layer_dense(units = 64, activation = 'linear') %>%
  layer_dropout(rate = 0.5) %>%
  layer_dense(units = 32, activation = 'linear') %>%
  layer_dropout(rate = 0.5) %>%
  layer_dense(units = 16, activation = 'linear') %>%
  layer_dropout(rate = 0.5) %>%
  layer_dense(units = 1)   # single linear output unit for regression

summary(model)
```

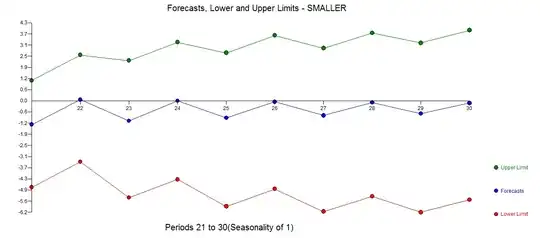
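For reference, a dense layer with `activation = 'linear'` computes an affine map, and composing two affine maps gives another affine map. The base-R snippet below checks this numerically with hypothetical random weights, using the column-vector convention (Keras multiplies row vectors by the kernel, which is the same thing up to transposition). Since dropout is inactive at prediction time, this suggests that the stack after the first `relu` layer in the model above behaves, at inference, like a single linear layer.

```r
set.seed(1)

# Hypothetical weights standing in for two stacked 'linear' dense layers
W1 <- matrix(rnorm(4 * 3), nrow = 4)  # 3 inputs -> 4 units
b1 <- rnorm(4)
W2 <- matrix(rnorm(2 * 4), nrow = 2)  # 4 units -> 2 units
b2 <- rnorm(2)
x  <- rnorm(3)                        # one input example

# Output of layer 2 applied to the output of layer 1
two_layers <- W2 %*% (W1 %*% x + b1) + b2

# The same result from a single merged affine layer
W <- W2 %*% W1
b <- W2 %*% b1 + b2
one_layer <- W %*% x + b

all.equal(two_layers, one_layer)
# [1] TRUE
```

The same argument extends, one layer at a time, to all five stacked linear layers in the model.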

I also used `optimizer = optimizer_rmsprop(lr = 0.001)`. The forecasting results are shown in the next picture: the red dots are the original values, while the black ones are the projections. Any suggestions on how I can tune the parameters of this model to get better projections?
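The compile and fit steps are not shown in the question. A minimal sketch of where `optimizer_rmsprop()` plugs in is given below, assuming the `keras` R package (2.x API). The synthetic data, `train_labels`, the small two-layer model, and the callback settings (`patience`, `factor`, `epochs`, `batch_size`) are hypothetical placeholders chosen only so the sketch runs on its own; they are not the settings from the question. Recent versions of the package name the optimizer argument `learning_rate`; `lr` is the older, deprecated spelling.

```r
library(keras)

set.seed(123)

# Hypothetical stand-in data so the sketch is self-contained;
# replace with the real train_data_tf and its targets.
train_data_tf <- matrix(rnorm(500 * 8), ncol = 8)
train_labels  <- as.numeric(train_data_tf %*% runif(8) + rnorm(500, sd = 0.1))

# Hypothetical small model, only to have something to compile
model <- keras_model_sequential() %>%
  layer_dense(units = 32, activation = "relu",
              input_shape = dim(train_data_tf)[2]) %>%
  layer_dense(units = 1)

# RMSprop with the same learning rate as in the question
model %>% compile(
  optimizer = optimizer_rmsprop(learning_rate = 0.001),
  loss = "mse",
  metrics = c("mae")
)

# Stop when validation loss stops improving and keep the best weights
early_stop <- callback_early_stopping(monitor = "val_loss", patience = 20,
                                      restore_best_weights = TRUE)

# Halve the learning rate when validation loss plateaus
reduce_lr <- callback_reduce_lr_on_plateau(monitor = "val_loss",
                                           factor = 0.5, patience = 10)

history <- model %>% fit(
  x = train_data_tf,
  y = train_labels,
  epochs = 200,
  batch_size = 32,
  validation_split = 0.2,   # hold out the last 20% of rows for validation
  callbacks = list(early_stop, reduce_lr),
  verbose = 0
)
```

Calling `plot(history)` afterwards shows training versus validation loss, which helps judge whether a change to the learning rate, dropout rate, or layer sizes improves generalization rather than only the training fit.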