As others have suggested, automated methods will almost always return some value for the optimal number of clusters, k, but review by a human with subject matter expertise is still needed to make sure the result is meaningful. For example, when the data do not separate well into discrete clusters, these algorithms will still return a value. It often takes a human looking at the diagnostic plots to judge whether that k is meaningfully better than nearby solutions, and whether prior knowledge about the dataset can suggest an appropriate value, or at least bounds on where k is likely to be.

Furthermore, there are many valid ways to quantify cluster separation and cohesion, and they don't always agree. Choosing the distance metric and the criterion that defines the 'optimal' value of k is nuanced and requires careful thought about the specific data and problem you're working on.
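To make the disagreement concrete, here is a minimal sketch that scores the same hierarchical clustering (Euclidean distance, complete linkage) with two different indices over k = 2 to 6: the average silhouette width, via `silhouette()` from the {cluster} package, and the Calinski-Harabasz index, computed by a small hand-written helper (`calinski_harabasz()` is my own illustrative function, not a package API). I'm not claiming what either index picks for iris; the point is that each one "votes" independently and the votes are not guaranteed to match.

```r
library(cluster)  # provides silhouette(); a recommended package shipped with R

x  <- as.matrix(iris[, 1:4])
d  <- dist(x, method = "euclidean")
hc <- hclust(d, method = "complete")
ks <- 2:6

# Hypothetical helper: Calinski-Harabasz index, i.e. between-cluster dispersion
# over within-cluster dispersion, each scaled by its degrees of freedom.
# Higher values indicate better-separated, more compact clusters.
calinski_harabasz <- function(x, cl) {
  n <- nrow(x)
  k <- length(unique(cl))
  grand <- colMeans(x)
  wss <- 0
  bss <- 0
  for (g in unique(cl)) {
    xg  <- x[cl == g, , drop = FALSE]
    cg  <- colMeans(xg)
    wss <- wss + sum(sweep(xg, 2, cg)^2)          # spread around cluster centre
    bss <- bss + nrow(xg) * sum((cg - grand)^2)   # spread of centres around grand mean
  }
  (bss / (k - 1)) / (wss / (n - k))
}

# One row per k, one column per index
scores <- t(sapply(ks, function(k) {
  cl <- cutree(hc, k = k)
  c(k = k,
    avg_silhouette    = mean(silhouette(cl, d)[, "sil_width"]),
    calinski_harabasz = calinski_harabasz(x, cl))
}))
print(scores)

# Each index proposes the k that maximises it; these need not agree
best_k <- c(
  silhouette        = ks[which.max(scores[, "avg_silhouette"])],
  calinski_harabasz = ks[which.max(scores[, "calinski_harabasz"])]
)
print(best_k)
```

Swapping `method = "euclidean"` for, say, `"manhattan"` in `dist()` is an easy way to see how sensitive these votes are to the distance metric as well.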

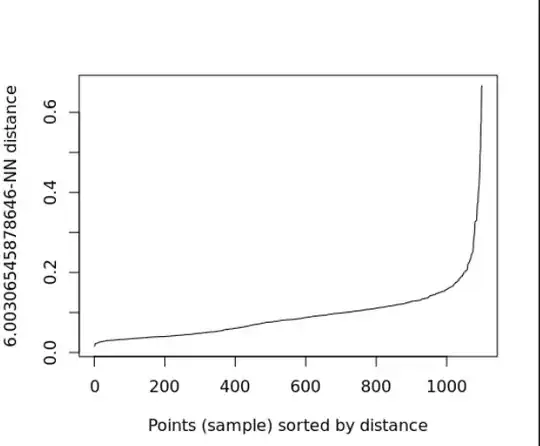

All that said, one approach is to run many methods and see whether they reach a consensus on the 'optimal' value of k. Below is an example of how to do this in R with the classic iris dataset. Here I'm using the {NbClust} package, which can compute up to 30 different indices and summarizes the number of clusters proposed by each (along with some diagnostic plots, which I've clipped for brevity). This can be a useful starting place.

```r
library(NbClust)

# Cluster the four numeric measurement columns with complete-linkage
# hierarchical clustering on Euclidean distances, and let NbClust score
# each candidate k in its default range with its default set of indices
iris_k <- NbClust(data = iris[, 1:4],
                  distance = "euclidean",
                  method = "complete")
#> *******************************************************************
#> * Among all indices:
#> * 2 proposed 2 as the best number of clusters
#> * 13 proposed 3 as the best number of clusters
#> * 5 proposed 4 as the best number of clusters
#> * 1 proposed 6 as the best number of clusters
#> * 2 proposed 15 as the best number of clusters
#>
#> ***** Conclusion *****
#>
#> * According to the majority rule, the best number of clusters is 3
#>
#>
#> *******************************************************************
```

Created on 2021-12-30 by the reprex package (v2.0.1)
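The majority vote is where the human review comes back in. As a rough sketch using only base R, you can cut a complete-linkage tree at the proposed k = 3 and cross-tabulate the clusters against the known species labels, which is exactly the kind of prior knowledge that can support or undermine a proposed k. This assumes NbClust's `method = "complete"` corresponds to `stats::hclust()` with complete linkage on `dist()`, which is my understanding but worth checking against `iris_k$Best.partition`. The cluster sizes at neighbouring k values give a quick sense of how much the solution changes around the proposed value.

```r
# Rebuild the same kind of tree: Euclidean distances, complete linkage
d  <- dist(iris[, 1:4], method = "euclidean")
hc <- hclust(d, method = "complete")

# Cut at the majority-rule k and compare with the species labels
cl  <- cutree(hc, k = 3)
tab <- table(cluster = cl, species = iris$Species)
print(tab)

# Cluster sizes for nearby values of k, to see how the partition shifts
neighbours <- lapply(setNames(2:4, paste0("k=", 2:4)),
                     function(k) table(cutree(hc, k = k)))
print(neighbours)
```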