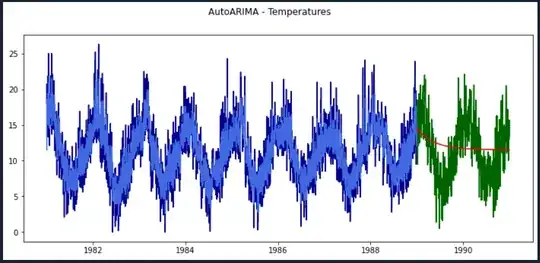

I am implementing a pmdarima AutoARIMA estimation exercise for learning purposes. At a glance, the selected model fits the training data quite well but performs poorly on the test data. The dataset consists of daily temperatures from 1981-01-01 to 1990-12-31 and shows strong cyclical behaviour. The best model found by pmdarima's AutoARIMA is ARIMA(1,0,3)(0,0,0)[0].
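For reference, a non-seasonal ARIMA(1,0,3) is an ARMA(1,3) on the undifferenced series. Its multi-step forecasts are built recursively: unknown future shocks are replaced by their expectation (zero), so the MA terms stop contributing after three steps and the forecast then decays geometrically toward the mean at rate φ. A minimal plain-Python sketch, assuming known coefficients written in mean form (the function `arma13_forecast` and all example values are hypothetical, not taken from the fitted model):

```python
def arma13_forecast(mu, phi, thetas, last_y, last_eps, h):
    """Recursive h-step forecast for a stationary ARMA(1,3) in mean form:
    (y_t - mu) = phi*(y_{t-1} - mu) + e_t + th1*e_{t-1} + th2*e_{t-2} + th3*e_{t-3}

    thetas:   [th1, th2, th3]
    last_y:   the final observed value y_T
    last_eps: residuals [e_T, e_{T-1}, e_{T-2}] from the fitted model
    """
    prev = last_y
    out = []
    for step in range(1, h + 1):
        # MA term th_j * e_{T+step-j}: only known when j >= step,
        # i.e. it is the residual e_{T-k} with k = j - step.
        # Future shocks (k < 0) have expectation zero and are dropped.
        ma = 0.0
        for j, theta in enumerate(thetas, start=1):
            k = j - step
            if 0 <= k < len(last_eps):
                ma += theta * last_eps[k]
        # AR part feeds on the previous *forecast*, not on an observation
        pred = mu + phi * (prev - mu) + ma
        out.append(pred)
        prev = pred
    return out


# Pure AR(1) behaviour: each step halves the distance to the mean
print(arma13_forecast(10.0, 0.5, [0.0, 0.0, 0.0], 14.0, [0.0, 0.0, 0.0], 4))
# [12.0, 11.0, 10.5, 10.25]
```

This flattening toward the mean, rather than following the annual cycle, is what a model without seasonal terms would be expected to produce over a long horizon such as a two-year test set.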

I am not sure whether the model should perform better on the test data, or whether the results simply cannot be improved further for this dataset.

My question is: why does the same model perform so differently on the training and test data, even though the two sets have similar properties?
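One possible explanation (an assumption, not verified on this dataset): `predict_in_sample()` returns one-step-ahead predictions by default (`dynamic=False`), each of which uses the actual previous observations, while `predict(len(y_test))` produces a recursive multi-step forecast that never sees the test values. The numpy sketch below illustrates that difference on synthetic data: a hypothetical annual sine cycle plus AR(1) noise stands in for the temperatures, and a plain AR(1) fitted by least squares stands in for the pmdarima model.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for ~10 years of daily temperatures:
# an annual cycle plus AR(1) noise
n = 3650
t = np.arange(n)
eps = rng.normal(0.0, 1.0, n)
noise = np.zeros(n)
for i in range(1, n):
    noise[i] = 0.7 * noise[i - 1] + eps[i]
y = 11.0 + 4.0 * np.sin(2 * np.pi * t / 365.25) + noise

# Chronological 80/20 split
split = int(0.8 * n)
train, test = y[:split], y[split:]

# Fit y_t = c + phi * y_{t-1} by ordinary least squares on the training data
X = np.column_stack([np.ones(split - 1), train[:-1]])
c, phi = np.linalg.lstsq(X, train[1:], rcond=None)[0]

# One-step-ahead: every prediction uses the actual previous observation
prev = np.concatenate([[train[-1]], test[:-1]])
one_step = c + phi * prev

# Recursive multi-step: every prediction feeds on the previous prediction
multi_step = np.empty(len(test))
last = train[-1]
for h in range(len(test)):
    last = c + phi * last
    multi_step[h] = last

mae_one = np.mean(np.abs(test - one_step))
mae_multi = np.mean(np.abs(test - multi_step))
print(f"one-step MAE:   {mae_one:.2f}")
print(f"multi-step MAE: {mae_multi:.2f}")
```

With this setup the one-step error should come out clearly smaller, because the recursive forecast flattens toward the training mean while the test data keeps cycling. In pmdarima, a comparable one-step evaluation could presumably be done by calling the model's `update()` with each new test observation before predicting the next step.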

Thank you very much.

```python
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
import pmdarima as pm
from sktime.forecasting.model_selection import temporal_train_test_split

from data_preparation import data_preparation  # author's own helper module

# Load the daily temperature series (assumed to be a pd.Series indexed by date)
y = data_preparation('D:/Stat/dataset/temperatures.csv', 'Date', 'Temp', 'D')

# Chronological 80/20 split (no shuffling)
y_train, y_test = temporal_train_test_split(y, train_size=0.8)

# Stepwise order search; with the default m=1 no seasonal terms are considered
forecaster = pm.auto_arima(y_train, trace=True)

# In-sample predictions and an out-of-sample forecast over the whole test period
fitted = forecaster.predict_in_sample()
forecast = forecaster.predict(n_periods=len(y_test))

# Re-use the original indexes so both series line up with the observations
fitted = pd.Series(np.asarray(fitted), index=y_train.index)
forecast = pd.Series(np.asarray(forecast), index=y_test.index)

figure = plt.figure(figsize=(12, 5))
figure.suptitle("AutoARIMA - Temperatures")
new_plot_1 = figure.add_subplot(111)

new_plot_1.plot(y_train.index, y_train.values, 'darkblue', label='train')
new_plot_1.plot(y_test.index, y_test.values, 'darkgreen', label='test')
new_plot_1.plot(fitted.index, fitted.values, 'royalblue', label='in-sample fit')
new_plot_1.plot(forecast.index, forecast.values, 'red', label='forecast')
new_plot_1.legend()
plt.show()
```