I am trying to reproduce the results of CausalImpact's built-in model with a custom model fitted in bsts. I followed the package documentation exactly, but the results do not match at all.

I am fitting a simple local level model. The dataset is stopcount_trial, the response variable is stopcount, the pre-period is observations 1-79, and the post-period is observations 80-158.

First, I tried the CausalImpact package.

library(CausalImpact)

pre.period <- c(1, 79)

post.period <- c(80, 158)

impactpractice1 <- CausalImpact(stopcount_trial, pre.period, post.period, model.args = list(niter = 1000))

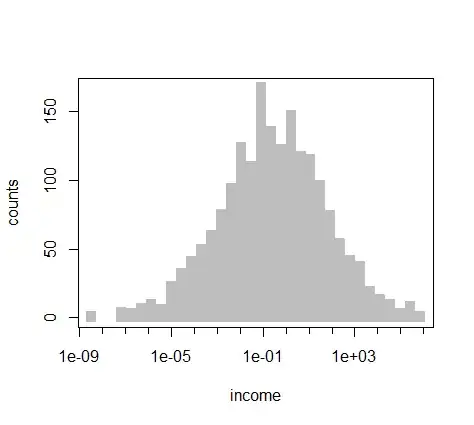

plot(impactpractice1)

Next, I fitted the model myself with the bsts package and passed it to CausalImpact, which should give the same result as above.

library(bsts)

post.period <- c(80, 158)

post.period.response <- stopcount_final$stopcount[post.period[1] : post.period[2]]

stopcount_final$stopcount[post.period[1] : post.period[2]] <- NA

ss <- AddLocalLevel(list(), stopcount_final$stopcount)

bsts.model <- bsts(stopcount_final$stopcount, ss, niter = 1000)

impact <- CausalImpact(bsts.model = bsts.model,
                       post.period.response = post.period.response)

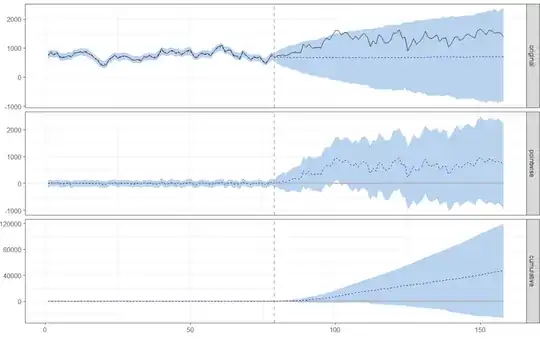

plot(impact)

The two sets of results are supposed to be identical, but they are not. What am I doing wrong here? Please help.
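To check the mismatch numerically rather than by eye, the two fits can be put side by side. The following is only a minimal sketch on simulated data: the series `y`, the seed, and the object names `impact.default`, `impact.custom`, and `comparison` are made up for illustration and are not the real stopcount data. It assumes the CausalImpact and bsts packages are installed and uses the same calls as above, plus the `summary` data frame stored in the object that `CausalImpact()` returns.

```r
library(CausalImpact)
library(bsts)

set.seed(1)
# Hypothetical stand-in for the stopcount series: 158 points of a noisy random walk.
y <- 100 + cumsum(rnorm(158, sd = 0.5)) + rnorm(158)

pre.period <- c(1, 79)
post.period <- c(80, 158)

# Approach 1: let CausalImpact construct and fit the model itself.
impact.default <- CausalImpact(y, pre.period, post.period,
                               model.args = list(niter = 1000))

# Approach 2: fit a local level model in bsts on a copy of the series
# whose post-period values are set to NA, then hand it to CausalImpact.
post.period.response <- y[post.period[1]:post.period[2]]
y.pre <- y
y.pre[post.period[1]:post.period[2]] <- NA
ss <- AddLocalLevel(list(), y.pre)
bsts.model <- bsts(y.pre, ss, niter = 1000, ping = 0)  # ping = 0 silences progress output
impact.custom <- CausalImpact(bsts.model = bsts.model,
                              post.period.response = post.period.response)

# Compare the post-period averages of the two fits.
cols <- c("Actual", "Pred", "AbsEffect")
comparison <- rbind(impact.default$summary["Average", cols],
                    impact.custom$summary["Average", cols])
rownames(comparison) <- c("default", "custom")
print(comparison)
```

The Actual column should agree between the two rows, since both fits see the same observed post-period data. If Pred and AbsEffect differ by more than Monte Carlo noise, the two calls are not fitting the same model, and any difference in how each one sets up priors or preprocesses the data would be the first place to look.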