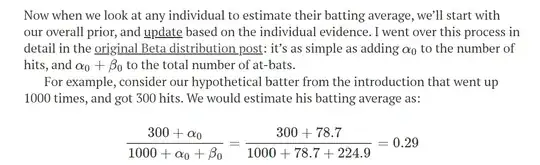

@drob showed a great example of adjusting batting averages using a beta prior distribution. He used a pre-computed Beta distribution as the prior to adjust each player's batting average individually; as he puts it,

> it’s as simple as adding α0 to the number of hits, and α0+β0 to the total number of at-bats
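For reference, the quoted rule is the posterior mean of a beta-binomial model, (hits + α0) / (at-bats + α0 + β0). A minimal R sketch of it follows; `eb_average()` is a hypothetical helper, and `alpha0 = 80`, `beta0 = 220` are made-up placeholder values, not the prior fitted in the original post.

```r
# Empirical Bayes shrinkage of a batting average toward a Beta(alpha0, beta0) prior.
# alpha0 and beta0 below are hypothetical placeholders, not drob's fitted prior.
eb_average <- function(hits, at_bats, alpha0, beta0) {
  # Posterior mean of Beta(alpha0 + hits, beta0 + at_bats - hits)
  (hits + alpha0) / (at_bats + alpha0 + beta0)
}

alpha0 <- 80   # hypothetical prior "hits"
beta0  <- 220  # hypothetical prior "outs"

eb_average(hits = 0, at_bats = 0, alpha0, beta0)       # no data: the prior mean, 80/300
eb_average(hits = 4, at_bats = 10, alpha0, beta0)      # raw 0.400 is pulled strongly toward the prior
eb_average(hits = 400, at_bats = 1000, alpha0, beta0)  # raw 0.400 is pulled much less
```

With no at-bats the estimate equals the prior mean α0/(α0+β0); as at-bats grow, the player's own record dominates.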

My question is:

- How can I do a similar adjustment for lognormal distributions, using the mean and variance? I have an `lm` model trained on lognormal data, and I would like to incorporate prior knowledge to weight/adjust my predictions (I cannot install Stan etc. due to sysadmin limitations).

Context

Let's say I built the following model, which estimates (log) income for different race groups in 2019.

In 2021 I no longer have the same data, but I do have some knowledge of the mean and standard deviation of income for each race group.
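If income is assumed to be lognormal, a known dollar-scale mean m and standard deviation s translate into log-scale parameters via σ² = ln(1 + s²/m²) and μ = ln(m) − σ²/2. A sketch in R, assuming the lognormal form actually holds; `lognormal_params()` and the input numbers are hypothetical. Because the model uses `log10()`, both parameters are divided by ln(10) to put them on the same scale.

```r
# Convert a known mean and SD of income (in dollars) into lognormal parameters,
# assuming income really is lognormally distributed.
lognormal_params <- function(mean_dollars, sd_dollars) {
  sigma2 <- log(1 + sd_dollars^2 / mean_dollars^2)  # variance of ln(income)
  mu     <- log(mean_dollars) - sigma2 / 2          # mean of ln(income)
  # The model uses log10(), so rescale from natural log: log10(x) = ln(x) / ln(10)
  c(mu_log10 = mu / log(10), sd_log10 = sqrt(sigma2) / log(10))
}

# Hypothetical 2021 prior knowledge for one race group (made-up numbers)
prior <- lognormal_params(mean_dollars = 90000, sd_dollars = 70000)
prior
```

Note that `sd_log10` describes the spread of individual incomes, not the uncertainty about the group's mean.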

If I have to stick with a basic adjustment of my 2019 predictions (similar to David Robinson's), I imagine I can simply regularise my previous predictions toward the new income distribution I know about, instead of collecting new data and building a new model.
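One possible lognormal analogue of the beta-binomial shortcut, assuming both the 2019 prediction and the 2021 prior are treated as normal on the log10 scale with known variances, is the conjugate normal-normal update: a precision-weighted average of the two. This is a sketch under those assumptions, not an established recipe for this data; `shrink_normal()` and all inputs except the fitted value 4.97 (from the reprex output) are hypothetical.

```r
# Shrink a 2019 prediction on the log10 scale toward a 2021 prior, treating both
# as normal on that scale (conjugate normal-normal update).
# Assumes est_se is the standard error of the 2019 prediction and prior_sd is the
# uncertainty about the group's MEAN log10 income (not the spread of individual
# incomes). Input values are hypothetical, apart from the 4.97 fitted value.
shrink_normal <- function(est, est_se, prior_mean, prior_sd) {
  w_est   <- 1 / est_se^2    # precision of the 2019 estimate
  w_prior <- 1 / prior_sd^2  # precision of the prior
  post_mean <- (w_est * est + w_prior * prior_mean) / (w_est + w_prior)
  post_sd   <- sqrt(1 / (w_est + w_prior))
  c(mean = post_mean, sd = post_sd)
}

post <- shrink_normal(est = 4.97, est_se = 0.02, prior_mean = 4.90, prior_sd = 0.05)
post
# Back-transforming gives the median (geometric mean) income, not the arithmetic mean
10^post[["mean"]]
```

As `est_se` shrinks, the result moves toward the 2019 estimate, much as more at-bats pull drob's estimate toward the raw average. For an `lm` fit, `predict()` with `se.fit = TRUE` is one way to obtain `est_se`.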

But how can I do this with a lognormal distribution, and how statistically sound is it?

```r
library(readr)
library(broom)

# Read in data
income_mean <- readr::read_csv('https://raw.githubusercontent.com/rfordatascience/tidytuesday/master/data/2021/2021-02-09/income_mean.csv')
# Log-transform income (base 10, not the natural log)
income_mean$log_income_dollars <- log10(income_mean$income_dollars)

# Model the data
lm1 <- lm(log_income_dollars ~ race + year, data = income_mean)
head(augment(lm1))
#> # A tibble: 6 x 9
#> log_income_doll~ race year .fitted .resid .hat .sigma .cooksd .std.resid
#> <dbl> <chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>
#> 1 4.18 All ~ 2019 4.97 -0.787 0.00256 0.482 7.63e-4 -1.64
#> 2 4.61 All ~ 2019 4.97 -0.363 0.00256 0.482 1.62e-4 -0.753
#> 3 4.84 All ~ 2019 4.97 -0.133 0.00256 0.482 2.18e-5 -0.277
#> 4 5.05 All ~ 2019 4.97 0.0741 0.00256 0.482 6.76e-6 0.154
#> 5 5.41 All ~ 2019 4.97 0.434 0.00256 0.482 2.32e-4 0.902
#> 6 5.65 All ~ 2019 4.97 0.683 0.00256 0.482 5.73e-4 1.42
```

Created on 2021-09-16 by the reprex package (v1.0.0)