I'm going to have a go at explaining why I think detecting a class imbalance problem is likely to be difficult because of the paucity of data when we actually do have a problem.

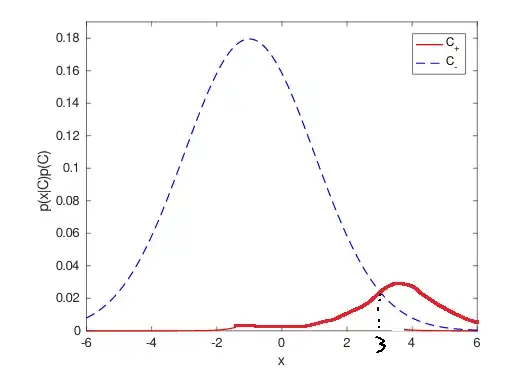

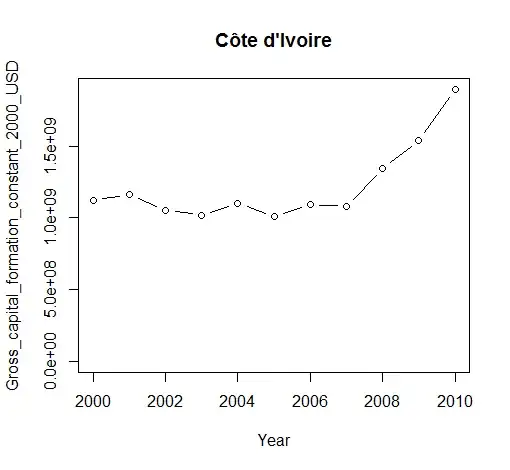

Consider a univariate normal pattern recognition task, with a 19:1 ratio of negative to positive examples (so that classifying everything as negative gives an accuracy of 95%), but where a decision boundary could be drawn giving an accuracy better than 95%. The ideal distributions and decision boundary are shown below:

The generalisation performance of the ideal classifier is as follows:

- TPR = 0.318385

- FNR = 0.681615

- TNR = 0.993286

- FPR = 0.006714

- ERR = 0.040459

- ACC = 0.959541

where TPR is the true positive rate, FNR is the false negative rate, TNR is the true negative rate, FPR is the false positive rate, ERR is the error rate and ACC = 1 - ERR is the accuracy.

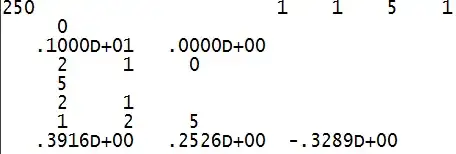

Assume the variances of both classes are know, so we only need to estimate the class means. Unfortunately, if we have to estimate the means from only a small sample of data, we might be unlucky and end up with a model where the decision boundary is so far from areas of high data density that we may as well classify everything as belonging to the majority negative class. This is an example of the class imbalance problem, because the uncertainty in estimating the parameters leads to a bias against the minority positive class. Here we have a model with 152 negative patterns and 8 positive patterns:

I didn't have to work to hard to be unlucky, this is only the 21st seed of the random number generator I tried. The training set statistics are:

- TPR = 0.00

- FNR = 1.00

- TNR = 1.00

- FPR = 0.00

- ERR = 0.05

- ACC = 0.95

Clearly this is not very good, it is no better than classifying everything as negative.

So lets see if we can detect this problem by having a validation set, again with 152 negative examples and 8 positive examples, in the same ratio as the training set:

- TPR = 0.00

- FNR = 1.00

- TNR = 1.00

- FPR = 0.00

- ERR = 0.05

- ACC = 0.95

Oh dear, the validations set suggests this is a case where no meaningful classification is possible. However, we know that is not true in this case, by construction. The problem is that, like the training set, it is only a small sample of data, and we have just been unlucky again. If we were to sample some more validation data, we might get a different result. However, if we could collect more data, we would use it for training the model and we would get better parameter estimates and the class imbalance problem would likely go away.

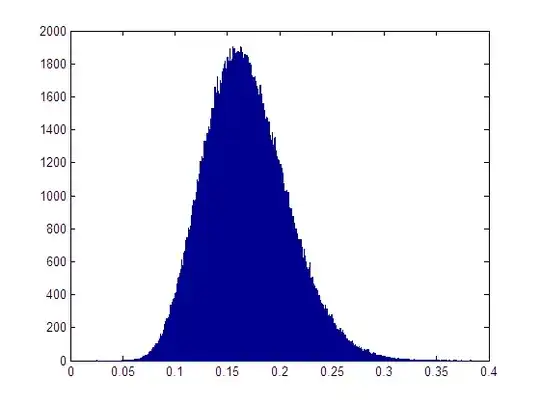

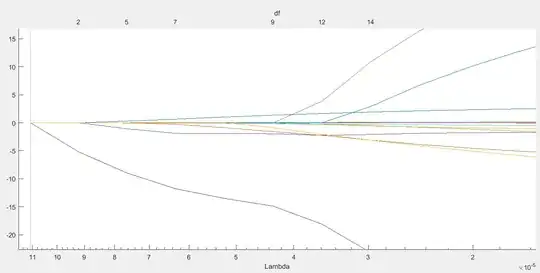

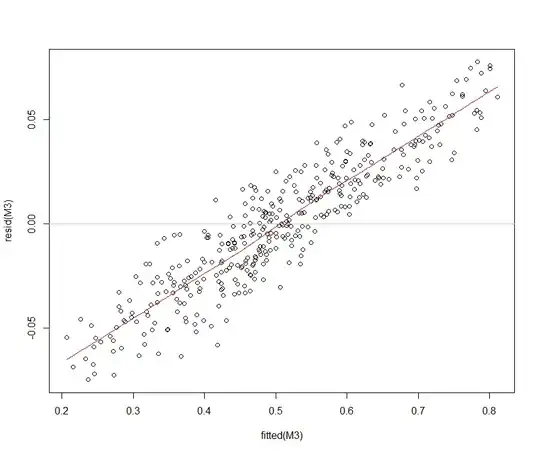

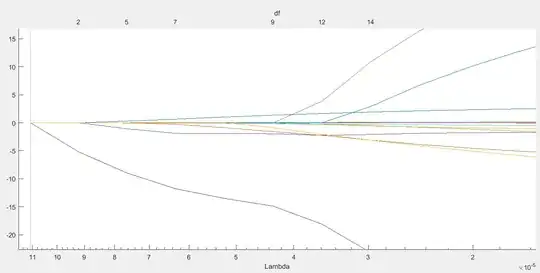

So my initial thought was to see if we could make a Bayesian test of whether it was plausible that there may be a non-trivial decision to be made, given the training data we actually have. If we choose an improper flat prior,our posterior distribution for the class means are Gaussian distributions, centered on the sample means, with standard deviations given by the standard errors of the means (in agreement with the frequentist confidence intervals). We can then perform a Monte Carlo simulation, of say 2^20 samples (as they can be collected so cheaply in this case and I like round numbers), and estimate the posterior distribution for the decision boundary.

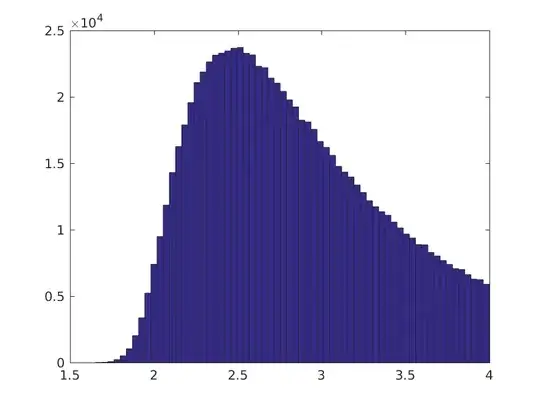

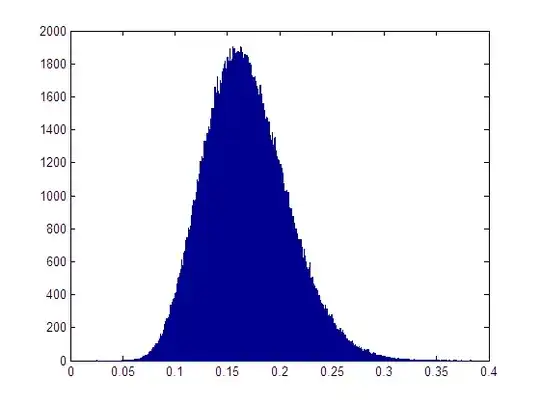

About 79% of the 2^20 samples gives a threshold that is in an area of high data density, the remaining 21% are so far to the right of both classes that essentially all patterns will be classified as negative. We can also look at the posterior distribution for the true positive rate:

This suggests that there is some chance of a meaningful classification. Let's make an arbitrary threshold at which we might consider a true positive rate as "meaningful" at 0.05. The proportion of Monte Carlo samples, for which the TPR >= 0.05 is about 22.7%, so in this case, we might diagnose the plausibility of a class imbalance problem.

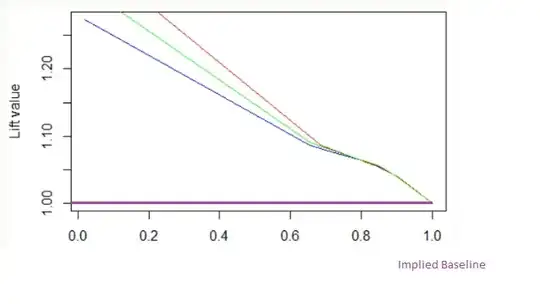

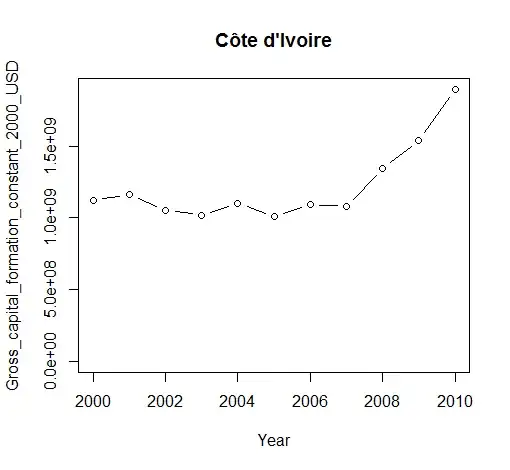

However, what happens if we try it again, but this time for a problem where classifying everything as negative is more or less optimal:

where the optimal model's generalisation performance is summarised by:

- TPR = 0.007254

- FNR = 0.992746

- TNR = 0.999714

- FPR = 0.000286

- ERR = 0.049909

- ACC = 0.950091

Again we have to estimate the class means from a small dataset with 152 negative examples and 8 positive examples, and again we are unlucky,

The training set performance is given by:

- TPR = 0.25

- FNR = 0.75

- TNR = 1.00

- FPR = 0.00

- ERR = 0.0375

- ACC = 0.9625

and the validation set performance by

- TPR = 0.125

- FNR = 0.875

- TNR = 1.000

- FPR = 0.000

- ERR = 0.04375

- ACC = 0.95625

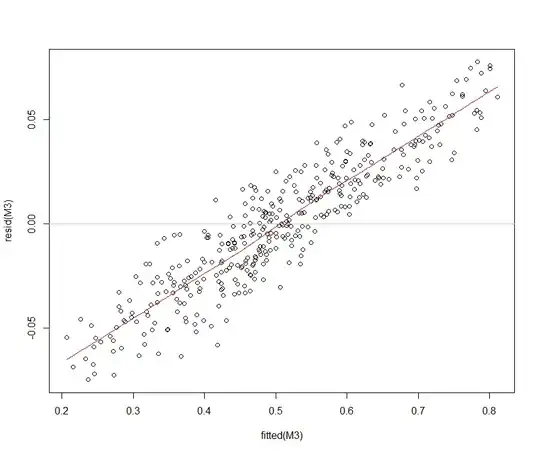

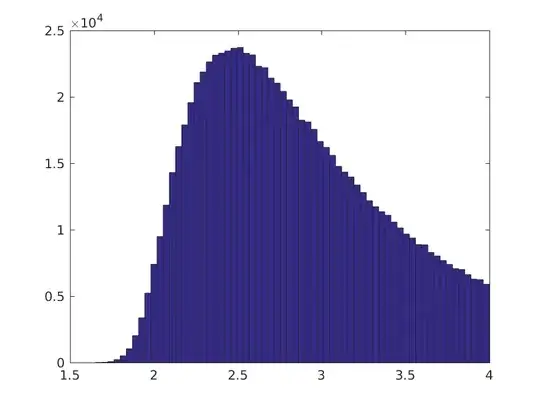

In this case, the Monte Carlo simulation is very confident that a meaningful classification is plausible

The proportion of Monte Carlo samples giving a TPR >= 0.05 is about 74.5%, when of course we know by construction that the optimal model assigns all patterns to the negative class.

This suggests the Bayesian analysis can suggest that a meaningful classification is plausible, even though we have a classifier that ostensibly classifies all patterns to the negative class. In that situation, we may want to think of doing something to alleviate the problem. However, such a test can't tell us when we should be classifying everything as negative.

Anyway, that was the sort of answer I was hoping for, but I'd much prefer something that actually worked in practice! ;o) I may well offer a second bounty if someone can provide something substantially better than this.