I have a load of time-depth data collected from some birds on a field trip.

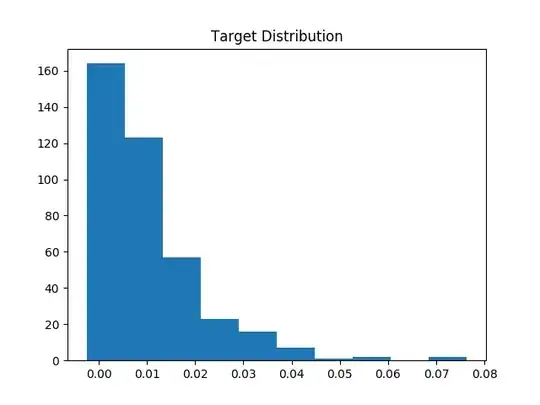

I am trying to classify diving behaviour by setting a depth threshold beyond which a dive can be said to have occurred. However, the background noise in the data varies within and between birds, which makes choosing a fixed threshold problematic: for one bird it will catch mostly dives, but for another it will catch everything (see image).

Is there a way to transform the data so as to 'flatten' this baseline of background noise to, say, 0, so that all spikes can be caught by depth values > 0?

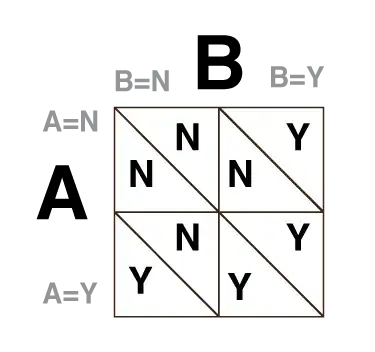

I know there are various clustering techniques that could fairly easily classify dive behaviour here. The problem is that I afterwards have to create a huge data set (for deep learning) from a rolling window of acceleration and depth values, with a binary value on the end indicating whether or not a dive has occurred within that window, and doing this with a variable threshold would drastically complicate things. (Ideally, I would like to get to a point where I could just take the depth vector from a given window and run a line of code like `int((d_vec > threshold).any())` to determine whether any dives had occurred.)
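To make the labelling step concrete, here is a minimal R sketch of what I have in mind, assuming the depth series has already been flattened so that a single threshold works. The helper name `label_windows`, the window width, the step and the toy depth values are all made up for illustration.

```r
# Hypothetical helper: label each rolling window 1 if any depth value in it
# exceeds the threshold, 0 otherwise. Assumes length(depth) >= width.
label_windows <- function(depth, width, step = 1, threshold = 0) {
  starts <- seq(1, length(depth) - width + 1, by = step)
  vapply(starts, function(s) {
    as.integer(any(depth[s:(s + width - 1)] > threshold))
  }, integer(1))
}

# Toy series: flat baseline at 0 with one short dive
depth <- c(0, 0, 0, 2.5, 3.1, 0, 0, 0, 0, 0)
labels <- label_windows(depth, width = 3, step = 1)
print(labels)
# 0 1 1 1 1 0 0 0
```

With a flat baseline, the same `threshold` can be reused for every bird, which is the whole point of the transformation I am asking about.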

UPDATE:

I worked out a satisfactory solution: split the series into consecutive windows of ~30 values (non-overlapping, since `by=k` in the code below) and offset each window by its own median, which is taken to be the 'baseline'. This nicely 'smoothed' my plots while preserving the shape of each dive, but I am still all ears for better solutions (or improvements to my code below)...

    cat("\rTransforming data...")

    k = 30
    n = length(ts_data_d$Depth)

    # take the median of each consecutive block of k values as its baseline
    offset = zoo::rollapply(ts_data_d$Depth, width=k, by=k, FUN=median)
    offset = rep(offset, each=k)

    # match lengths: pad the trailing partial block with the last baseline
    dif = n - length(offset)
    offset = c(offset, rep(tail(offset, 1), dif))

    # zero-offset data
    new_series = ts_data_d$Depth - offset
    new_series[new_series<0] = 0 # negative depth meaningless

    # last dif values: no dives as device removed
    # (n-dif+1 rather than n-dif, otherwise dif+1 values are zeroed)
    if (dif > 0) new_series[(n-dif+1):n] = 0

    ts_data_d$Depth_mod = new_series
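One possible variation, offered as a sketch rather than a tested replacement: use a centred running median from base R's `stats::runmed()` instead of block medians, so that the baseline follows drift sample by sample and there is no length mismatch to patch up. The function name `flatten_baseline`, the width `k = 31` and the synthetic data are assumptions for illustration; `runmed()` needs an odd window width.

```r
# Sketch: subtract a centred running median (the assumed baseline) from depth.
flatten_baseline <- function(depth, k = 31) {
  stopifnot(k %% 2 == 1)               # runmed() requires an odd window width
  baseline <- stats::runmed(depth, k, endrule = "median")
  pmax(depth - baseline, 0)            # negative depth is meaningless, clamp to 0
}

# Synthetic example: slowly drifting baseline plus noise, with one short "dive"
set.seed(1)
depth <- seq(1, 3, length.out = 200) + rnorm(200, sd = 0.05)
depth[100:105] <- depth[100:105] + 10
flat <- flatten_baseline(depth)
```

The window has to be comfortably more than twice as long as the longest dive, otherwise the dive itself pulls the median up and gets flattened along with the noise.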