Let's find out.

To begin with, what happens with balanced datasets?

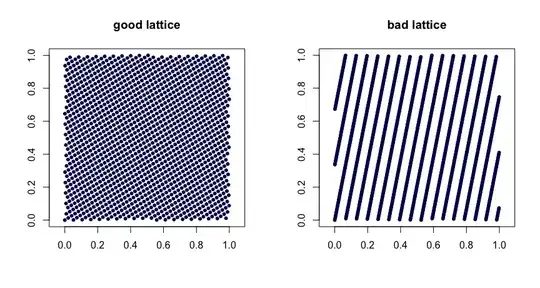

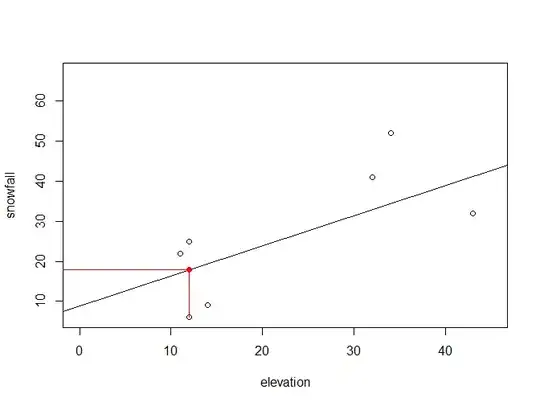

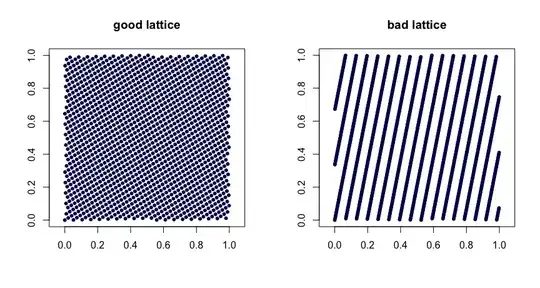

Here is a scatterplot of a dataset of $200$ observations, of which $50\%$ are zeros and the remainder are ones. On it I have graphed the underlying ("true") probabilities and the probabilities as fit with logistic regression. The two graphs agree closely, indicating logistic regression did a good job in this case.

To understand it better, I kept the same $x$ values but regenerated the $y$ values randomly $500$ times. Each fit yielded its estimate of the intercept and slope ($\hat\beta$) in this logistic regression. Here is a scatterplot of those estimates.

The central red triangle plots the true coefficients $(0, -3).$ The ellipses are the second order approximations to this point cloud: one is intended to enclose about half the points and the other is intended to enclose about 95% of the points. That they do so indicates they give a solid indication of how uncertain any given estimate of such a dataset might be: the intercept could be off by about $\pm 0.45$ (the width of the outer ellipse) and the slope could be off by about $\pm 1$ (the height of the outer ellipse). These are margins of error.

What happens with imbalanced datasets?

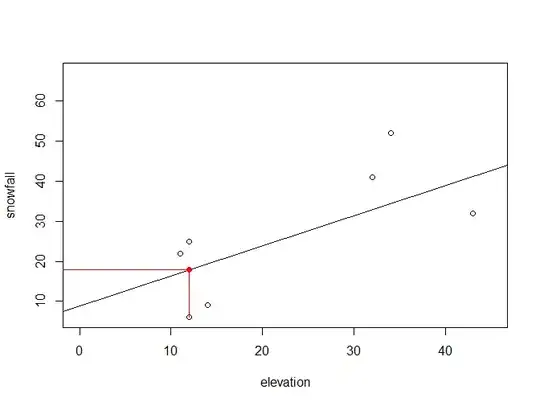

Here's a similar setup but with only $5\%$ of the points in one class (give or take a few points, depending on the randomness involved in making these observations):

($5\%$ is truly small: it tells us to expect to see only $10$ or so values in one class with the other $190$ in the other class.)

The fit now visibly departs from the true graph -- but is this evidence of logistic regression failing to be "robust"? Again, we can find out by repeating the process of generating random data and estimating the fit many times. Here is the scatterplot of $500$ estimates.

By and large the estimates stay near the true value near $(-4,-3).$ In this sense, logistic regression looks "robust." (I kept the same slope of $-3$ as before and adjusted the intercept to reduce the rate of of the $+1$ observations.)

The margins of error have changed : the semi-axis of the outer ellipse that (sort of) describes the uncertainty in the intercept has grown from $0.45$ to over $4$ while the other semi-axis has shrunk a little from $1$ to about $0.8;$ and the whole picture has tilted.

The ellipses no longer describe the point cloud quite as well as before: now, there is some tendency for logistic regression to estimate extremely negative slopes and intercepts. The tilting indicates noticeable correlation among the estimates: low (negative) intercepts tend to be associated with low negative slopes (which compensate for the small intercepts by predicting some $1$ values near $x=-1.$) But such correlation is to be expected: this looks just like ordinary least squares regression whenever the point of averages of the data is not close to the vertical axis.

What do these experiments show?

For datasets this size (or larger), at least:

Logistic regression tends to work well and give values reasonably close to the correct parameters even when the outcomes are imbalanced.

Second-order descriptions of the correlation between the parameter estimates (which are routine outputs of logistic regression) don't quite capture the possibility that the estimates could simultaneously be quite far away from the truth.

A meta-conclusion

You can assess the "robustness" (or, more generally, the salient statistical properties) of any procedure, such as logistic regression, by running it repeatedly on data generated according to a known realistic model and tracking the outputs that are important to you.

This is the R code that produced the figures. For the first two figures, the first line was altered to p <- 50/100. Remove the set.seed call to generate additional random examples.

Experimenting with simulations like this (extended to more explanatory variables) might persuade you of the utility of a standard rule of thumb:

Let the number of observations in the smaller class guide the complexity of the model.

Whereas in ordinary least squares regression we might be comfortable having ten observations (total) for each explanatory variable, for logistic regression we will want to have ten observations in the smaller class for each explanatory variable.

p <- 5/100 # Proportion of one class

n <- 200 # Dataset size

x <- seq(-1, 1, length.out=n) # Explanatory variable

beta <- -3 # Slope

#

# Find an intercept that yields `p` as the true proportion for these `x`.

#

logistic <- function(z) 1 - 1/(1 + exp(z))

alpha <- uniroot(function(a) mean(logistic(a + beta*x)) - p, c(-5,5))$root

#

# Create and plot a dataset with an expected value of `p`.

#

set.seed(17)

y <- rbinom(n, 1, logistic(alpha + beta*x))

plot(range(x), c(-0.015, 1.015), type="n", bty="n", xlab="x", ylab="y",

main="Data with True (Solid) and Fitted (Dashed) Probabilities")

curve(logistic(alpha + beta*x), add=TRUE, col="Gray", lwd=2)

rug(x[y==0], side=1, col="Red")

rug(x[y==1], side=3, col="Red")

points(x, y, pch=21, bg="#00000020")

#

# Fit a logistic model.

#

X <- data.frame(x=x, y=y)

fit <- glm(y ~ x, data=X, family="binomial")

summary(fit)

#

# Sketch the fit.

#

b <- coefficients(fit)

curve(logistic(b[1] + b[2]*x), add=TRUE, col="Black", lty=3, lwd=2)

#

# Evaluate the robustness of the fit.

#

sim <- replicate(500, {

X$y.new <- with(X, rbinom(n, 1, logistic(alpha + beta*x)))

coefficients(glm(y.new ~ x, data=X, family="binomial"))

})

plot(t(sim), main="Estimated Coefficients", ylab="")

mtext(expression(hat(beta)), side=2, line=2.5, las=2, cex=1.25)

points(alpha, beta, pch=24, bg="#ff0000c0", cex=1.6)

#

# Plot second moment ellipses.

#

V <- cov(t(sim))

obj <- eigen(V)

a <- seq(0, 2*pi, length.out=361)

for (level in c(.50, .95)) {

lambda <- sqrt(obj$values) * sqrt(qchisq(level, 2))

st <- obj$vectors %*% (rbind(cos(a), sin(a)) * lambda) + c(alpha, beta)

polygon(st[1,], st[2,], col="#ffff0010")

}