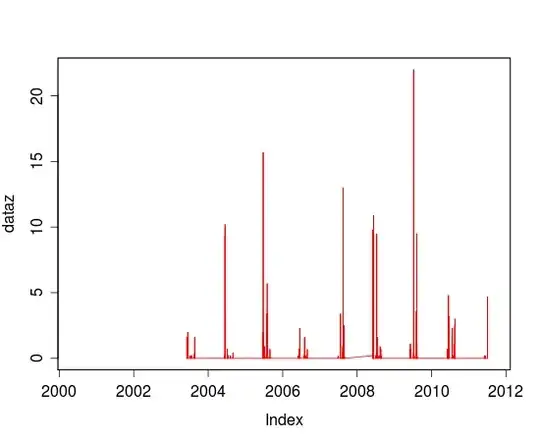

My data:

My model:

mod <- glm(Y2/Y1 ~ Var_1, data = df, family = binomial, weights = Y1)

summary(mod) shows that my response variable declines significantly as Var_1 increases. However, in order to assess model fit, I conduct (1) cross-validation, and (2) calculate the Mean Standard Error (MSE).

# CV boot::cv.glm(df, mod, K=8) # MSE actual = df$Var_1 pred = predict(mod) MSE = mean((actual - pred)^2) MSE

The delta values obtained from cv.glm = 0.004935280 & 0.004797322 The calculated MSE is 3012.686.

I was under the impression that a good model fit is indicated by low delta values and a low MSE value. So what am I doing wrong for these two methods to provide such different results?

Are there any other procedures I could do to assess how reliable my model is, given the very small dataset?