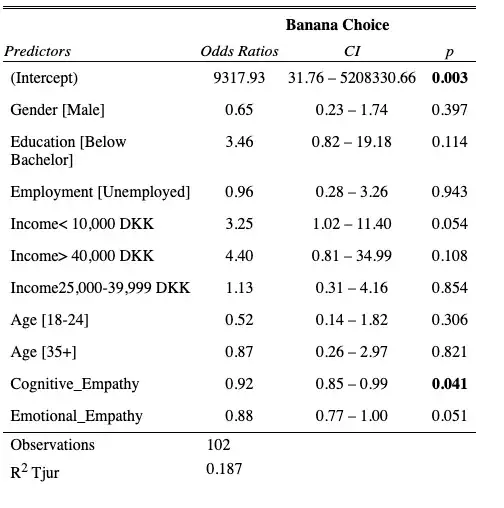

I'm trying to fit a multiple logistic regression model, and here are the results with covariates and with covariates only:

As you can see, the odds ratios (OR) and their confidence intervals (CI) span a very wide range. I don't understand how this happens. Does anyone know how to fix it?

abs(new.data$Cognitive_Empathy)

[1] 54 46 54 58 64 57 49 53 57 62 57 44 60 61 51 64 58 61 58 51 53 62 60 56 54 51 58 53 52 49 53 55 66 52 55 46 54 48

[39] 58 59 60 57 59 62 46 49 59 63 56 55 55 48 57 53 68 58 49 57 69 62 50 40 59 63 52 60 59 44 53 61 62 59 57 66 60 65

[77] 57 55 58 67 50 63 60 52 71 51 56 63 52 69 57 56 56 67 48 66 61 46 52 57 53 66

And here are the choices:

[1] Non-organic Non-organic Organic Non-organic Organic Organic Non-organic Non-organic Non-organic

[10] Non-organic Non-organic Organic Organic Non-organic Organic Non-organic Organic Non-organic

[19] Non-organic Organic Organic Organic Organic Organic Organic Organic Organic

[28] Organic Organic Organic Organic Organic Non-organic Non-organic Organic Organic

[37] Organic Organic Non-organic Non-organic Organic Organic Organic Non-organic Organic

[46] Non-organic Organic Non-organic Organic Organic Organic Non-organic Non-organic Organic

[55] Non-organic Organic Organic Non-organic Organic Organic Organic Organic Organic

[64] Non-organic Organic Non-organic Non-organic Organic Organic Organic Organic Organic

[73] Non-organic Organic Non-organic Organic Organic Organic Non-organic Non-organic Non-organic

[82] Non-organic Organic Organic Non-organic Organic Organic Non-organic Organic Organic

[91] Non-organic Non-organic Organic Organic Organic Organic Non-organic Organic Organic

[100] Non-organic Organic Non-organic
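To make the question easier to reproduce, the two printed vectors can be entered roughly as below. This is only a sketch under some assumptions: the names `dat`, `Choice`, and `choice_codes` are made up for illustration (the original column name for the choices is not shown), the choices are abbreviated to `N`/`O` to keep the block short, and the model uses only the single predictor printed here, not the other covariates from the full model. The odds ratio it reports is per one-point increase in `Cognitive_Empathy`, with a Wald 95% interval.

```r
# Predictor values copied from the printed output above (102 observations).
cognitive_empathy <- c(
  54, 46, 54, 58, 64, 57, 49, 53, 57, 62, 57, 44, 60, 61, 51, 64, 58, 61, 58,
  51, 53, 62, 60, 56, 54, 51, 58, 53, 52, 49, 53, 55, 66, 52, 55, 46, 54, 48,
  58, 59, 60, 57, 59, 62, 46, 49, 59, 63, 56, 55, 55, 48, 57, 53, 68, 58, 49,
  57, 69, 62, 50, 40, 59, 63, 52, 60, 59, 44, 53, 61, 62, 59, 57, 66, 60, 65,
  57, 55, 58, 67, 50, 63, 60, 52, 71, 51, 56, 63, 52, 69, 57, 56, 56, 67, 48,
  66, 61, 46, 52, 57, 53, 66
)

# Choices copied from the printed output above, one string per printed row.
# Abbreviation (an assumption for brevity): N = Non-organic, O = Organic.
choice_codes <- paste0(
  "NNONOONNN", "NNOONONON", "NOOOOOOOO", "OOOOONNOO",
  "OONNOOONO", "NONOOONNO", "NOONOOOOO", "NONNOOOOO",
  "NONOOONNN", "NOONOONOO", "NNOOOONOO", "NON"
)
choice <- factor(
  ifelse(strsplit(choice_codes, "")[[1]] == "O", "Organic", "Non-organic"),
  levels = c("Non-organic", "Organic")  # first level is the reference outcome
)

# Hypothetical data frame; the real `new.data` has more columns.
dat <- data.frame(Cognitive_Empathy = cognitive_empathy, Choice = choice)

# Single-predictor logistic regression for P(Choice == "Organic").
fit <- glm(Choice ~ Cognitive_Empathy, data = dat, family = binomial)

# Odds ratios with Wald 95% confidence intervals on the OR scale.
or_table <- exp(cbind(OR = coef(fit), confint.default(fit)))
print(or_table)
```

The intercept row of `or_table` is the odds at a score of 0, which is far outside the observed range of 40 to 71, so it is usually not worth interpreting on its own.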