I am fitting a logistic regression with the number of letters of a word given (0-5) in a task as the IV and whether the corresponding word was recalled in a later test (0/1) as the DV. All words in the dataset are five-letter words.

I want to check the assumption that my continuous predictor ltrsProvided has a linear association with the logit of the outcome. This is usually investigated with the Box-Tidwell test.
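For reference, the interaction-term version of the check can be written out for a single predictor $x$ (here ltrsProvided). The $x \ln x$ term comes from a first-order Taylor expansion of the Box-Tidwell power transformation $x^\lambda$ around $\lambda = 1$, which is why the predictor has to be strictly positive:

$$x^\lambda \approx x + (\lambda - 1)\, x \ln x$$

$$\operatorname{logit}(p_i) = \ln\frac{p_i}{1-p_i} = \beta_0 + \beta_1 x_i + \beta_2\, x_i \ln x_i, \qquad H_0\colon \beta_2 = 0$$

Rejecting $H_0$ suggests that a power other than $\lambda = 1$ fits better, i.e. that the logit is not linear in $x$.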

There are multiple ways to do this in R; here are two:

- Fitting a logistic regression with an added interaction term between the IV and the ln of the IV:

```r
lessR::Logit(correctAnswer ~ ltrsProvided + ltrsProvided:log(ltrsProvided), data = myData)
```

In this case, a significant interaction term (p < .05) indicates non-linearity.

- Using a dedicated function from the car package:

```r
car::boxTidwell(rec_correct ~ ltrsProvided, data = myData)
```
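A minimal base-R sketch of the interaction-term check, assuming a strictly positive predictor (the simulated `x` below is hypothetical and ranges from 1 to 5). It uses `glm()` instead of `lessR::Logit()`; `I(x * log(x))` is the same product column that `ltrsProvided:log(ltrsProvided)` creates. As far as I know, `car::boxTidwell()` is documented for linear (least-squares) models, so a `glm()` fit is the variant that matches a logistic model.

```r
# Sketch: Box-Tidwell-style linearity check for a logistic model, base R only.
# Simulated, hypothetical data; x is strictly positive, so log(x) is defined.
set.seed(1)
n <- 500
x <- sample(1:5, n, replace = TRUE)
y <- rbinom(n, 1, plogis(1.5 - 0.6 * x))   # linear in the logit by construction

# Augmented model: the x * log(x) column is the Box-Tidwell term
fit_bt <- glm(y ~ x + I(x * log(x)), family = binomial)

# Wald p-value of the Box-Tidwell term; a small value suggests non-linearity in the logit
p_bt <- summary(fit_bt)$coefficients["I(x * log(x))", "Pr(>|z|)"]
p_bt
```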

However, neither method works with my data because it includes zeros, for which the ln is undefined.
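A quick illustration of why the zero level breaks both calls: in R, `log(0)` is `-Inf`, and the Box-Tidwell product `0 * log(0)` is `NaN`.

```r
# The levels of ltrsProvided
x <- c(0, 1, 2, 3, 4, 5)

log(x)       # first element is -Inf (the zero level)
x * log(x)   # first element is NaN, because 0 * -Inf is undefined
```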

There are some questions on the network about the Box-Tidwell test, but none of the answered ones address my problem specifically. Some resources mention transforming the IV (only for the Box-Tidwell test, as the regression itself works fine with 0s in the data!), but what kind of transformation is meant? Adding 0.0001 to each level of ltrsProvided makes the ln computable but seems odd, as the variable cannot realistically take values such as 1.0001. Others suggest dropping the zeros, which is impossible for me because 0 is an important level of my IV.
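To illustrate the shifted-variable idea from those resources, here is a sketch with a hypothetical helper `box_tidwell_p_shifted()` that adds a constant to the predictor before building the x ln x term. The data are simulated, and both shifts (0.0001 and 1) are arbitrary illustrations, not recommendations. Because (x + c) ln(x + c) has a different shape for each c, the p-value generally depends on the shift chosen.

```r
# Hypothetical helper: Box-Tidwell term after shifting the predictor by a constant.
# The shift is an arbitrary analyst choice, not a property of the data.
box_tidwell_p_shifted <- function(x, y, shift) {
  xs <- x + shift                                  # strictly positive after the shift
  fit <- glm(y ~ xs + I(xs * log(xs)), family = binomial)
  summary(fit)$coefficients["I(xs * log(xs))", "Pr(>|z|)"]
}

set.seed(2)
x <- sample(0:5, 600, replace = TRUE)             # simulated predictor including zeros
y <- rbinom(600, 1, plogis(1 - 0.5 * x))          # simulated outcome, linear in the logit

p_small <- box_tidwell_p_shifted(x, y, shift = 1e-4)
p_one   <- box_tidwell_p_shifted(x, y, shift = 1)
c(p_small, p_one)
```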

Splines are frequently suggested for similar (but not identical) questions, but I don't think they are appropriate in my case (only six levels), and I have no reason to suspect that the relationship is non-linear.
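Because ltrsProvided has only six levels, one commonly used alternative (a different technique from Box-Tidwell) is a likelihood-ratio test that compares the linear-in-the-logit model with a model treating the predictor as a factor. A sketch on simulated, hypothetical data:

```r
# Alternative check (not Box-Tidwell): linear-in-the-logit vs. one estimate per level.
set.seed(3)
ltrs <- sample(0:5, 600, replace = TRUE)
recalled <- rbinom(600, 1, plogis(1 - 0.5 * ltrs))

fit_linear <- glm(recalled ~ ltrs, family = binomial)          # 2 parameters
fit_factor <- glm(recalled ~ factor(ltrs), family = binomial)  # 6 parameters, one per level

# Likelihood-ratio test with 6 - 2 = 4 df; a small p-value suggests departure from linearity
lrt <- anova(fit_linear, fit_factor, test = "Chisq")
lrt
```

The factor model makes no shape assumption about the predictor, and with only six levels it costs just four extra parameters.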

tl;dr: How can I temporarily transform my continuous IV to conduct a Box-Tidwell test?

Example of a reduced version of my data:

| ltrsProvided | correctAnswer |
|---|---|
| 1 | 1 |
| 2 | 1 |
| 3 | 1 |
| 4 | 0 |
| 0 | 1 |
| 1 | 1 |
| 5 | 0 |
| 2 | 1 |
| 0 | 1 |
| 4 | 0 |
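The reduced example data above can be entered in R as follows. The per-level recall proportions show that in this ten-row subset every word with 0-3 letters given was recalled and none with 4-5 letters was, so a logistic fit on these rows alone would run into complete separation.

```r
# The reduced example data
example_d <- data.frame(
  ltrsProvided  = c(1, 2, 3, 4, 0, 1, 5, 2, 0, 4),
  correctAnswer = c(1, 1, 1, 0, 1, 1, 0, 1, 1, 0)
)

# Proportion of words recalled at each level of ltrsProvided
prop_by_level <- tapply(example_d$correctAnswer, example_d$ltrsProvided, mean)
prop_by_level
```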

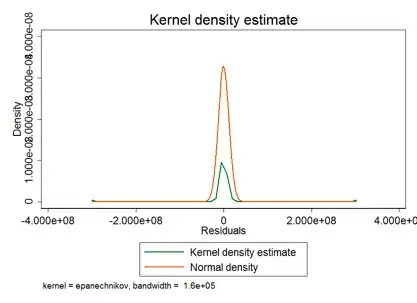

Code and plot in response to @Demetri Pananos's post and comment:

The relationship does not look linear at all...

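```r
library(dplyr); library(ggplot2)
# grouped_d comes from the referenced answer (assumed: one row per ltrs_gvn level)
vis_grouped_d <- grouped_d %>% mutate(prop = y/(n+y), prop_log = log(prop))  # prop_log = log of prop
ggplot(vis_grouped_d) + geom_line(aes(ltrs_gvn, prop_log))
```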
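Note that `prop_log` is `log(prop)`, the log of a proportion (always ≤ 0), whereas the linearity assumption concerns the logit, log(p/(1-p)). Below is a minimal sketch on simulated data. For each level it computes both quantities, plus the empirical logit with 0.5 added to the counts (a standard correction that keeps levels with 0% or 100% recall finite), and sets them next to the fitted logit from the linear model. The names `recalled` and `trials` are hypothetical, since the structure of `grouped_d` is not shown here.

```r
# Sketch: per-level empirical logits vs. the fitted linear logit (simulated data).
set.seed(4)
ltrs <- sample(0:5, 600, replace = TRUE)
recalled_word <- rbinom(600, 1, plogis(1 - 0.5 * ltrs))

recalled <- tapply(recalled_word, ltrs, sum)     # recalled words per level
trials   <- tapply(recalled_word, ltrs, length)  # words per level
levels_x <- as.numeric(names(recalled))

log_prop  <- log(recalled / trials)                             # log proportion, as plotted above
emp_logit <- log((recalled + 0.5) / (trials - recalled + 0.5))  # empirical logit

fit <- glm(recalled_word ~ ltrs, family = binomial)
fitted_logit <- predict(fit, newdata = data.frame(ltrs = levels_x))  # link (logit) scale by default

round(data.frame(ltrs = levels_x, log_prop = as.vector(log_prop),
                 emp_logit = as.vector(emp_logit),
                 fitted_logit = as.vector(fitted_logit)), 2)
```

If the linear model is adequate, `emp_logit` should scatter around `fitted_logit`; these columns can be plotted with ggplot2 in the same way as above.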

More information about the research design

It's basically an online replication of McCurdy et al. (2021). It's an experiment on the generation effect (content that you create yourself is remembered better than content you only read) and the generation constraint (the degree of self-generation might influence the memory benefit of generation). In the experiment, the subjects had to create and write down target words with the help of a cue and 0-5 letters of the target. In a later test, their target words and some distractors were presented, and they had to decide whether each word had been created earlier (recognition correct; 0/1). The ltrs_gvn levels 0-4 are essentially the manipulation of the generation constraint, while ltrs_gvn = 5 is the read control condition.

They used mixed-effects logistic regression with random intercepts for subjects and words (code and data are available online). I want to use ordinary logistic regression because there are so few resources on mixed models.