The underlying idea is that equality of moments does not survive nonlinear transformations. In particular, when two variables $X$ and $Y$ have the same moments (up to some finite order) and $f$ is a nonlinear transformation, there is no assurance that $f(X)$ and $f(Y)$ will have any of the same moments.

Thus, when the marginal distributions of a weakly stationary process have different shapes, it's possible--even likely--that any given function of that process will produce a process that is not weakly stationary, even when the moments of everything involved are finite.

Lest this seem like so much hand-waving, I will provide a rigorous example.

For any number $a\ge 1$ let $\mathcal{D}(a)$ be the distribution assigning probability $1/(2a^2)$ to each of the values $2\pm a$ and putting the remaining probability of $1-1/a^2$ on the value $2.$ Compute that this distribution has expectation

$$\frac{2-a}{2a^2} + \frac{2+a}{2a^2} + 2\left(1-\frac{1}{a^2}\right) = 2$$

and variance

$$\frac{a^2}{2a^2} + \frac{a^2}{2a^2} + 0\left(1 - \frac{1}{a^2}\right) = 1$$

and notice that when $a\lt 2,$ every value in the support of $\mathcal{D}(a)$ is positive. Thus, for $1\le a\lt 2,$ all these distributions are bounded, of positive support, with equal means $(2)$ and equal variances $(1).$
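As a quick check of the two computations above, here is a minimal sketch in Python that evaluates the mean and variance of $\mathcal{D}(a)$ in exact rational arithmetic. The function names (`atoms_of_d`, `mean_and_variance`) are hypothetical helpers introduced only for this illustration, and the chosen values of $a$ are arbitrary rationals in $[1,2).$

```python
from fractions import Fraction

def atoms_of_d(a):
    """Return (value, probability) pairs for D(a).

    Hypothetical helper: mass 1/(2a^2) at each of 2-a and 2+a,
    and the remaining mass 1 - 1/a^2 at 2.
    """
    a = Fraction(a)
    tail = 1 / (2 * a * a)
    return [(2 - a, tail), (2 + a, tail), (Fraction(2), 1 - 2 * tail)]

def mean_and_variance(a):
    """Exact mean and variance of D(a), computed from its three atoms."""
    atoms = atoms_of_d(a)
    mean = sum(x * p for x, p in atoms)
    var = sum((x - mean) ** 2 * p for x, p in atoms)
    return mean, var

# Arbitrary rational values of a in [1, 2), chosen for illustration.
for a in (Fraction(1), Fraction(5, 4), Fraction(3, 2), Fraction(7, 4)):
    mean, var = mean_and_variance(a)
    print(f"a = {a}: mean = {mean}, variance = {var}")
```

Using `Fraction` avoids floating-point rounding, so the equality of the means and variances across different $a$ can be tested exactly rather than approximately.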

When $X$ has distribution $\mathcal{D}(a)$ and $f$ is any transformation (a real-valued function of real numbers), compute that

$$E[f(X)] = \frac{f(2-a)}{2a^2} + \frac{f(2+a)}{2a^2} + f(2)\left(1 - \frac{1}{a^2}\right).$$

For example, when $f$ is the exponential,

$$E[e^X] = \frac{e^{2-a}}{2a^2} + \frac{e^{2+a}}{2a^2} + e^2\left(1 - \frac{1}{a^2}\right).\tag{*}$$

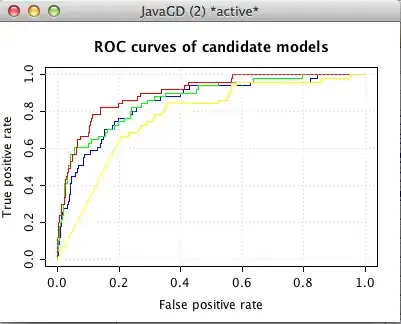

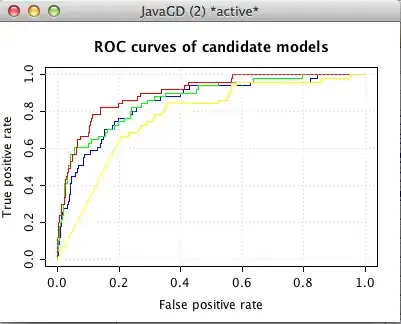

For $1\le a \lt 2,$ these values are all different. Here is a plot:

A similar analysis applies to $\log(X),$ showing it exists and has finite moments but that its expectation varies with $a.$
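To see numerically how these expectations move with $a,$ the following sketch evaluates the general formula for $E[f(X)]$ with $f=\exp$ and $f=\log.$ The helper name `expect` and the grid of $a$ values are assumptions made for illustration; the grid stays below $2$ so that $\log(2-a)$ is defined.

```python
import math

def expect(f, a):
    """E[f(X)] for X ~ D(a), using the three-atom formula.

    Hypothetical helper: probability 1/(2a^2) at each of 2-a and 2+a,
    and 1 - 1/a^2 at 2.
    """
    tail = 1.0 / (2.0 * a * a)
    return tail * f(2 - a) + tail * f(2 + a) + (1 - 2 * tail) * f(2)

# Illustrative grid of a values in [1, 2).
for a in (1.0, 1.25, 1.5, 1.75):
    print(f"a = {a:.2f}: "
          f"E[X] = {expect(lambda x: x, a):.6f}, "
          f"E[exp(X)] = {expect(math.exp, a):.6f}, "
          f"E[log(X)] = {expect(math.log, a):.6f}")
```

The identity function is included as a sanity check: its expectation should stay at $2$ for every $a,$ while the other two columns change.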

Consider a sequence of independent random variables $(X_n)=X_1, X_2,X_3,\ldots$ (a discrete time-series process) for which $X_n$ has $\mathcal{D}(1 + \exp(-n))$ for its distribution. Because $2 \gt 1+\exp(-n)\gt 1,$ all these variables are positive and bounded above by $4.$ Thus their logarithms and exponentials exist.

Since the first and second moments of the $X_n$ are finite and do not depend on $n,$ and all covariances between distinct terms are zero (by independence), this is a weakly stationary process. Nevertheless, as $(*)$ shows, the process $(e^{X_n})$ is not even weakly first-order stationary (despite having all finite moments) because its expectation varies with $n;$ and similarly $(\log(X_n)),$ although it is defined and has all finite moments, is also not weakly first-order stationary.
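The same conclusion can be tabulated along the process itself. The sketch below sets $a_n = 1+\exp(-n)$ and computes, for the first few $n,$ the mean and variance of $X_n$ together with the means of $e^{X_n}$ and $\log(X_n).$ The names `atoms` and `moment_summary` and the range of $n$ are hypothetical choices for this illustration.

```python
import math

def atoms(a):
    """(value, probability) pairs for D(a); hypothetical helper."""
    tail = 1.0 / (2.0 * a * a)
    return [(2 - a, tail), (2 + a, tail), (2.0, 1 - 2 * tail)]

def moment_summary(n):
    """Return (E[X_n], Var[X_n], E[exp(X_n)], E[log(X_n)]) for X_n ~ D(1 + exp(-n))."""
    a = 1 + math.exp(-n)
    pts = atoms(a)
    mean_x = sum(p * x for x, p in pts)
    var_x = sum(p * (x - mean_x) ** 2 for x, p in pts)
    mean_exp = sum(p * math.exp(x) for x, p in pts)
    mean_log = sum(p * math.log(x) for x, p in pts)
    return mean_x, var_x, mean_exp, mean_log

# First few terms of the process; the upper limit is arbitrary.
for n in range(1, 7):
    mean_x, var_x, mean_exp, mean_log = moment_summary(n)
    print(f"n = {n}: E[X] = {mean_x:.6f}, Var[X] = {var_x:.6f}, "
          f"E[exp(X)] = {mean_exp:.6f}, E[log(X)] = {mean_log:.6f}")
```

The first two columns should stay constant in $n$ (up to rounding), while the last two drift as $a_n$ decreases toward $1.$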